Shader Tutorial

Shadertoy Tutorial

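This is a series of tutorial articles written by an expert (official website), and it is well suited to beginners. You can study it alongside Shadertoy, the online GLSL ES shader tool. Theory has to be paired with practice: you only get results by experimenting with the examples yourself. It is also worth consulting the blog of Inigo Quilez, which has a large number of articles on shading. He is one of the founders of shadertoy.com, so he has published many example programs on the Shadertoy site, all of them valuable references.

I have copied the tutorial here. I went to that trouble for two reasons: first, I really like this introductory tutorial, whose examples are short, focused, and easy to understand; second, for reasons that cannot be stated, this foreign site may become inaccessible some day.

Tutorial Contents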

- Tutorial Part 1 - Intro

- Tutorial Part 2 - Circles and Animation

- Tutorial Part 3 - Squares and Rotation

- Tutorial Part 4 - Multiple 2D Shapes and Mixing

- Tutorial Part 5 - 2D SDF Operations and More 2D Shapes

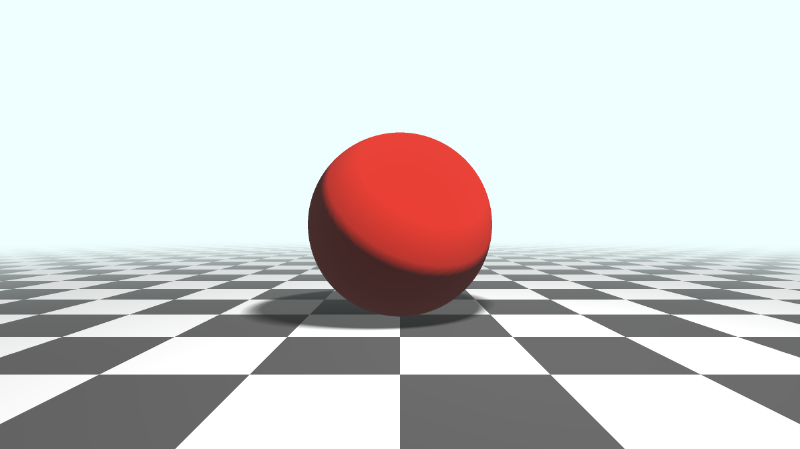

- Tutorial Part 6 - 3D Scenes with Ray Marching

- Tutorial Part 7 - Unique Colors and Multiple 3D Objects

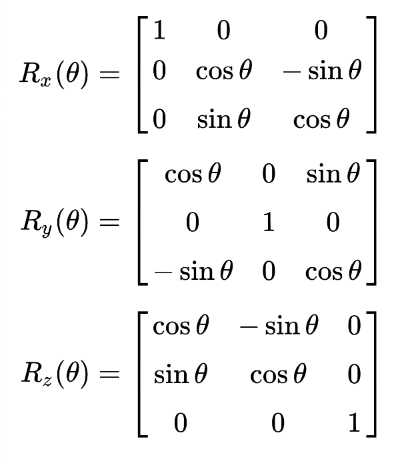

- Tutorial Part 8 - 3D Rotation

- Tutorial Part 9 - Camera Movement

- Tutorial Part 10 - Camera Model with a Lookat Point

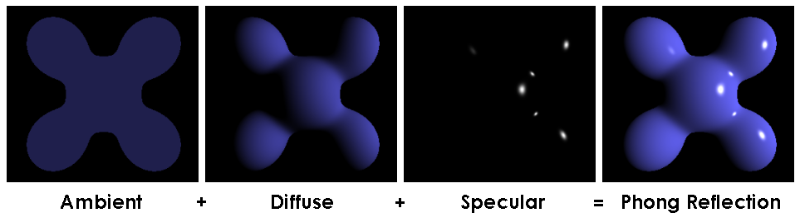

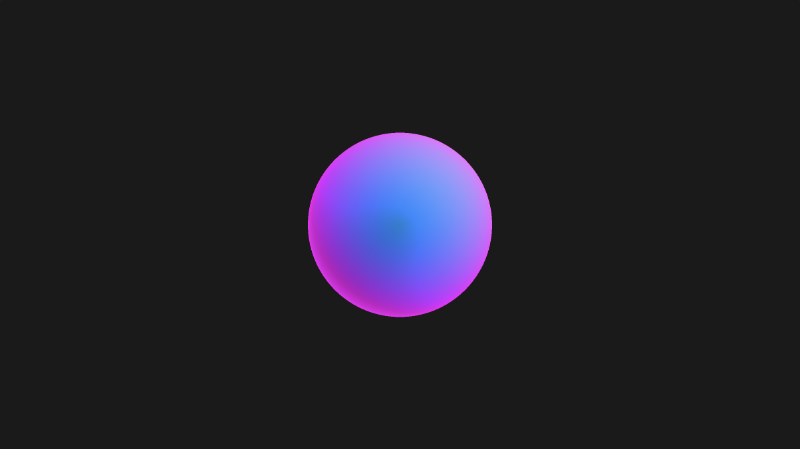

- Tutorial Part 11 - Phong Reflection Model

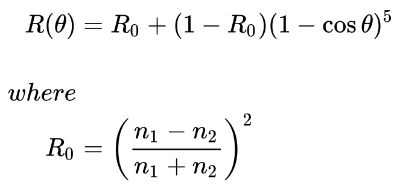

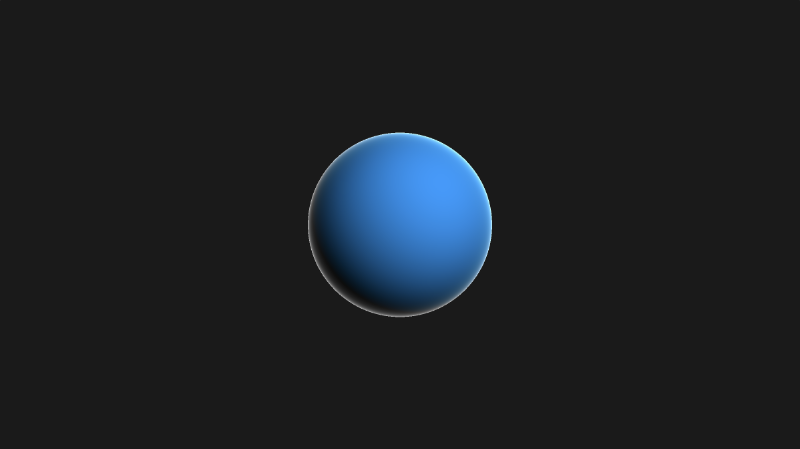

- Tutorial Part 12 - Fresnel and Rim Lighting

- Tutorial Part 13 - Shadows

- Tutorial Part 14 - SDF Operations

- Tutorial Part 15 - Channels, Textures, and Buffers

- Tutorial Part 16 - Cubemaps and Reflections

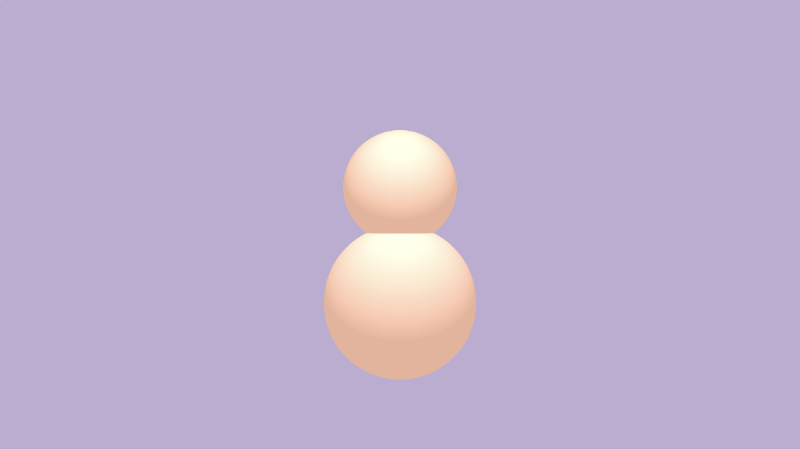

- Snowman Shader in Shadertoy

- Shader Resources

- Glow Shader in Shadertoy

Tutorial Part 1 - Intro

Reposted from: https://inspirnathan.com/posts/47-shadertoy-tutorial-part-1/

Greetings, friends! I’ve recently been fascinated with shaders and how amazing they are. Today, I will talk about how we can create pixel shaders using an amazing online tool called Shadertoy, created by Inigo Quilez and Pol Jeremias, two extremely talented people.

What are Shaders?

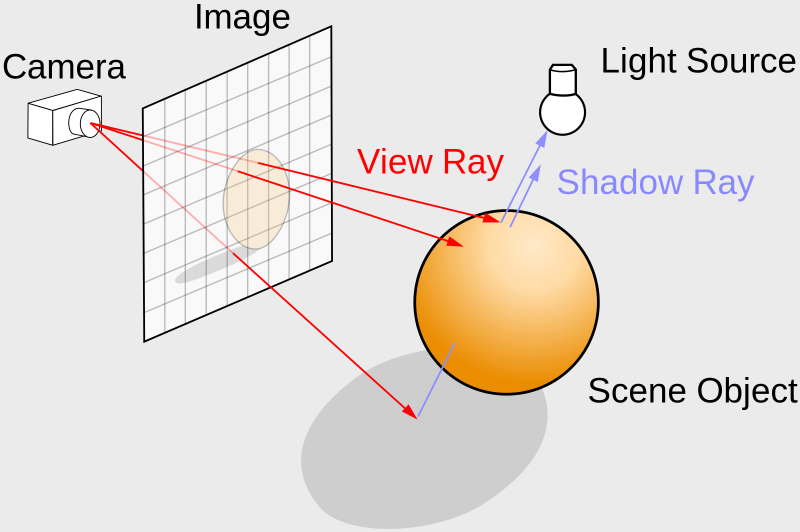

Shaders are powerful programs that were originally meant for shading objects in a 3D scene. Nowadays, shaders serve multiple purposes. Shader programs typically run on your computer’s graphics processing unit (GPU) where they can run in parallel.

TIP

Understanding that shaders run in parallel on your GPU is extremely important. Your program will independently run for every pixel in Shadertoy at the same time.

Shader languages such as the High-Level Shading Language (HLSL) and OpenGL Shading Language (GLSL) are the most common languages used to program the GPU’s rendering pipeline. These languages have syntax similar to the C programming language.

When you’re playing a game such as Minecraft, shaders are used to make the world seem 3D as you’re viewing it from a 2D screen (i.e. your computer monitor or your phone’s screen). Shaders can also drastically change the look of a game by adjusting how light interacts with objects or how objects are rendered to the screen. This YouTube video showcases 10 shaders that can make Minecraft look totally different and demonstrate the beauty of shaders.

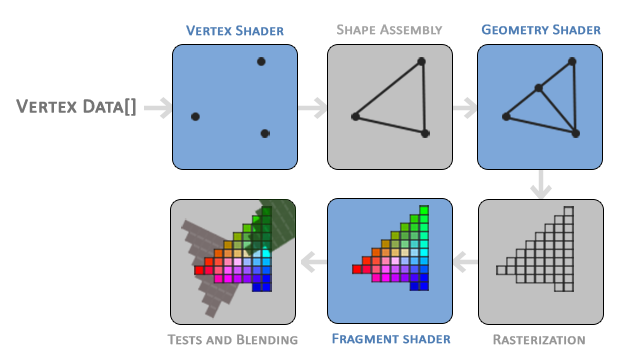

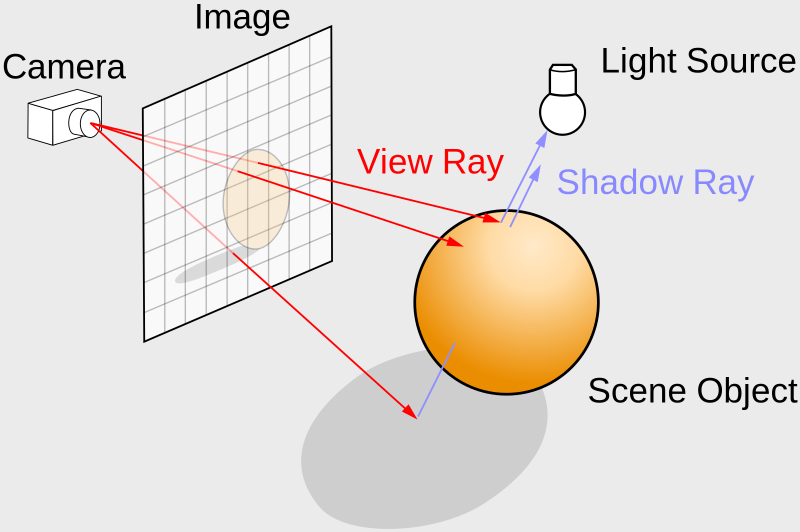

You’ll mostly see shaders come in two forms: vertex shaders and fragment shaders. The vertex shader is used to create vertices of 3D meshes of all kinds of objects such as spheres, cubes, elephants, protagonists of a 3D game, etc. The information from the vertex shader is passed to the geometry shader, which can then manipulate these vertices or perform extra operations before the fragment shader. You typically won’t hear geometry shaders being discussed much. The final part of the pipeline is the fragment shader. The fragment shader calculates the final color of the pixel and determines whether a pixel should even be shown to the user.

Stages of the graphics pipeline by Learn OpenGL

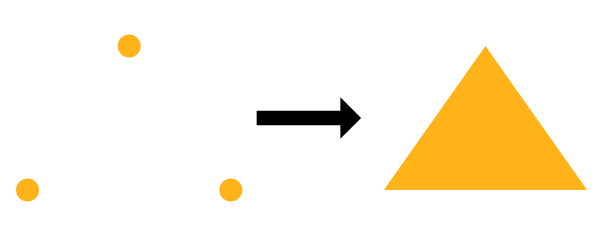

As an example, suppose we have a vertex shader that draws three points/vertices to the screen in the shape of a triangle. Once those vertices pass to the fragment shader, the pixel color between each vertex can be filled in automatically. The GPU understands how to interpolate values extremely well. Assuming a color is assigned to each vertex in the vertex shader, the GPU can interpolate colors between each vertex to fill in the triangle.

In game engines like Unity or Unreal, vertex shaders and fragment shaders are used heavily for 3D games. Unity provides an abstraction on top of shaders called ShaderLab, which is a language that sits on top of HLSL to make writing shaders for your games easier. Additionally, Unity provides a visual tool called Shader Graph that lets you build shaders without writing code. If you search for “Unity shaders” on Google, you’ll find hundreds of shaders that perform lots of different functions. You can create shaders that make objects glow, make characters become translucent, and even create “image effects” that apply a shader to the entire view of your game. There are an infinite number of ways you can use shaders.

You may often hear fragment shaders referred to as pixel shaders. The term “fragment shader” is more accurate because shaders can prevent pixels from being drawn to the screen. In some applications such as Shadertoy, you’re stuck drawing every pixel to the screen, so it makes more sense to call them pixel shaders in that context.

Shaders are also responsible for rendering the shading and lighting in your game, but they can be used for more than that. A shader program can run on the GPU, so why not take advantage of the parallelization it offers? You can create a compute shader that runs heavy calculations on the GPU instead of the CPU. In fact, TensorFlow.js takes advantage of the GPU to train machine learning models faster in the browser.

Shaders are powerful programs indeed!

What is Shadertoy?

In the next series of posts, I will be talking about Shadertoy. Shadertoy is a website that helps users create pixel shaders and share them with others, similar to Codepen with HTML, CSS, and JavaScript.

TIP

When following along with this tutorial, please make sure you’re using a modern browser that supports WebGL 2.0 such as Google Chrome.

Shadertoy leverages the WebGL API to render graphics in the browser using the GPU. WebGL lets you write shaders in GLSL and supports hardware acceleration. That is, you can leverage the GPU to manipulate pixels on the screen in parallel to speed up rendering. Remember how you had to use canvas.getContext('2d') when working with the HTML Canvas API? Shadertoy uses a canvas with the webgl context instead of 2d, so you can draw pixels to the screen with higher performance using WebGL.

WARNING

Although Shadertoy uses the GPU to help boost rendering performance, your computer may slow down a bit when opening someone’s Shadertoy shader that performs heavy calculations. Please make sure your computer’s GPU can handle it, and understand that it may drain a device’s battery fairly quickly.

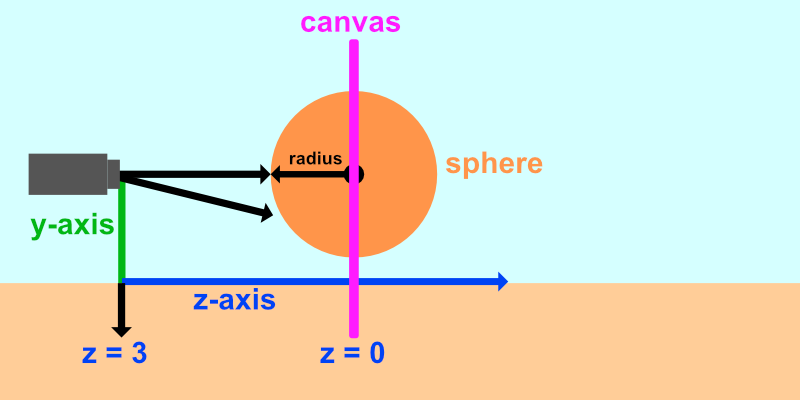

Modern 3D game engines such as Unity and the Unreal Engine and 3D modelling software such as Blender run very quickly because they use both a vertex and fragment shader, and they perform a lot of optimizations for you. In Shadertoy, you don’t have access to a vertex shader. You have to rely on algorithms such as ray marching and signed distance fields/functions (SDFs) to render 3D scenes which can be computationally expensive.

Please note that writing shaders in Shadertoy does not guarantee they will work in other environments such as Unity. You may have to translate the GLSL code to syntax supported by your target environment such as HLSL. Shadertoy also provides global variables that may not be supported in other environments. Don’t let that stop you though! It’s entirely possible to make adjustments to your Shadertoy code and use them in your games or modelling software. It just requires a bit of extra work. In fact, Shadertoy is a great way to experiment with shaders before using them in your preferred game engine or modelling software.

Shadertoy is a great way to practice creating shaders with GLSL and helps you think more mathematically. Drawing 3D scenes requires a lot of vector arithmetic. It’s intellectually stimulating and a great way to show off your skills to your friends. If you browse across Shadertoy, you’ll see tons of beautiful creations that were drawn with just math and code! Once you get the hang of Shadertoy, you’ll find it’s really fun!

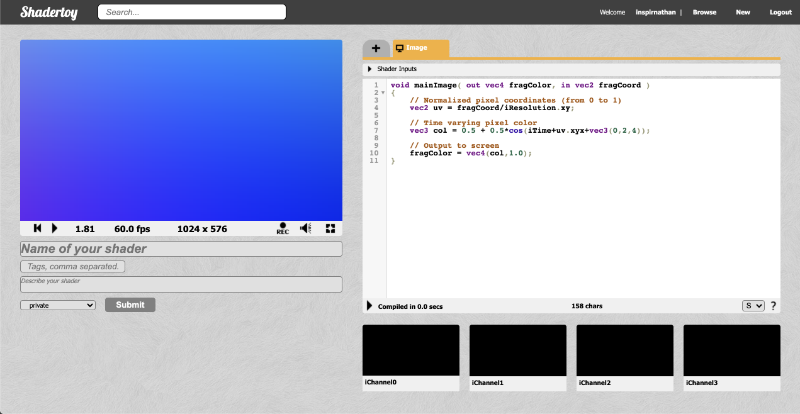

Introduction to Shadertoy

Shadertoy takes care of setting up an HTML canvas with WebGL support, so all you have to worry about is writing the shader logic in the GLSL programming language. As a downside, Shadertoy doesn’t let you write vertex shaders and only lets you write pixel shaders. It essentially provides an environment for experimenting with the fragment side of shaders, so you can manipulate all pixels on the canvas in parallel.

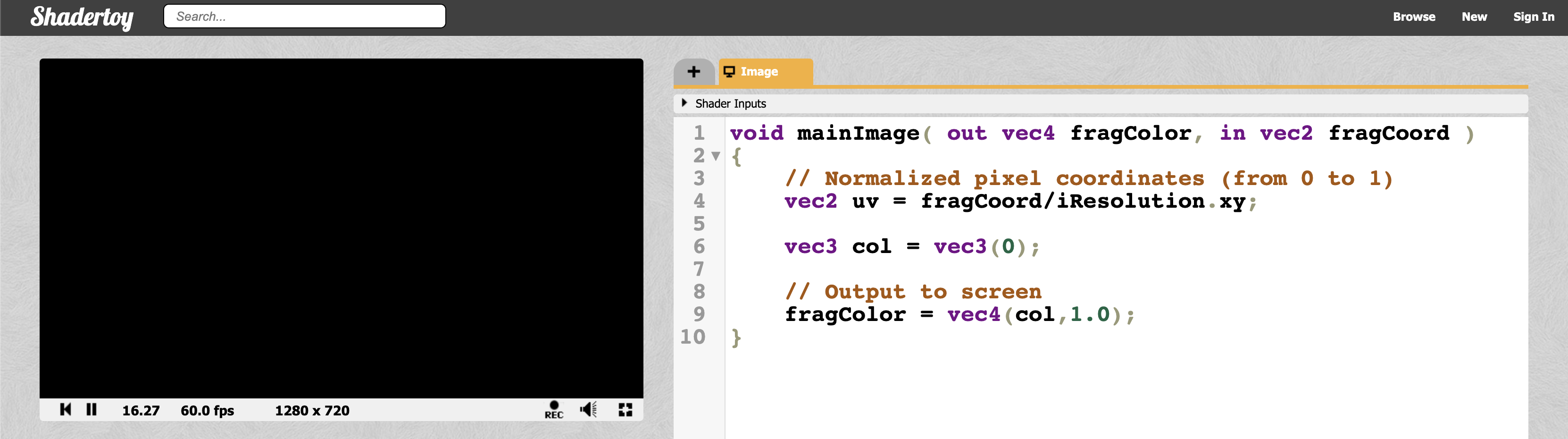

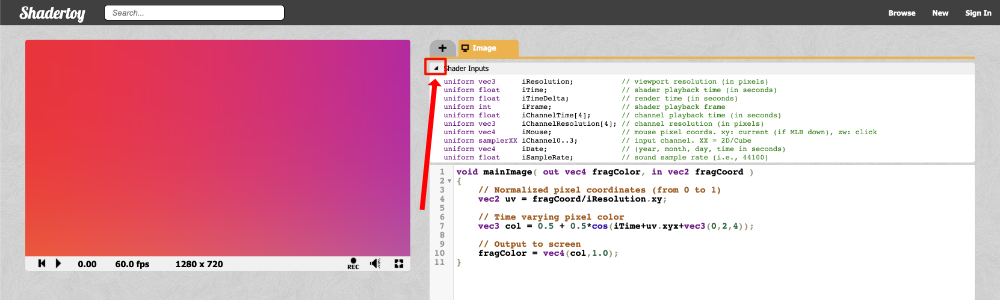

On the top navigation bar of Shadertoy, you can click on New to start a new shader.

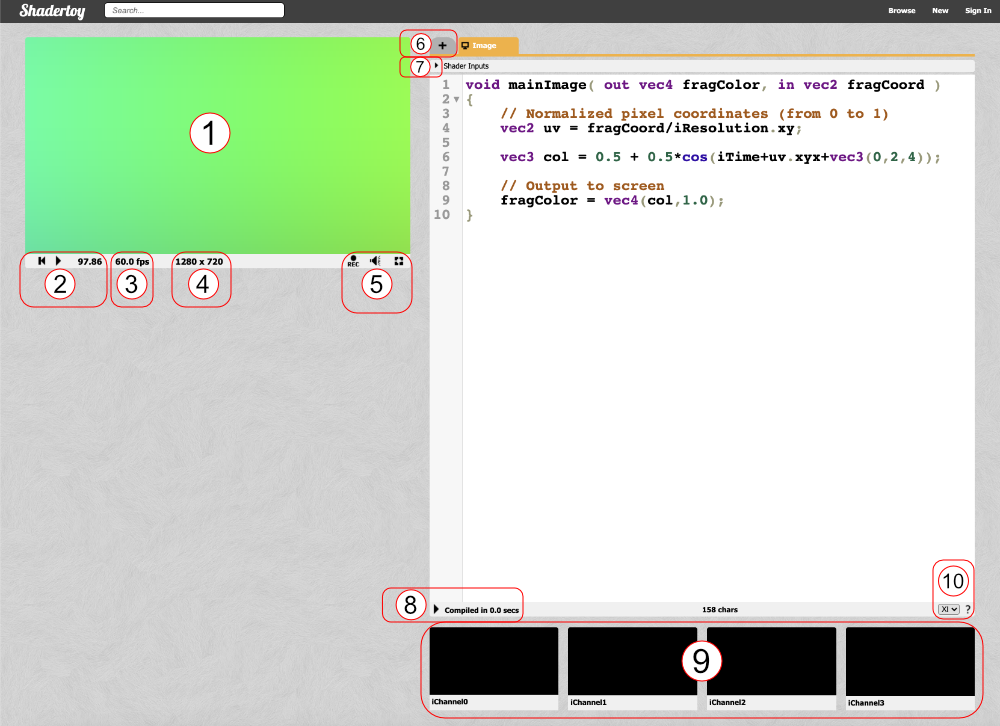

Let’s analyze everything we see on the screen. Obviously, we see a code editor on the right-hand side for writing our GLSL code, but let me go through most of the tools available as they are numbered in the image above.

- The canvas for displaying the output of your shader code. Your shader will run for every pixel in the canvas in parallel.

- Left: rewind time back to zero. Center: play/pause the shader animations. Right: Time in seconds since page loaded.

- The frames per second (fps) will let you know how well your computer can handle the shader. Typically runs around 60fps or lower.

- Canvas resolution in width by height. These values are given to you in the “iResolution” global variable.

- Left: record an html video by pressing it, recording, and pressing it again. Middle: Adjust volume for audio playing in your shader. Right: Press the symbol to expand the canvas to full screen mode.

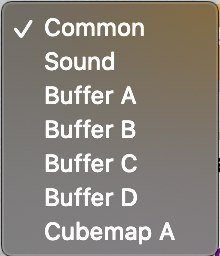

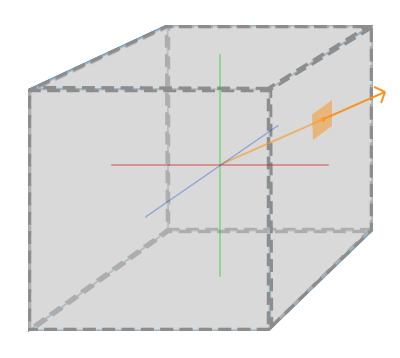

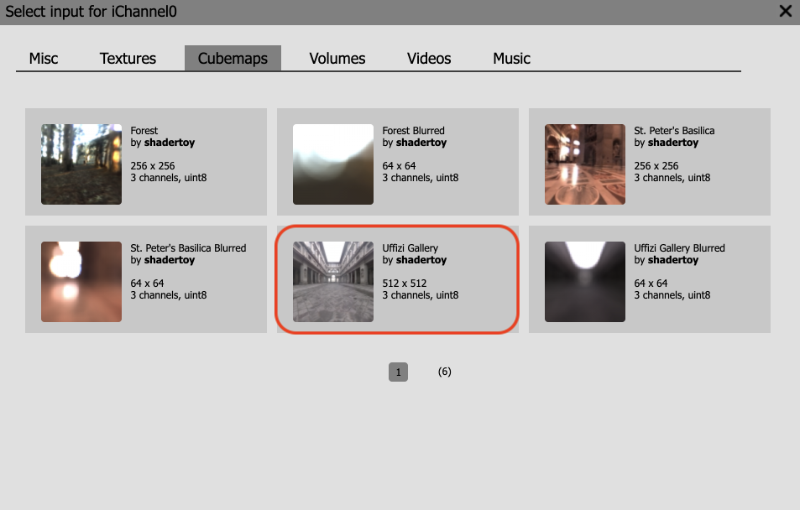

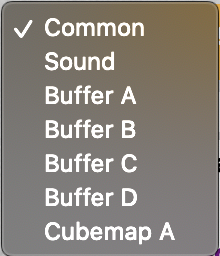

- Click the plus icon to add additional scripts. The buffers (A, B, C, D) can be accessed using “channels” Shadertoy provides. Use “Common” to share code between scripts. Use “Sound” when you want to write a shader that generates audio. Use “Cubemap” to generate a cubemap.

- Click on the small arrow to see a list of global variables that Shadertoy provides. You can use these variables in your shader code.

- Click on the small arrow to compile your shader code and see the output in the canvas. You can use Alt+Enter or Option+Enter to quickly compile your code. You can click on the “Compiled in …” text to see the compiled code.

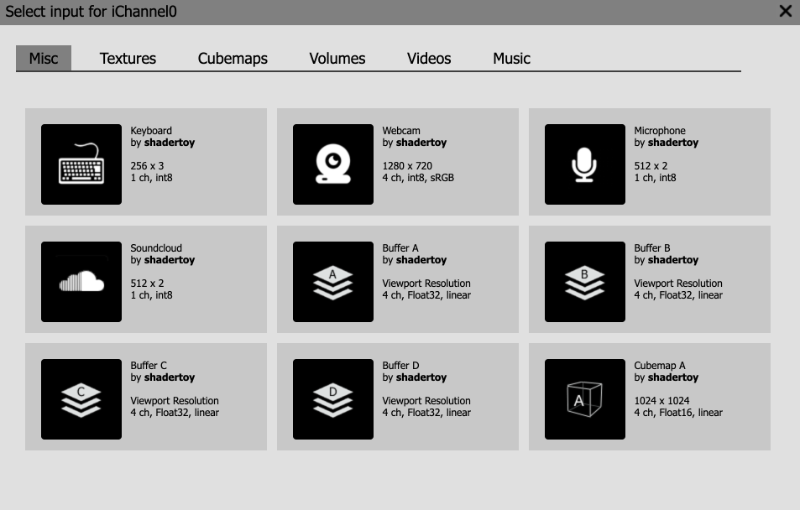

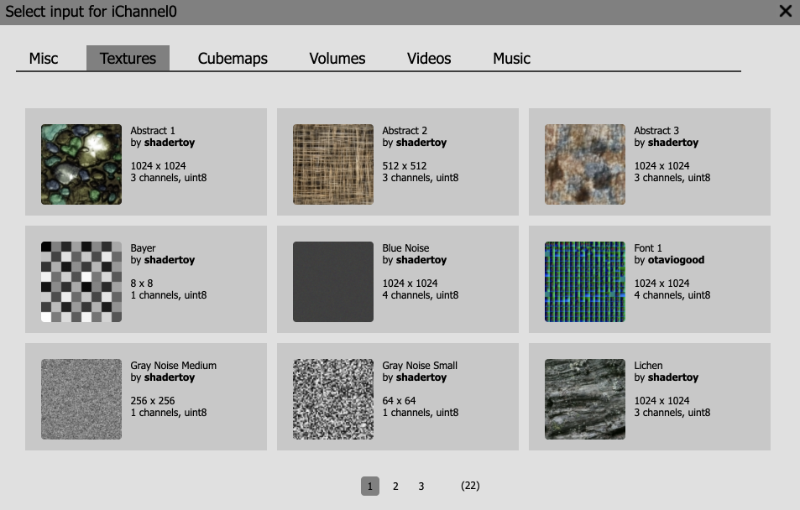

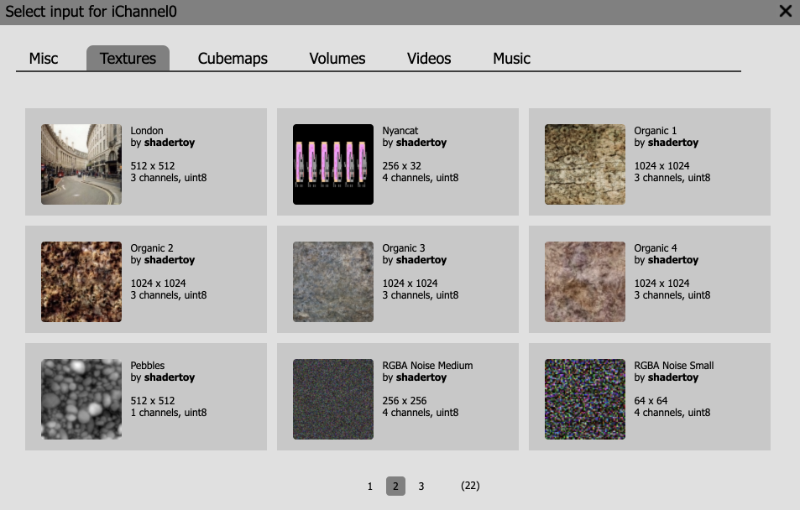

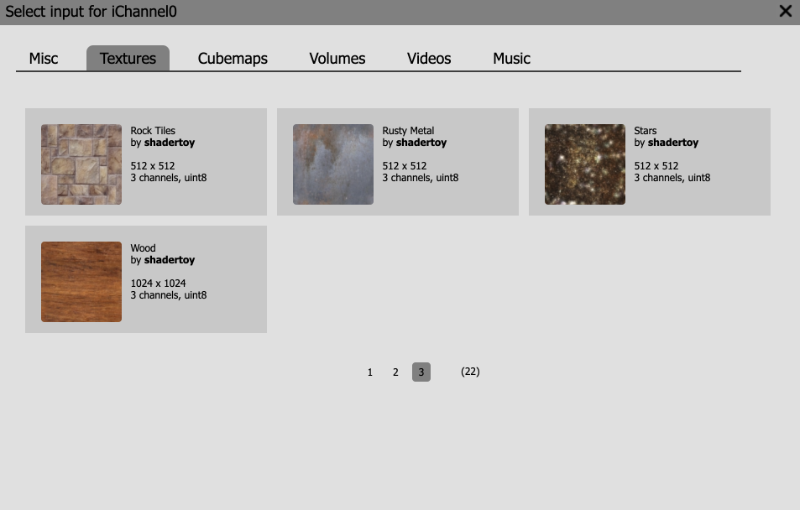

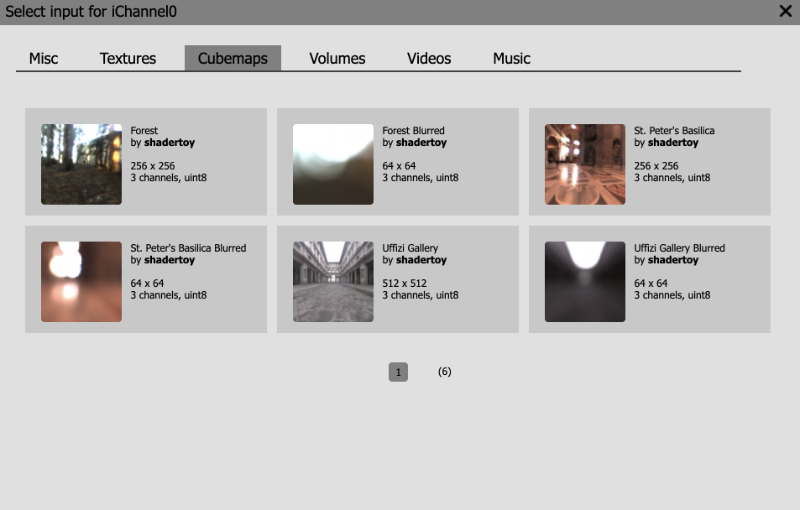

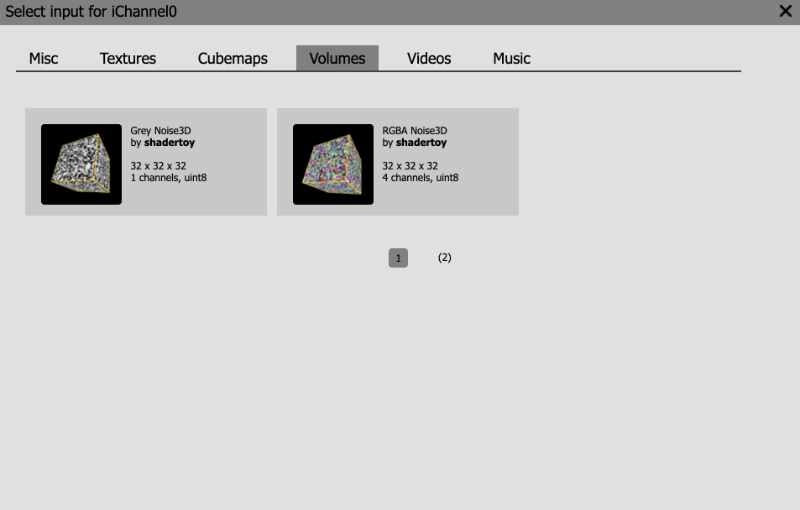

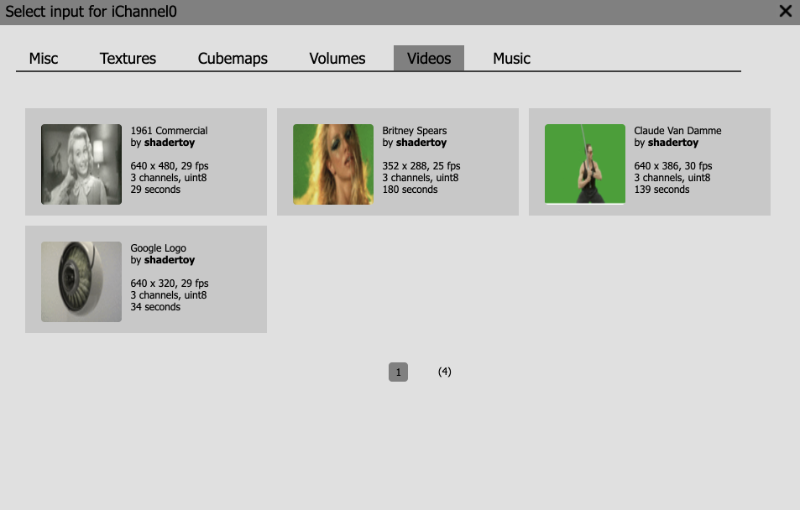

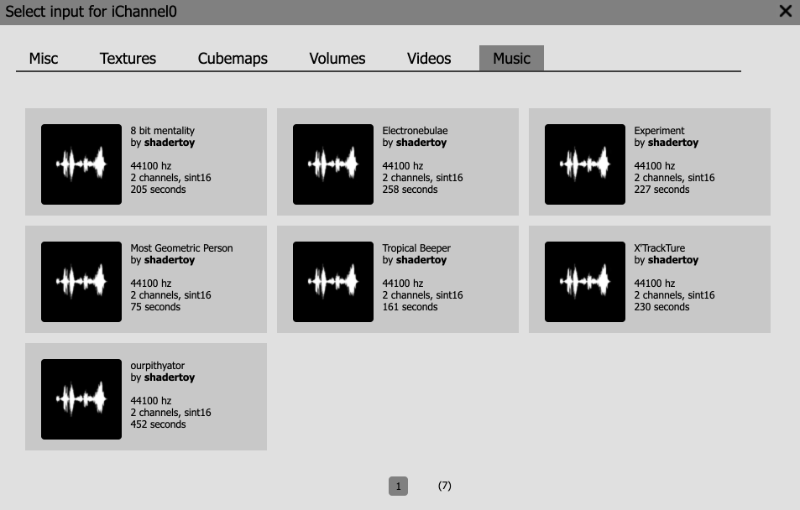

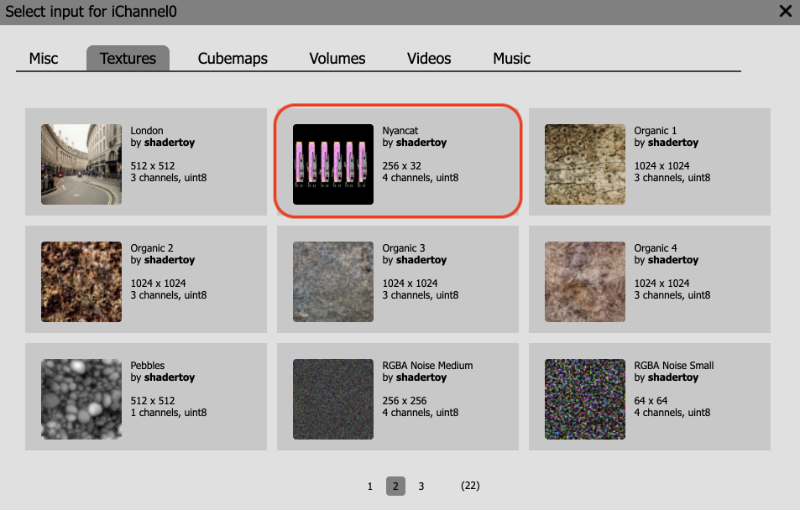

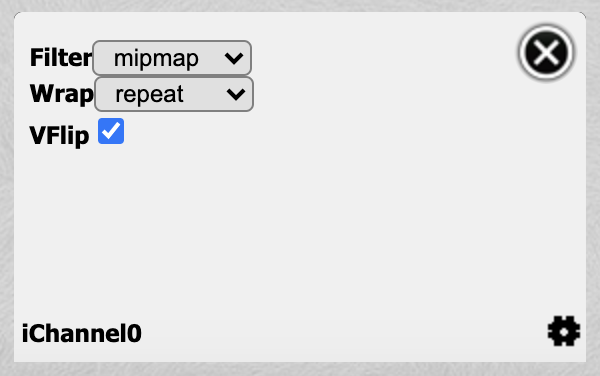

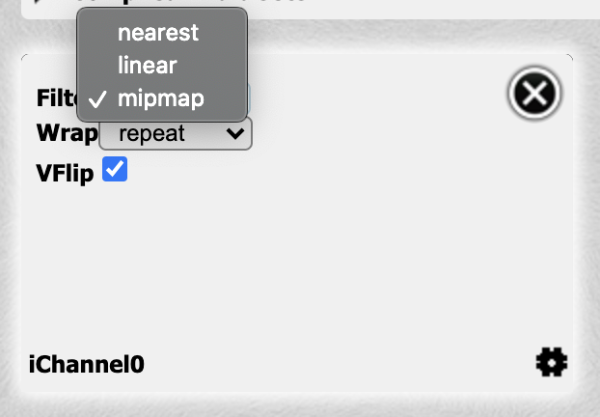

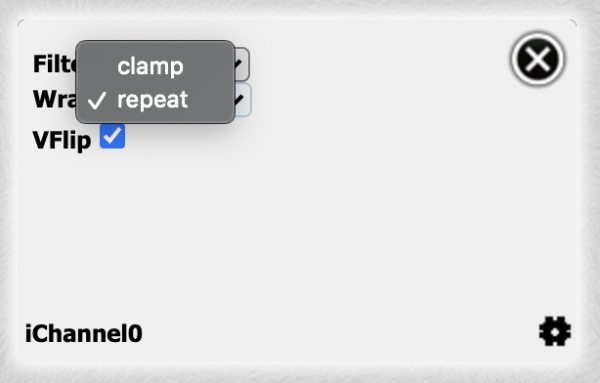

- Shadertoy provides four channels that can be accessed in your code through global variables such as “iChannel0”, “iChannel1”, etc. If you click on one of the channels, you can add textures or interactivity to your shader in the form of keyboard, webcam, audio, and more.

- Shadertoy gives you the option to adjust the size of your text in the code window. If you click the question mark, you can see information about the compiler being used to run your code. You can also see what functions or inputs were added by Shadertoy.

Shadertoy provides a nice environment to write GLSL code, but keep in mind that it injects variables, functions, and other utilities that may make it slightly different from GLSL code you may write in other environments. Shadertoy provides these as a convenience to you as you’re developing your shader. For example, the variable, “iTime”, is a global variable given to you to access the time (in seconds) that has passed since the page loaded.
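As a minimal sketch of how an injected global such as iTime can be used, the shader below fades the whole canvas between black and red. The variable name pulse is only illustrative, and the mainImage entry point it relies on is explained in the next section.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    // iTime is injected by Shadertoy: elapsed time in seconds, as a float
    float pulse = 0.5 + 0.5 * sin(iTime); // remap sine from <-1,1> to <0,1>

    // Fade the whole canvas between black and red
    fragColor = vec4(pulse, 0.0, 0.0, 1.0);
}
```

Outside Shadertoy, a variable like iTime would have to be declared and supplied by the host application.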

Understanding Shader Code

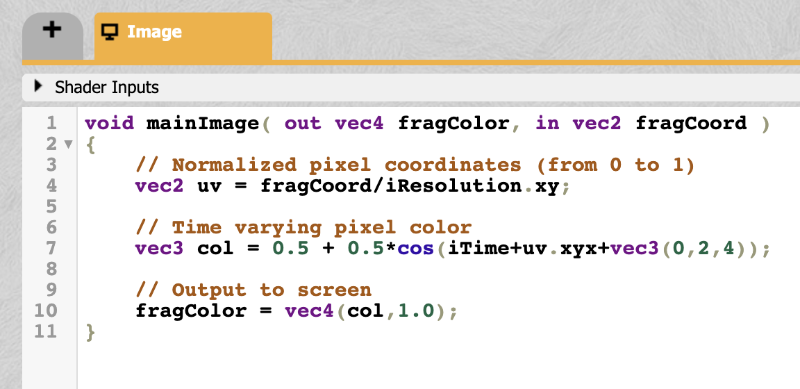

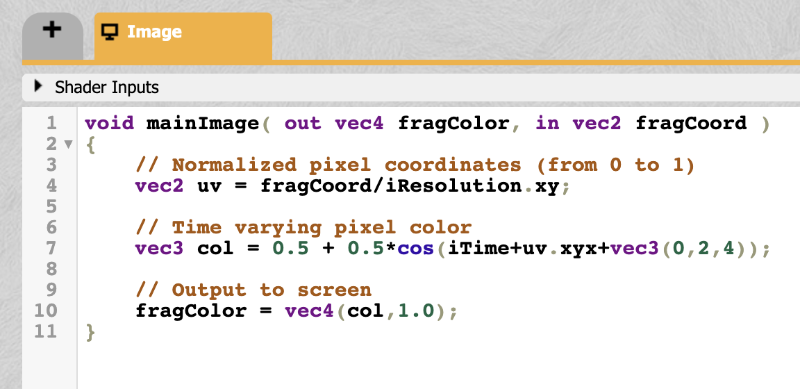

When you first start a new shader in Shadertoy, you will find the following code:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        // Normalized pixel coordinates (from 0 to 1)
        vec2 uv = fragCoord/iResolution.xy;

        vec3 col = 0.5 + 0.5*cos(iTime+uv.xyx+vec3(0,2,4));

        // Output to screen
        fragColor = vec4(col,1.0);
    }

You can run the code by pressing the small arrow as mentioned in section 8 in the image above, or by pressing Alt+Enter or Option+Enter as a keyboard shortcut.

If you’ve never worked with shaders before, that’s okay! I’ll try my best to explain the GLSL syntax you use to write shaders in Shadertoy. Right away, you will notice that this is a statically typed language like C, C++, Java, and C#. GLSL uses the concept of types too. Some of these types include: bool (boolean), int (integer), float (floating-point number), and vec (vector). GLSL also requires a semicolon at the end of each statement. Otherwise, the compiler will throw an error.

In the code snippet above, we are defining a mainImage function that must be present in our Shadertoy shader. It returns nothing, so the return type is void. It accepts two parameters: fragColor and fragCoord.

You may be scratching your head at the in and out. For Shadertoy, you generally have to worry about these keywords inside the mainImage function only. Remember how I said that the shaders allow us to write programs for the GPU rendering pipeline? Think of the in and out as the input and output. Shadertoy gives us an input, and we are writing a color as the output.
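The same qualifiers can be used on your own functions. The sketch below is only an illustration: makeGradient is a hypothetical helper, not something Shadertoy provides. It reads its in parameter and writes its result through an out parameter, the same pattern mainImage follows.

```glsl
// Hypothetical helper: "in" values are passed in by the caller,
// "out" values are written back to the caller when the function returns
void makeGradient( in vec2 uv, out vec3 color )
{
    color = vec3(uv.x, uv.y, 0.0);
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec2 uv = fragCoord/iResolution.xy;

    vec3 col;              // has no defined value until makeGradient writes to it
    makeGradient(uv, col);

    fragColor = vec4(col, 1.0);
}
```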

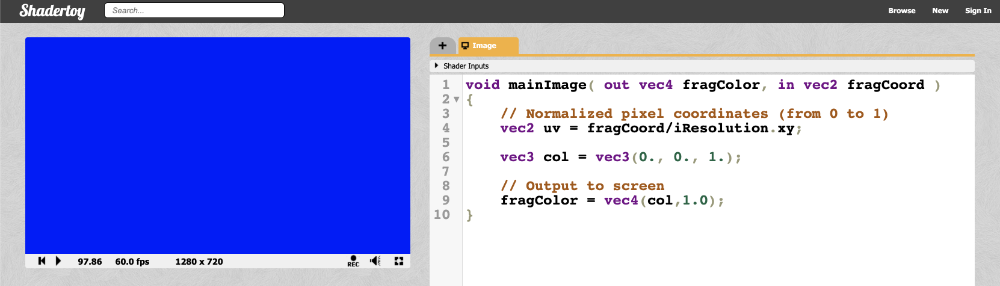

Before we continue, let’s change the code to something a bit simpler:

1void mainImage( out vec4 fragColor, in vec2 fragCoord )

2{

3 // Normalized pixel coordinates (from 0 to 1)

4 vec2 uv = fragCoord/iResolution.xy;

5

6 vec3 col = vec3(0., 0., 1.); // RGB values

7

8 // Output to screen

9 fragColor = vec4(col,1.0);

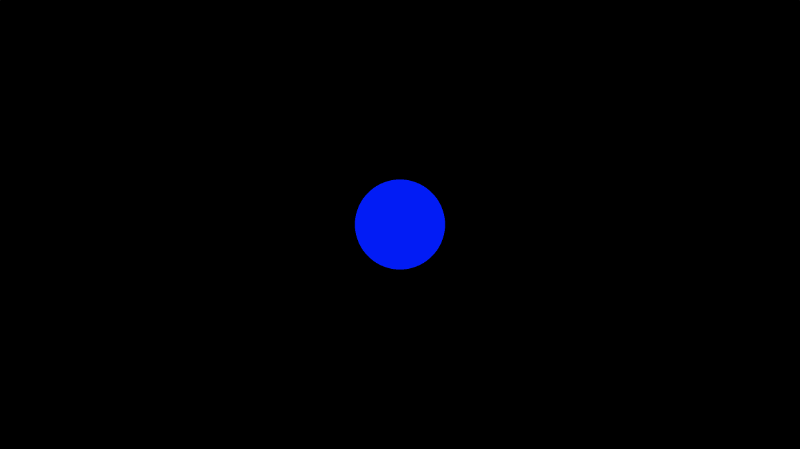

10}When we run the shader program, we should end up with a completely blue canvas. The shader program runs for every pixel on the canvas IN PARALLEL. This is extremely important to keep in mind. You have to think about how to write code that will change the color of the pixel depending on the pixel coordinate. It turns out we can create amazing pieces of artwork with just the pixel coordinates!

In shaders, we specify RGB (red, green, blue) values using a range between zero and one. If you have color values that are between 0 and 255, you can normalize them by dividing by 255.
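For example, an orange given as the 8-bit values (255, 165, 0), chosen here purely for illustration, can be normalized like this:

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    // Dividing a vector by a float divides each component
    vec3 col = vec3(255., 165., 0.) / 255.; // roughly (1.0, 0.647, 0.0)

    fragColor = vec4(col, 1.0);
}
```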

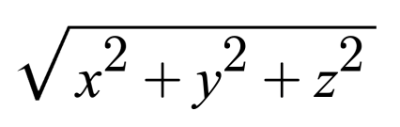

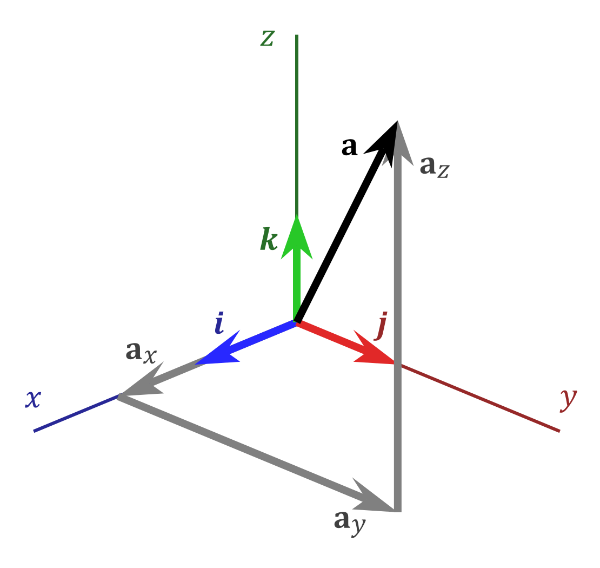

So we’ve seen how to change the color of the canvas, but what’s going on inside our shader program? The first line inside the mainImage function declares a variable called uv that is of type vec2. If you remember your vector arithmetic in school, this means we have a vector with an “x” component and a “y” component. A variable with the type, vec3, would have an additional “z” component.

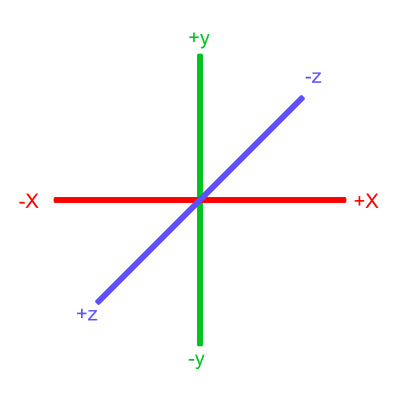

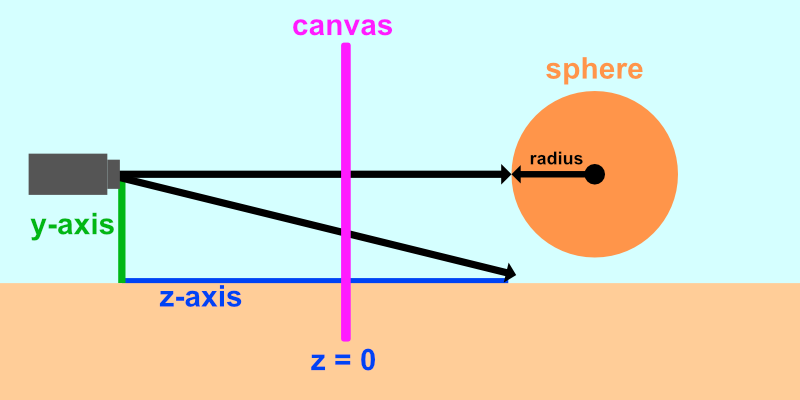

You may have learned in school about the 3D coordinate system. It lets us graph 3D coordinates on pieces of paper or some other flat surface. Obviously, visualizing 3D on a 2D surface is a bit difficult, so brilliant mathematicians of old created a 3D coordinate system to help us visualize points in 3D space.

However, you should think of vectors in shader code as “arrays” that can hold between one and four values. Sometimes, vectors can hold information about the XYZ coordinates in 3D space or they can contain information about RGB values. Therefore, the following are equivalent in shader programs:

    color.r = color.x
    color.g = color.y
    color.b = color.z
    color.a = color.w

Yes, there can be variables with the type, vec4, and the letter, w or a, is used to represent a fourth value. The a stands for “alpha”, since colors can have an alpha channel as well as the normal RGB values. I guess they chose w because it’s before x in the alphabet, and they already reached the last letter 🤷.
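A short sketch of the two naming schemes used side by side. The variable names are illustrative only.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec4 color = vec4(0.1, 0.2, 0.3, 1.0);

    float red   = color.r; // 0.1, the same component as color.x
    float green = color.y; // 0.2, the same component as color.g
    float alpha = color.w; // 1.0, the same component as color.a

    // Rebuild the color from the pieces read above
    fragColor = vec4(red, green, color.b, alpha);
}
```

Which set of letters to use is a readability choice: rgba tends to be used for colors and xyzw for positions.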

The uv variable doesn’t really represent an acronym for anything. It refers to the topic of UV Mapping that is commonly used to map pieces of a texture (such as an image) on 3D objects. The concept of UV mapping is more applicable to environments that give you access to a vertex shader unlike Shadertoy, but you can still leverage texture data in Shadertoy.

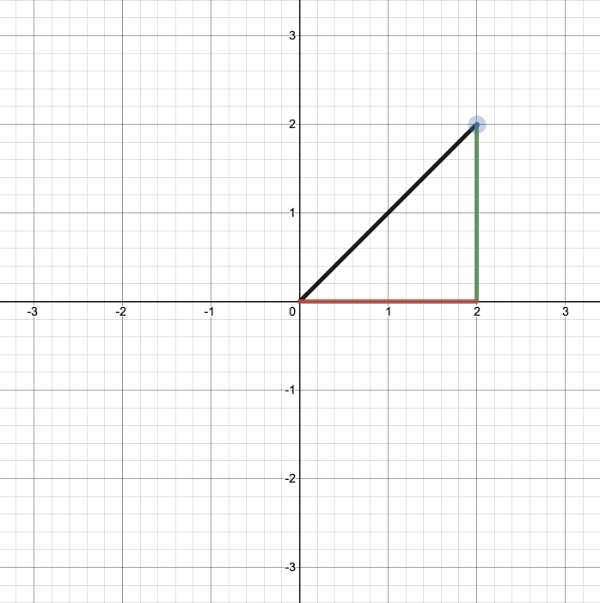

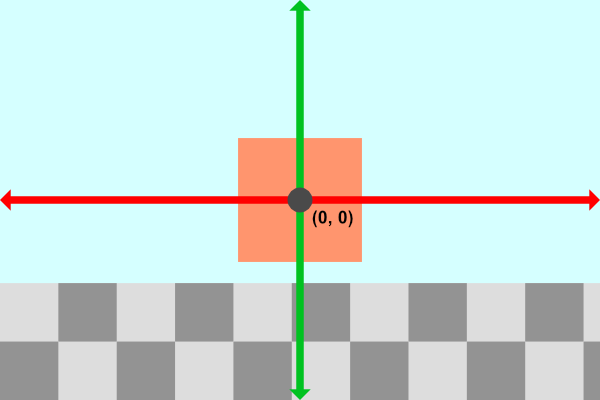

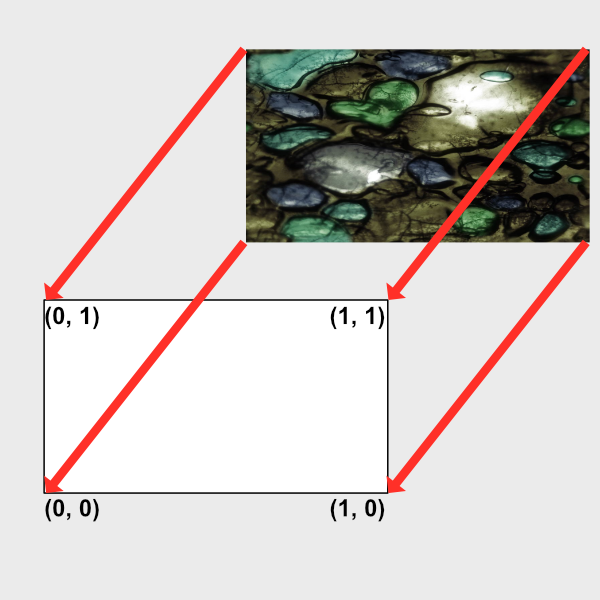

The fragCoord variable represents the XY coordinate of the canvas. The bottom-left corner starts at (0, 0) and the top-right corner is (iResolution.x, iResolution.y). By dividing fragCoord by iResolution.xy, we are able to normalize the pixel coordinates between zero and one.

Notice that we can perform arithmetic quite easily between two variables that are the same type, even if they are vectors. It’s the same as performing operations on the individual components:

    uv = fragCoord/iResolution.xy

    // The above is the same as:
    uv.x = fragCoord.x/iResolution.x
    uv.y = fragCoord.y/iResolution.y

When we say something like iResolution.xy, the .xy portion refers to only the XY component of the vector. This lets us strip off only the components of the vector we care about even if iResolution happens to be of type vec3.

According to this Stack Overflow post, the z-component represents the pixel aspect ratio, which is usually 1.0. A value of one means your display has square pixels. You typically won’t see people using the z-component of iResolution that often, if at all.

We can also perform shortcuts when defining vectors. The code snippet below will set the color of the entire canvas to black.

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        // Normalized pixel coordinates (from 0 to 1)
        vec2 uv = fragCoord/iResolution.xy;

        vec3 col = vec3(0); // Same as vec3(0, 0, 0)

        // Output to screen
        fragColor = vec4(col,1.0);
    }

When we define a vector, the shader code is smart enough to apply the same value across all components of the vector if you only specify one value. Therefore vec3(0) gets expanded to vec3(0,0,0).

TIP

If you try to use values less than zero as the output fragment color, it will be clamped to zero. Likewise, any values greater than one will be clamped to one. This only applies to color values in the final fragment color.
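The clamping described above can be written out explicitly with the built-in clamp function. This is only a sketch with made-up input values. On the final canvas, writing raw directly would be expected to look the same as writing col.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec3 raw = vec3(1.5, -0.25, 0.5); // out of range on purpose
    vec3 col = clamp(raw, 0.0, 1.0);  // (1.0, 0.0, 0.5)

    fragColor = vec4(col, 1.0);
}
```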

It’s important to keep in mind that debugging in Shadertoy and in most shader environments, in general, is mostly visual. You don’t have anything like console.log to come to your rescue. You have to use color to help you debug.

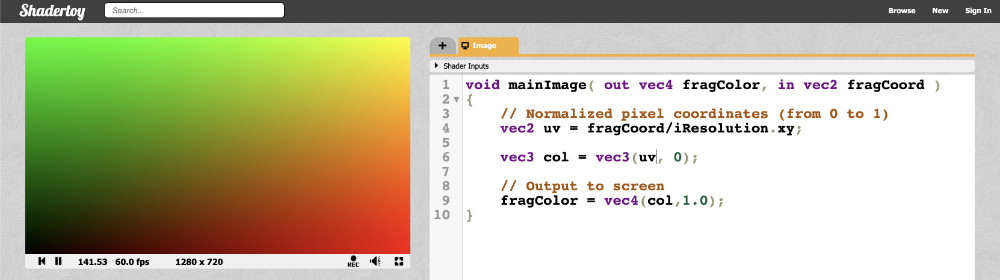

Let’s try visualizing the pixel coordinates on the screen with the following code:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        // Normalized pixel coordinates (from 0 to 1)
        vec2 uv = fragCoord/iResolution.xy;

        vec3 col = vec3(uv, 0); // This is the same as vec3(uv.x, uv.y, 0)

        // Output to screen
        fragColor = vec4(col,1.0);
    }

We should end up with a canvas that is a mixture of black, red, green, and yellow.

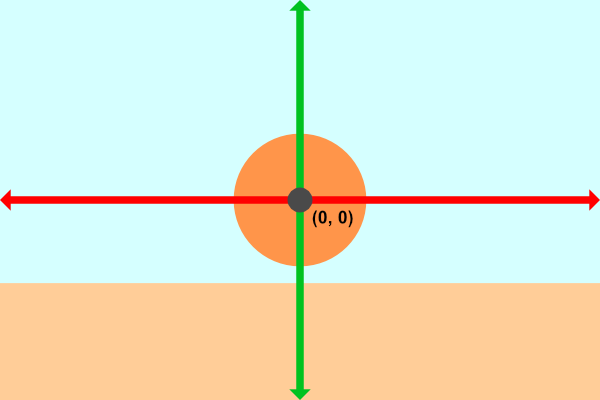

This looks pretty, but how does it help us? The uv variable represents the normalized canvas coordinates between zero and one on both the x-axis and the y-axis. The bottom-left corner of the canvas has the coordinate (0, 0). The top-right corner of the canvas has the coordinate (1, 1).

Inside the col variable, we are setting it equal to (uv.x, uv.y, 0), which means we shouldn’t expect any blue color in the canvas. When uv.x and uv.y equal zero, then we get black. When they are both equal to one, then we get yellow because in computer graphics, yellow is a combination of red and green values. The top-left corner of the canvas is (0, 1), which would mean the col variable would be equal to (0, 1, 0) which is the color green. The bottom-right corner has the coordinate of (1, 0), which means col equals (1, 0, 0) which is the color red.

Let the colors guide you in your debugging process!
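The same idea works for any intermediate value, not just coordinates. In the sketch below, value is a hypothetical quantity under inspection. It is shown as grayscale, and anything outside the range zero to one is flagged in red so that clamping does not hide it.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec2 uv = fragCoord/iResolution.xy;

    // Hypothetical value we want to inspect
    float value = uv.x * uv.y;

    // Grayscale: black where value is 0, white where it is 1
    vec3 col = vec3(value);

    // Flag out-of-range values in red
    if (value < 0.0 || value > 1.0) col = vec3(1.0, 0.0, 0.0);

    fragColor = vec4(col, 1.0);
}
```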

Conclusion

Phew! I covered quite a lot about shaders and Shadertoy in this article. I hope you’re still with me! When I was learning shaders for the first time, it was like entering a completely new realm of programming. It’s completely different from what I’m used to, but it’s exciting and challenging! In the next series of posts, I’ll discuss how we can create shapes on the canvas and make animations!


Tutorial Part 2 - Circles and Animation

Reposted from: https://inspirnathan.com/posts/48-shadertoy-tutorial-part-2

Greetings, friends! Today, we’ll talk about how to draw and animate a circle in a pixel shader using Shadertoy.

Practice

Before we draw our first 2D shape, let’s practice a bit more with Shadertoy. Create a new shader and replace the starting code with the following:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 uv = fragCoord/iResolution.xy; // <0,1>

        vec3 col = vec3(0); // start with black

        if (uv.x > .5) col = vec3(1); // make the right half of the canvas white

        // Output to screen
        fragColor = vec4(col,1.0);
    }

Since our shader is run in parallel across all pixels, we have to rely on if statements to draw pixels in different colors depending on their location on the screen. Depending on your graphics card and the compiler being used for your shader code, it might be more performant to use built-in functions such as step.

Let’s look at the same example but use the step function instead:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 uv = fragCoord/iResolution.xy; // <0,1>

        vec3 col = vec3(0); // start with black

        col = vec3(step(0.5, uv.x)); // make the right half of the canvas white

        // Output to screen
        fragColor = vec4(col,1.0);
    }

The left half of the canvas will be black and the right half of the canvas will be white.

The step function accepts two inputs: the edge of the step function, and a value to compare against that edge. If the second argument is greater than or equal to the first, step returns a value of one. Otherwise, it returns a value of zero.
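To make the behavior concrete, here is a hand-written stand-in for the float version of step. The name myStep is hypothetical and the function exists only for illustration. In practice you would call the built-in directly.

```glsl
// Hypothetical stand-in mirroring what the built-in step(edge, x) computes for floats
float myStep(float edge, float x)
{
    return x < edge ? 0.0 : 1.0;
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec2 uv = fragCoord/iResolution.xy;

    // Expected to match vec3(step(0.5, uv.x)): black on the left, white on the right
    vec3 col = vec3(myStep(0.5, uv.x));

    fragColor = vec4(col, 1.0);
}
```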

You can perform the step function across each component in a vector as well:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 uv = fragCoord/iResolution.xy; // <0,1>

        vec3 col = vec3(0); // start with black

        col = vec3(step(0.5, uv), 0); // perform step function across the x-component and y-component of uv

        // Output to screen
        fragColor = vec4(col,1.0);
    }

Since the step function operates on both the X component and Y component of the canvas, you should see the canvas get split into four colors.

How to Draw Circles

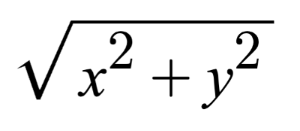

The equation of a circle is defined by the following:

x^2 + y^2 = r^2

x = x-coordinate on graph

y = y-coordinate on graph

r = radius of circleWe can re-arrange the variables to make the equation equal to zero:

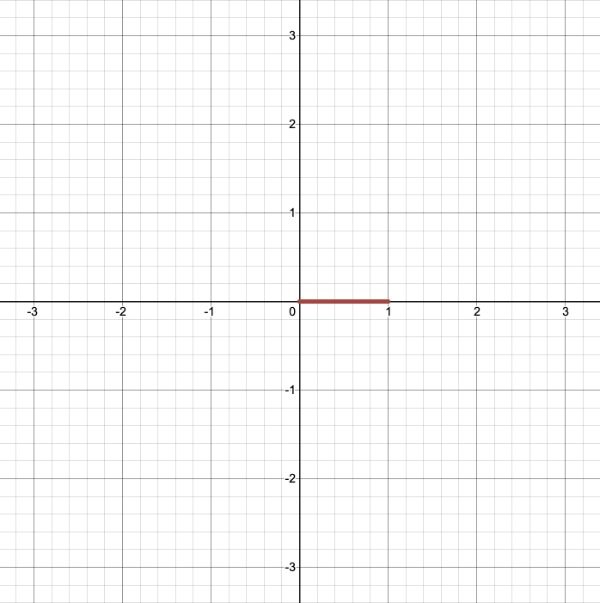

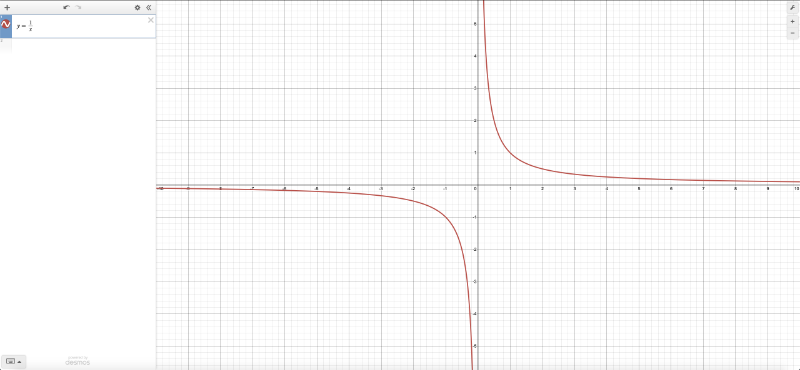

x^2 + y^2 - r^2 = 0To visualize this on a graph, you can use the Desmos calculator to graph the following:

x^2 + y^2 - 4 = 0If you copy the above snippet and paste it into the Desmos calculator, then you should see a graph of a circle with a radius of two. The center of the circle is located at the coordinate, (0, 0).

In Shadertoy, we can use the left-hand side (LHS) of this equation to make a circle. Let’s create a function called sdfCircle that returns the color, white, for each pixel at an XY-coordinate such that the equation is greater than zero and the color, blue, otherwise.

The sdf part of the function refers to a concept called signed distance functions (SDF), aka signed distance fields. It’s more common to use SDFs when drawing in 3D, but I will use this term for 2D shapes as well.
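One step worth spelling out: the code in this tutorial computes length(vec2(x, y)) - r rather than x^2 + y^2 - r^2. For a non-negative radius the two expressions always have the same sign, so either can be used to decide whether a pixel is inside or outside the circle. The symbol d below matches the variable name used in the code:

$$ d = \sqrt{x^2 + y^2} - r, \qquad d > 0 \iff x^2 + y^2 - r^2 > 0 \quad (r \ge 0) $$

The length form has the added property that d is the actual distance from the point to the edge of the circle, negative inside and positive outside, which is where the word “signed” comes from.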

We will call our new function in the mainImage function to use it.

    vec3 sdfCircle(vec2 uv, float r) {
        float x = uv.x;
        float y = uv.y;

        float d = length(vec2(x, y)) - r;

        return d > 0. ? vec3(1.) : vec3(0., 0., 1.);
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 uv = fragCoord/iResolution.xy; // <0,1>

        vec3 col = sdfCircle(uv, .2); // Call this function on each pixel to check if the coordinate lies inside or outside of the circle

        // Output to screen
        fragColor = vec4(col,1.0);
    }

If you’re wondering why I use 0. instead of simply 0 without a decimal, it’s because adding a decimal point at the end of an integer makes it have a type of float instead of int. When you’re using functions that require numbers of type float, placing a decimal point at the end of an integer is the easiest way to satisfy the compiler.

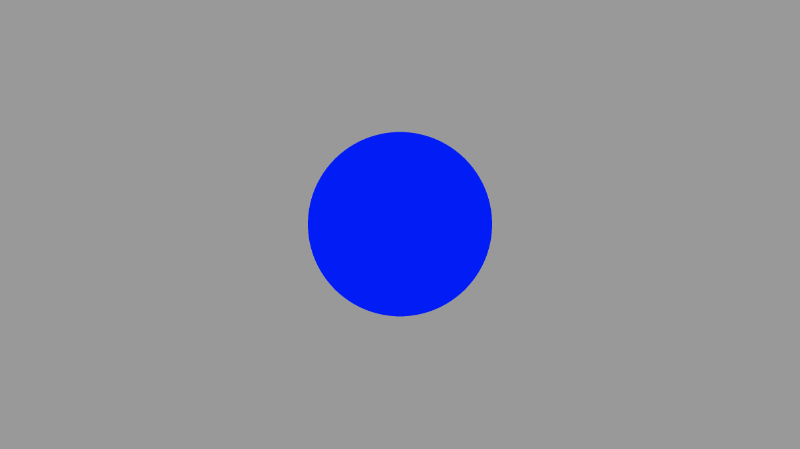

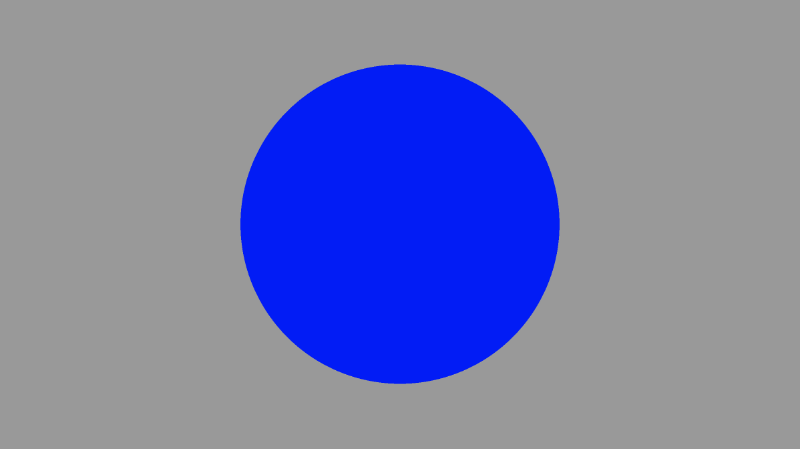

We’re using a radius of 0.2 because our coordinate system is set up to only have UV values that are between zero and one. When you run the code, you’ll notice that something appears wrong.

There seems to be a quarter of a blue dot in the bottom-left corner of the canvas. Why? Because our coordinate system is currently set up such that the origin is at the bottom-left corner. We need to shift every value by 0.5 to get the origin of the coordinate system at the center of the canvas.

Subtract 0.5 from the UV coordinates:

    vec2 uv = fragCoord/iResolution.xy; // <0,1>
    uv -= 0.5; // <-0.5, 0.5>

Now the range is between -0.5 and 0.5 on both the x-axis and y-axis, which means the origin of the coordinate system is in the center of the canvas. However, we face another issue…

Our circle appears a bit stretched, so it looks more like an ellipse. This is caused by the aspect ratio of the canvas. When the width and the height of the canvas don’t match, the circle appears stretched. We can fix this issue by multiplying the X component of the UV coordinates by the aspect ratio of the canvas.

    vec2 uv = fragCoord/iResolution.xy; // <0,1>
    uv -= 0.5; // <-0.5, 0.5>
    uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

This means the X component no longer goes between -0.5 and 0.5. It will go between values proportional to the aspect ratio of your canvas which will be determined by the width of your browser or webpage (if you’re using something like Chrome DevTools to alter the width).
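The three steps above (normalize, center, correct the aspect ratio) can also be folded into a single expression. The sketch below is an algebraically equivalent alternative, not the form this tutorial uses: it centers the pixel coordinate first and then divides both axes by the canvas height.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    // Center the origin, then divide both axes by the canvas height so that
    // one unit covers the same number of pixels horizontally and vertically
    vec2 uv = (fragCoord - 0.5 * iResolution.xy) / iResolution.y;

    // Blue inside a circle of radius 0.2, white outside
    vec3 col = length(uv) - 0.2 > 0. ? vec3(1.) : vec3(0., 0., 1.);

    fragColor = vec4(col, 1.0);
}
```

With this form the Y component still runs from -0.5 to 0.5, and the X component runs from minus half the aspect ratio to plus half the aspect ratio.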

Your finished code should look like the following:

1vec3 sdfCircle(vec2 uv, float r) {

2 float x = uv.x;

3 float y = uv.y;

4

5 float d = length(vec2(x, y)) - r;

6

7 return d > 0. ? vec3(1.) : vec3(0., 0., 1.);

8}

9

10void mainImage( out vec4 fragColor, in vec2 fragCoord )

11{

12 vec2 uv = fragCoord/iResolution.xy; // <0,1>

13 uv -= 0.5;

14 uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

15

16 vec3 col = sdfCircle(uv, .2);

17

18 // Output to screen

19 fragColor = vec4(col,1.0);

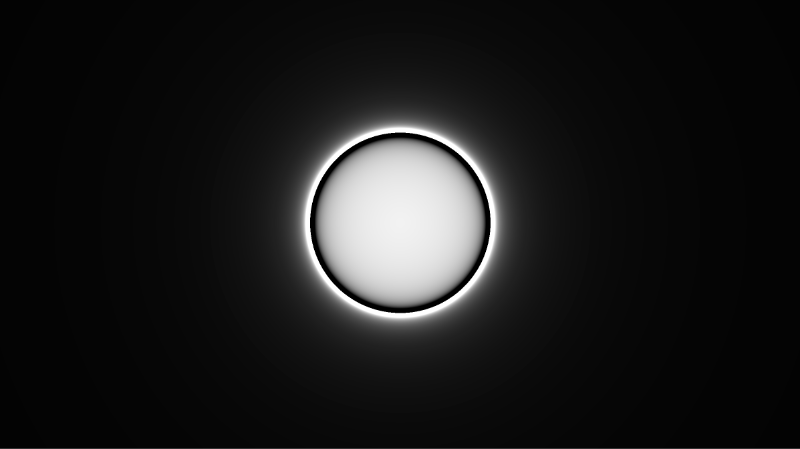

20}Once you run the code, you should see a perfectly proportional blue circle! 🎉

TIP

Please note that this is simply one way of coloring a circle. We will learn an alternative approach in Part 4 of this tutorial series. It will help us draw multiple shapes to the canvas.

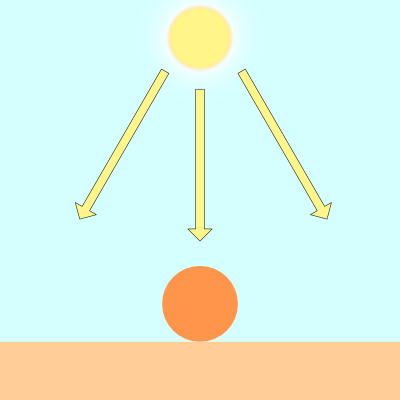

We can have some fun with this! We can use the global iTime variable to change colors over time. By using a cosine (cos) function, we can cycle through the same set of colors over and over. Since cosine functions oscillate between the values -1 and 1, we need to adjust this range to values between zero and one.

Remember, any color values in the final fragment color that are less than zero will automatically be clamped to zero. Likewise, any color values greater than one will be clamped to one. By adjusting the range, we get a wider range of colors.
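The range adjustment is a scale by 0.5 followed by a shift of 0.5. Writing t for whatever is passed to the cosine:

$$ -1 \le \cos(t) \le 1 \;\Longrightarrow\; -0.5 \le 0.5\cos(t) \le 0.5 \;\Longrightarrow\; 0 \le 0.5 + 0.5\cos(t) \le 1 $$

Without this remapping, the negative half of every cosine cycle would be clamped to zero.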

    vec3 sdfCircle(vec2 uv, float r) {
        float x = uv.x;
        float y = uv.y;

        float d = length(vec2(x, y)) - r;

        return d > 0. ? vec3(0.) : 0.5 + 0.5 * cos(iTime + uv.xyx + vec3(0,2,4));
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 uv = fragCoord/iResolution.xy; // <0,1>
        uv -= 0.5;
        uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

        vec3 col = sdfCircle(uv, .2);

        // Output to screen
        fragColor = vec4(col,1.0);
    }

Once you run the code, you should see the circle change between various colors.

You might be confused by the syntax in uv.xyx. This is called Swizzling. We can create new vectors using components of a variable. Let’s look at an example.

    vec3 col = vec3(0.2, 0.4, 0.6);
    vec3 col2 = col.xyx;
    vec3 col3 = vec3(0.2, 0.4, 0.2);

In the code snippet above, col2 and col3 are identical.
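A few more swizzles, as a sketch of what the syntax allows. The variable names a through d are illustrative only.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec3 col = vec3(0.2, 0.4, 0.6);

    vec2 a = col.xy;   // (0.2, 0.4): take a subset of the components
    vec3 b = col.zyx;  // (0.6, 0.4, 0.2): reorder them
    vec3 c = col.bgr;  // same as col.zyx, written with the color letters
    vec4 d = col.xxyy; // (0.2, 0.2, 0.4, 0.4): components may repeat

    fragColor = vec4(b, 1.0);
}
```

The letters in a single swizzle must all come from the same set, so col.xg would not compile.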

Moving the Circle

To move the circle, we need to apply an offset to the XY coordinates inside the equation for a circle. Therefore, our equation will look like the following:

    (x - offsetX)^2 + (y - offsetY)^2 - r^2 = 0

    x = x-coordinate on graph
    y = y-coordinate on graph
    r = radius of circle
    offsetX = how much to move the center of the circle in the x-axis
    offsetY = how much to move the center of the circle in the y-axis

You can experiment in the Desmos calculator again by copying and pasting the following code:

    (x - 2)^2 + (y - 2)^2 - 4 = 0

Inside Shadertoy, we can adjust our sdfCircle function to allow offsets and then move the center of the circle by 0.2.

    vec3 sdfCircle(vec2 uv, float r, vec2 offset) {
        float x = uv.x - offset.x;
        float y = uv.y - offset.y;

        float d = length(vec2(x, y)) - r;

        return d > 0. ? vec3(1.) : vec3(0., 0., 1.);
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 uv = fragCoord/iResolution.xy; // <0,1>
        uv -= 0.5;
        uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

        vec2 offset = vec2(0.2, 0.2); // move the circle 0.2 units to the right and 0.2 units up

        vec3 col = sdfCircle(uv, .2, offset);

        // Output to screen
        fragColor = vec4(col,1.0);
    }

You can again use the global iTime variable in certain places to give life to your canvas and animate your circle.

    vec3 sdfCircle(vec2 uv, float r, vec2 offset) {
        float x = uv.x - offset.x;
        float y = uv.y - offset.y;

        float d = length(vec2(x, y)) - r;

        return d > 0. ? vec3(1.) : vec3(0., 0., 1.);
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 uv = fragCoord/iResolution.xy; // <0,1>
        uv -= 0.5;
        uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

        vec2 offset = vec2(sin(iTime*2.)*0.2, cos(iTime*2.)*0.2); // move the circle clockwise

        vec3 col = sdfCircle(uv, .2, offset);

        // Output to screen
        fragColor = vec4(col,1.0);
    }

The above code will move the circle along a circular path in the clockwise direction as if it’s rotating about the origin. By multiplying iTime by a value, you can speed up the animation. By multiplying the output of the sine or cosine function by a value, you can control how far the circle moves from the center of the canvas. You’ll use sine and cosine functions a lot with iTime because they create oscillation.
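To experiment with those two multipliers, it can help to give them names. In the sketch below, speed and pathRadius are hypothetical names introduced for illustration, and the circle is drawn smaller so that the wider path stays visible on the canvas.

```glsl
vec3 sdfCircle(vec2 uv, float r, vec2 offset) {
    float d = length(uv - offset) - r;
    return d > 0. ? vec3(1.) : vec3(0., 0., 1.);
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec2 uv = fragCoord/iResolution.xy; // <0,1>
    uv -= 0.5;
    uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

    float speed = 4.0;      // hypothetical: larger values orbit faster
    float pathRadius = 0.3; // hypothetical: larger values orbit farther from the center

    vec2 offset = pathRadius * vec2(sin(iTime * speed), cos(iTime * speed));

    vec3 col = sdfCircle(uv, .1, offset);

    fragColor = vec4(col, 1.0);
}
```

Swapping sin and cos in the offset would be expected to reverse the direction of travel.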

Conclusion

In this lesson, we learned how to fix the coordinate system of the canvas, draw a circle, and animate the circle along a circular path. Circles, circles, circles! 🔵

In the next lesson, I’ll show you how to draw a square to the screen. Then, we’ll learn how to rotate it!

Tutorial Part 3 - Squares and Rotation

Reposted from: https://inspirnathan.com/posts/49-shadertoy-tutorial-part-3

Greetings, friends! In the previous article, we learned how to draw circles and animate them. In this tutorial, we will learn how to draw squares and rotate them using a rotation matrix.

How to Draw Squares

Drawing a square is very similar to drawing a circle except we will use a different equation. In fact, you can draw practically any 2D shape you want if you have an equation for it!

The equation of a square is defined by the following:

    max(abs(x), abs(y)) = r

    x = x-coordinate on graph
    y = y-coordinate on graph
    r = radius of square

We can re-arrange the variables to make the equation equal to zero:

    max(abs(x), abs(y)) - r = 0

To visualize this on a graph, you can use the Desmos calculator to graph the following:

    max(abs(x), abs(y)) - 2 = 0

If you copy the above snippet and paste it into the Desmos calculator, then you should see a graph of a square with a radius of two. The center of the square is located at the origin, (0, 0).

You can also include an offset:

max(abs(x - offsetX), abs(y - offsetY)) - r = 0

offsetX = how much to move the center of the square in the x-axis

offsetY = how much to move the center of the square in the y-axisThe steps for drawing a square using a pixel shader is very similar to the previous tutorial where we created a circle. Instead, we’ll be creating a function specifically for a square.

1vec3 sdfSquare(vec2 uv, float size, vec2 offset) {

2 float x = uv.x - offset.x;

3 float y = uv.y - offset.y;

4 float d = max(abs(x), abs(y)) - size;

5

6 return d > 0. ? vec3(1.) : vec3(1., 0., 0.);

7}

8

9

10void mainImage( out vec4 fragColor, in vec2 fragCoord )

11{

12 vec2 uv = fragCoord/iResolution.xy; // <0, 1>

13 uv -= 0.5; // <-0.5,0.5>

14 uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

15

16 vec2 offset = vec2(0.0, 0.0);

17

18 vec3 col = sdfSquare(uv, 0.2, offset);

19

20 // Output to screen

21 fragColor = vec4(col,1.0);

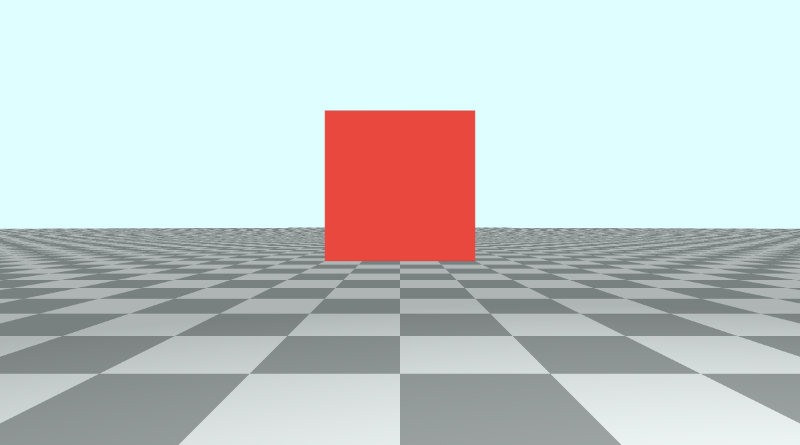

22}Yay! Now we have a red square! 🟥

Rotating shapes

You can rotate shapes by using a rotation matrix given by the following notation:

$$ R=\begin{bmatrix}\cos\theta&-\sin\theta\\ \sin\theta&\cos\theta\end{bmatrix} $$

Matrices can help us work with multiple linear equations and linear transformations. In fact, a rotation matrix is a type of transformation matrix. We can use matrices to perform other transformations such as shearing, translation, or reflection.

TIP

If you want to play around with matrix arithmetic, you can use either the Desmos Matrix Calculator or WolframAlpha. If you need a refresher on matrices, you can watch this amazing video by Derek Banas on YouTube.

We can use a graph I created on Desmos to help visualize rotations. I have created a set of parametric equations that use the rotation matrix in its linear equation form.

The linear equation form is obtained by multiplying the rotation matrix by the vector [x,y] as calculated by WolframAlpha. The result is an equation for the transformed x-coordinate and transformed y-coordinate after the rotation.
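For reference, here is that multiplication written out. It is the standard product of the rotation matrix R with a column vector; the primed names x' and y' are just labels chosen here for the rotated coordinates.

$$ \begin{bmatrix}x'\\ y'\end{bmatrix}=\begin{bmatrix}\cos\theta&-\sin\theta\\ \sin\theta&\cos\theta\end{bmatrix}\begin{bmatrix}x\\ y\end{bmatrix}=\begin{bmatrix}x\cos\theta-y\sin\theta\\ x\sin\theta+y\cos\theta\end{bmatrix} $$

In other words, x' = x cos θ - y sin θ and y' = x sin θ + y cos θ.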

In Shadertoy, we only care about the rotation matrix, not the linear equation form. I only discuss the linear equation form for the purpose of showing rotations in Desmos.

We can create a rotate function in our shader code that accepts UV coordinates and an angle by which to rotate the square. It will return the rotation matrix multiplied by the UV coordinates. Then, we’ll call the rotate function inside the sdfSquare function by passing in our XY coordinates, shifted by an offset (if it exists). We will use iTime as the angle, so that the square animates.

    vec2 rotate(vec2 uv, float th) {
      return mat2(cos(th), sin(th), -sin(th), cos(th)) * uv;
    }

    vec3 sdfSquare(vec2 uv, float size, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;
      vec2 rotated = rotate(vec2(x,y), iTime);
      float d = max(abs(rotated.x), abs(rotated.y)) - size;

      return d > 0. ? vec3(1.) : vec3(1., 0., 0.);
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
      vec2 uv = fragCoord/iResolution.xy; // <0, 1>
      uv -= 0.5; // <-0.5,0.5>
      uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

      vec2 offset = vec2(0.0, 0.0);

      vec3 col = sdfSquare(uv, 0.2, offset);

      // Output to screen
      fragColor = vec4(col,1.0);
    }

Notice how we defined the matrix in Shadertoy. Let’s inspect the rotate function more closely.

    vec2 rotate(vec2 uv, float th) {
      return mat2(cos(th), sin(th), -sin(th), cos(th)) * uv;
    }

According to this wiki on GLSL, we define a matrix by comma-separated values, but we go through the matrix column-first. Since this is a matrix of type mat2, it is a 2x2 matrix. The first two values represent the first column, and the last two values represent the second column. In tools such as WolframAlpha, you insert values row-first instead and use square brackets to separate each row. Keep this in mind as you’re experimenting with matrices.

Our rotate function returns a value that is of type vec2 because a 2x2 matrix (mat2) multiplied by a vec2 vector returns another vec2 vector.

When we run the code, we should see the square rotate in the clockwise direction.
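To make the column-first ordering easier to see, here is a small sketch that builds the same matrix from two explicit column vectors. The extra angle parameter on sdfSquare is a hypothetical addition for this example, so that two squares can spin in opposite directions side by side.

```glsl
// The same rotation matrix, built from two explicit column vectors.
vec2 rotate(vec2 uv, float th) {
  float c = cos(th);
  float s = sin(th);
  mat2 m = mat2(vec2(c, s),    // first column
                vec2(-s, c));  // second column
  return m * uv;
}

// angle is a hypothetical extra parameter; the tutorial version uses iTime directly.
vec3 sdfSquare(vec2 uv, float size, vec2 offset, float angle) {
  vec2 rotated = rotate(uv - offset, angle);
  float d = max(abs(rotated.x), abs(rotated.y)) - size;
  return d > 0. ? vec3(1.) : vec3(1., 0., 0.);
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>
  uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

  // The left square uses +iTime and the right square uses -iTime.
  vec3 left = sdfSquare(uv, 0.1, vec2(-0.3, 0.), iTime);
  vec3 right = sdfSquare(uv, 0.1, vec2(0.3, 0.), -iTime);

  // Each call returns white outside its square, so a component-wise min keeps the red squares.
  vec3 col = min(left, right);

  // Output to screen
  fragColor = vec4(col, 1.0);
}
```

The left square should spin in the same direction as the single square above, while the right one, with the negated angle, should spin the opposite way.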

Conclusion

In this lesson, we learned how to draw a square and rotate it using a transformation matrix. Using the knowledge you have gained from this tutorial and the previous one, you can draw any 2D shape you want using an equation or SDF for that shape!

In the next article, I’ll discuss how to draw multiple shapes on the canvas while being able to change the background color as well.

Resources

- 2D Rotation Example

- Vector and Matrix Operations in GLSL

- Vector and Matrix Transformations

- Matrix Arithmetic

- 2D SDFs

Tutorial Part 4 - Multiple 2D Shapes and Mixing

转自:https://inspirnathan.com/posts/50-shadertoy-tutorial-part-4

UPDATE

This article has been revamped as of May 3, 2021. I replaced most of the code snippets with a cleaner solution for drawing 2D shapes.

Greetings, friends! In the past couple tutorials, we’ve learned how to draw 2D shapes to the canvas using Shadertoy. In this article, I’d like to discuss a better approach to drawing 2D shapes, so we can easily add multiple shapes to the canvas. We’ll also learn how to change the background color independent from the shape colors.

The Mix Function

Before we continue, let’s take a look at the mix function. This function will be especially useful to us as we render multiple 2D shapes to the scene.

The mix function linearly interpolates between two values. In other shader languages such as HLSL, this function is known as lerp instead.

Linear interpolation for the function, mix(x, y, a), is based on the following formula:

x * (1 - a) + y * a

x = first value

y = second value

a = value that linearly interpolates between x and y

Think of the third parameter, a, as a slider that lets you choose values between x and y.
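As a quick worked example of the formula, mix(0., 10., 0.25) evaluates to 0 * (1 - 0.25) + 10 * 0.25 = 2.5. The sketch below compares the built-in mix with a hand-written version of the same formula; myMix is a made-up name for illustration.

```glsl
// Hand-written version of the formula above (myMix is a hypothetical name).
float myMix(float x, float y, float a) {
  return x * (1. - a) + y * a;
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>

  float builtIn = mix(0.2, 0.8, uv.x);
  float manual = myMix(0.2, 0.8, uv.x);

  // Top half of the canvas: built-in mix. Bottom half: manual formula.
  float value = uv.y > 0.5 ? builtIn : manual;

  // Output to screen
  fragColor = vec4(vec3(value), 1.0);
}
```

If the two halves are computed the same way, you shouldn’t be able to see a seam between them.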

You will see the mix function used heavily in shaders. It’s a great way to create color gradients. Let’s look at an example:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
      vec2 uv = fragCoord/iResolution.xy; // <0, 1>

      float interpolatedValue = mix(0., 1., uv.x);
      vec3 col = vec3(interpolatedValue);

      // Output to screen
      fragColor = vec4(col,1.0);
    }

In the above code, we are using the mix function to get an interpolated value per pixel on the screen across the x-axis. By using the same value across the red, green, and blue channels, we get a gradient that goes from black to white, with shades of gray in between.

We can also use the mix function along the y-axis:

    float interpolatedValue = mix(0., 1., uv.y);

Using this knowledge, we can create a colored gradient in our pixel shader. Let’s define a function specifically for setting the background color of the canvas.

    vec3 getBackgroundColor(vec2 uv) {
      uv += 0.5; // remap uv from <-0.5,0.5> to <0,1>
      vec3 gradientStartColor = vec3(1., 0., 1.);
      vec3 gradientEndColor = vec3(0., 1., 1.);
      return mix(gradientStartColor, gradientEndColor, uv.y); // gradient goes from bottom to top
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
      vec2 uv = fragCoord/iResolution.xy; // <0, 1>
      uv -= 0.5; // <-0.5,0.5>
      uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

      vec3 col = getBackgroundColor(uv);

      // Output to screen
      fragColor = vec4(col,1.0);
    }

This will produce a cool gradient that goes between shades of purple and cyan.

When using the mix function on vectors, it will use the third parameter to interpolate each vector on a component basis. It will run through the interpolator function for the red component (or x-component) of the gradientStartColor vector and the red component of the gradientEndColor vector. The same tactic will be applied to the green (y-component) and blue (z-component) channels of each vector.

We added 0.5 to the value of uv because in most situations, we will be working with values of uv that range between a negative number and positive number. If we pass a negative value into the final fragColor, then it’ll be clamped to zero. We shift the range away from negative values for the purpose of displaying color in the full range.

An Alternative Way to Draw 2D Shapes

In the previous tutorials, we learned how to use 2D SDFs to create 2D shapes such as circles and squares. However, the sdfCircle and sdfSquare functions were returning a color in the form of a vec3 vector.

Typically, SDFs return a float and not a vec3 value. Remember, “SDF” stands for “signed distance field,” so we expect such a function to return a distance of type float. In 3D SDFs, this is usually true, but in 2D SDFs, I find it’s more useful to end up with either a one or a zero depending on whether the pixel is inside or outside the shape, as we’ll see later.

The distance is relative to some point, typically the center of the shape. If a circle’s center is at the origin, (0, 0), then any point on the edge of the circle lies at a distance from the center equal to the radius of the circle, hence the equation:

x^2 + y^2 = r^2

Or, when rearranged,

x^2 + y^2 - r^2 = 0

where x^2 + y^2 - r^2 = d

The value d has the same sign as the true signed distance, sqrt(x^2 + y^2) - r, which is what our shader code computes with length(vec2(x, y)) - r. If the distance is greater than zero, then we know that we are outside the circle. If the distance is less than zero, then we are inside the circle. If the distance is equal to zero exactly, then we’re on the edge of the circle. This is where the “signed” part of the “signed distance field” comes into play. The distance can be negative or positive depending on whether the pixel coordinate is inside or outside the shape.
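To see the three cases on screen, here is a small sketch that colors pixels by the sign of the distance. The edgeWidth tolerance is a hypothetical value picked for illustration, since very few pixels land on a distance of exactly zero.

```glsl
float sdfCircle(vec2 uv, float r) {
  return length(uv) - r; // negative inside, zero on the edge, positive outside
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>
  uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

  float d = sdfCircle(uv, 0.2);
  float edgeWidth = 0.005; // hypothetical tolerance for "on the edge"

  vec3 col = vec3(1.);                    // outside (d > 0): white
  if (d < 0.) col = vec3(0., 0., 1.);     // inside (d < 0): blue
  if (abs(d) < edgeWidth) col = vec3(0.); // close to the edge (d near 0): black

  // Output to screen
  fragColor = vec4(col, 1.0);
}
```

This should draw a blue disc with a thin black outline on a white background.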

In Part 2 of this tutorial series, we drew a blue circle using the following code:

    vec3 sdfCircle(vec2 uv, float r) {
      float x = uv.x;
      float y = uv.y;

      float d = length(vec2(x, y)) - r;

      return d > 0. ? vec3(1.) : vec3(0., 0., 1.);
      // draw background color if outside the shape
      // draw circle color if inside the shape
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
      vec2 uv = fragCoord/iResolution.xy; // <0,1>
      uv -= 0.5;
      uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

      vec3 col = sdfCircle(uv, .2);

      // Output to screen
      fragColor = vec4(col,1.0);
    }

The problem with this approach is that we’re forced to draw a blue circle on a white background.

We need to make the code a bit more abstract, so we can draw the background and shape colors independent of each other. This will allow us to draw multiple shapes to the scene and select any color we want for each shape and the background.

Let’s look at an alternative way of drawing the blue circle:

    float sdfCircle(vec2 uv, float r, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;

      return length(vec2(x, y)) - r;
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = vec3(1);
      float circle = sdfCircle(uv, 0.1, vec2(0, 0));

      col = mix(vec3(0, 0, 1), col, step(0., circle));

      return col;
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
      vec2 uv = fragCoord/iResolution.xy; // <0, 1>
      uv -= 0.5; // <-0.5,0.5>
      uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

      vec3 col = drawScene(uv);

      // Output to screen
      fragColor = vec4(col,1.0);
    }

In the code above, we are now abstracting out a few things. We have a drawScene function that will be responsible for rendering the scene, and the sdfCircle function now returns a float that represents the “signed distance” between a pixel on the screen and the nearest point on the circle.

We learned about the step function in Part 2. The call step(edge, x) returns zero if x is less than edge and one otherwise, so the result depends on the value of the second parameter. In fact, the following are equivalent:

    float result = step(0., circle);
    float result = circle < 0. ? 0. : 1.; // equivalent to the line above

If the “signed distance” value is less than zero, then the point is inside the circle, and step returns zero. If the value is greater than or equal to zero, then the point is outside or on the edge of the circle, and step returns one.

Inside the drawScene function, we are using the mix function to blend the white background color with the color, blue. The value of circle will determine if the pixel is white (the background) or blue (the circle). In this sense, we can use the mix function as a “toggle” that will switch between the shape color or background color depending on the value of the third parameter.

Using an SDF in this way basically lets us draw the shape only if the pixel is at a coordinate that lies within the shape. Otherwise, it should draw the color that was there before.
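One way to package this “toggle” is a tiny helper that paints a shape color over whatever color is already there. This is only a sketch: drawShape is a hypothetical name and not part of Shadertoy or the original code.

```glsl
float sdfCircle(vec2 uv, float r, vec2 offset) {
  return length(uv - offset) - r;
}

// Hypothetical helper: returns shapeColor where d is negative (inside the shape)
// and leaves base untouched everywhere else.
vec3 drawShape(vec3 base, vec3 shapeColor, float d) {
  return mix(shapeColor, base, step(0., d));
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>
  uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

  vec3 col = vec3(1); // white background

  // Shapes drawn later end up on top of shapes drawn earlier.
  col = drawShape(col, vec3(0, 0, 1), sdfCircle(uv, 0.1, vec2(-0.05, 0)));
  col = drawShape(col, vec3(0, 1, 0), sdfCircle(uv, 0.1, vec2(0.05, 0)));

  // Output to screen
  fragColor = vec4(col, 1.0);
}
```

Because the green circle is drawn second, it should cover the blue circle where the two overlap.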

Let’s add a square that is offset from the center a bit.

    float sdfCircle(vec2 uv, float r, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;

      return length(vec2(x, y)) - r;
    }

    float sdfSquare(vec2 uv, float size, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;

      return max(abs(x), abs(y)) - size;
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = vec3(1);
      float circle = sdfCircle(uv, 0.1, vec2(0, 0));
      float square = sdfSquare(uv, 0.07, vec2(0.1, 0));

      col = mix(vec3(0, 0, 1), col, step(0., circle));
      col = mix(vec3(1, 0, 0), col, step(0., square));

      return col;
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
      vec2 uv = fragCoord/iResolution.xy; // <0, 1>
      uv -= 0.5; // <-0.5,0.5>
      uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

      vec3 col = drawScene(uv);

      // Output to screen
      fragColor = vec4(col,1.0);
    }

Using the mix function with this approach lets us easily render multiple 2D shapes to the scene!

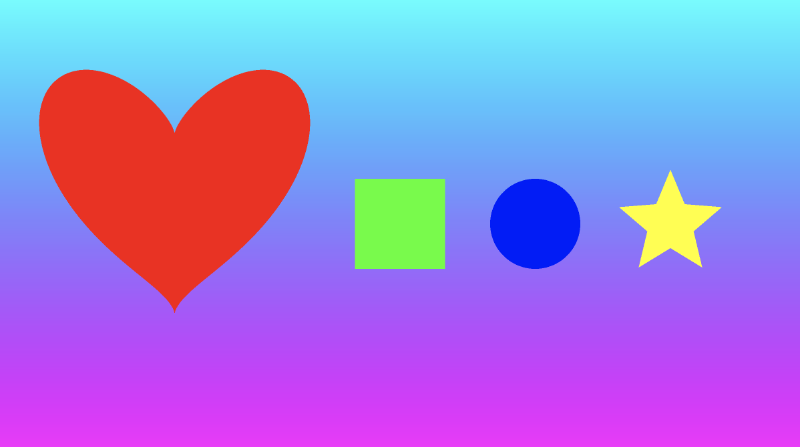

Custom Background and Multiple 2D Shapes

With the knowledge we’ve learned, we can easily customize our background while leaving the color of our shapes intact. Let’s add a function that returns a gradient color for the background and use it at the top of the drawScene function.

    vec3 getBackgroundColor(vec2 uv) {
      uv += 0.5; // remap uv from <-0.5,0.5> to <0,1>
      vec3 gradientStartColor = vec3(1., 0., 1.);
      vec3 gradientEndColor = vec3(0., 1., 1.);
      return mix(gradientStartColor, gradientEndColor, uv.y); // gradient goes from bottom to top
    }

    float sdfCircle(vec2 uv, float r, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;

      return length(vec2(x, y)) - r;
    }

    float sdfSquare(vec2 uv, float size, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;
      return max(abs(x), abs(y)) - size;
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);
      float circle = sdfCircle(uv, 0.1, vec2(0, 0));
      float square = sdfSquare(uv, 0.07, vec2(0.1, 0));

      col = mix(vec3(0, 0, 1), col, step(0., circle));
      col = mix(vec3(1, 0, 0), col, step(0., square));

      return col;
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
      vec2 uv = fragCoord/iResolution.xy; // <0, 1>
      uv -= 0.5; // <-0.5,0.5>
      uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

      vec3 col = drawScene(uv);

      // Output to screen
      fragColor = vec4(col,1.0);
    }

Simply stunning! 🤩

Would this piece of abstract digital art make a lot of money as a non-fungible token? 🤔 Probably not, but one can hope 😅.

Conclusion

In this lesson, we created a beautiful piece of digital art. We learned how to use the mix function to create a color gradient and how to use it to render shapes on top of each other or on top of a background layer. In the next lesson, I’ll talk about other 2D shapes we can draw such as hearts and stars.


Tutorial Part 5 - 2D SDF Operations and More 2D Shapes

转自:https://inspirnathan.com/posts/51-shadertoy-tutorial-part-5

UPDATE

This article has been heavily revamped as of May 3, 2021. I added a new section on 2D SDF operations, replaced all the code snippets with a cleaner solution for drawing 2D shapes, and added a section on Quadratic Bézier curves. Enjoy!

Greetings, friends! In this tutorial, I’ll discuss how to use 2D SDF operations to create more complex shapes from primitive shapes, and I’ll discuss how to draw more primitive 2D shapes, including hearts and stars. I’ll help you utilize this list of 2D SDFs that was popularized by the talented Inigo Quilez, one of the co-creators of Shadertoy. Let’s begin!

Combination 2D SDF Operations

In the previous tutorials, we’ve seen how to draw primitive 2D shapes such as circles and squares, but we can use 2D SDF operations to create more complex shapes by combining primitive shapes together.

Let’s start with some simple boilerplate code for 2D shapes:

    vec3 getBackgroundColor(vec2 uv) {
      uv = uv * 0.5 + 0.5; // remap uv from <-0.5,0.5> to <0.25,0.75>
      vec3 gradientStartColor = vec3(1., 0., 1.);
      vec3 gradientEndColor = vec3(0., 1., 1.);
      return mix(gradientStartColor, gradientEndColor, uv.y); // gradient goes from bottom to top
    }

    float sdCircle(vec2 uv, float r, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;

      return length(vec2(x, y)) - r;
    }

    float sdSquare(vec2 uv, float size, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;

      return max(abs(x), abs(y)) - size;
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);
      float d1 = sdCircle(uv, 0.1, vec2(0., 0.));
      float d2 = sdSquare(uv, 0.1, vec2(0.1, 0));

      float res; // result
      res = d1;

      res = step(0., res); // Same as res < 0. ? 0. : 1.;

      col = mix(vec3(1,0,0), col, res);
      return col;
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
      vec2 uv = fragCoord/iResolution.xy; // <0, 1>
      uv -= 0.5; // <-0.5,0.5>
      uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

      vec3 col = drawScene(uv);

      fragColor = vec4(col,1.0); // Output to screen
    }

Please note how I’m now using sdCircle for the function name instead of sdfCircle (which was used in previous tutorials). Inigo Quilez’s website commonly uses sd in front of the shape name, but I was using sdf to help make it clear that these are signed distance fields (SDF).

When you run the code, you should see a red circle with a gradient background color, similar to what we learned in the previous tutorial.

Pay attention to where we use the mix function:

    col = mix(vec3(1,0,0), col, res);

This line says to take the result and either pick the color red or the value of col (currently the background color) depending on the value of res (the result).

Now, let’s discuss the various SDF operations that can be performed. We will look at the interaction between a circle and a square.

Union: combine two shapes together.

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);
      float d1 = sdCircle(uv, 0.1, vec2(0., 0.));
      float d2 = sdSquare(uv, 0.1, vec2(0.1, 0));

      float res; // result
      res = min(d1, d2); // union

      res = step(0., res); // Same as res < 0. ? 0. : 1.;

      col = mix(vec3(1,0,0), col, res);
      return col;
    }

Intersection: take only the part where the two shapes intersect.

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);
      float d1 = sdCircle(uv, 0.1, vec2(0., 0.));
      float d2 = sdSquare(uv, 0.1, vec2(0.1, 0));

      float res; // result
      res = max(d1, d2); // intersection

      res = step(0., res); // Same as res < 0. ? 0. : 1.;

      col = mix(vec3(1,0,0), col, res);
      return col;
    }

Subtraction: subtract d1 from d2.

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);
      float d1 = sdCircle(uv, 0.1, vec2(0., 0.));
      float d2 = sdSquare(uv, 0.1, vec2(0.1, 0));

      float res; // result
      res = max(-d1, d2); // subtraction - subtract d1 from d2

      res = step(0., res); // Same as res < 0. ? 0. : 1.;

      col = mix(vec3(1,0,0), col, res);
      return col;
    }

Subtraction: subtract d2 from d1.

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);
      float d1 = sdCircle(uv, 0.1, vec2(0., 0.));
      float d2 = sdSquare(uv, 0.1, vec2(0.1, 0));

      float res; // result
      res = max(d1, -d2); // subtraction - subtract d2 from d1

      res = step(0., res); // Same as res < 0. ? 0. : 1.;

      col = mix(vec3(1,0,0), col, res);
      return col;
    }

XOR: an exclusive “OR” operation will take the parts of the two shapes that do not intersect with each other.

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);
      float d1 = sdCircle(uv, 0.1, vec2(0., 0.));
      float d2 = sdSquare(uv, 0.1, vec2(0.1, 0));

      float res; // result
      res = max(min(d1, d2), -max(d1, d2)); // xor

      res = step(0., res); // Same as res < 0. ? 0. : 1.;

      col = mix(vec3(1,0,0), col, res);
      return col;
    }

We can also create “smooth” 2D SDF operations that smoothly blend the edges around where the shapes meet. You’ll find these operations to be more applicable when I discuss 3D shapes, but they work in 2D too!

Add the following functions to the top of your code:

    // smooth min
    float smin(float a, float b, float k) {
      float h = clamp(0.5+0.5*(b-a)/k, 0.0, 1.0);
      return mix(b, a, h) - k*h*(1.0-h);
    }

    // smooth max
    float smax(float a, float b, float k) {
      return -smin(-a, -b, k);
    }

Smooth union: combine two shapes together, but smoothly blend the edges where they meet.

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);
      float d1 = sdCircle(uv, 0.1, vec2(0., 0.));
      float d2 = sdSquare(uv, 0.1, vec2(0.1, 0));

      float res; // result
      res = smin(d1, d2, 0.05); // smooth union

      res = step(0., res); // Same as res < 0. ? 0. : 1.;

      col = mix(vec3(1,0,0), col, res);
      return col;
    }

Smooth intersection: take only the part where the two shapes intersect, but smoothly blend the edges where they meet.

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);
      float d1 = sdCircle(uv, 0.1, vec2(0., 0.));
      float d2 = sdSquare(uv, 0.1, vec2(0.1, 0));

      float res; // result
      res = smax(d1, d2, 0.05); // smooth intersection

      res = step(0., res); // Same as res < 0. ? 0. : 1.;

      col = mix(vec3(1,0,0), col, res);
      return col;
    }

You can find the finished code below. Uncomment the lines for any of the combination 2D SDF operations you want to see.

    // smooth min
    float smin(float a, float b, float k) {
      float h = clamp(0.5+0.5*(b-a)/k, 0.0, 1.0);
      return mix(b, a, h) - k*h*(1.0-h);
    }

    // smooth max
    float smax(float a, float b, float k) {
      return -smin(-a, -b, k);
    }

    vec3 getBackgroundColor(vec2 uv) {
      uv = uv * 0.5 + 0.5; // remap uv from <-0.5,0.5> to <0.25,0.75>
      vec3 gradientStartColor = vec3(1., 0., 1.);
      vec3 gradientEndColor = vec3(0., 1., 1.);
      return mix(gradientStartColor, gradientEndColor, uv.y); // gradient goes from bottom to top
    }

    float sdCircle(vec2 uv, float r, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;

      return length(vec2(x, y)) - r;
    }

    float sdSquare(vec2 uv, float size, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;

      return max(abs(x), abs(y)) - size;
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);
      float d1 = sdCircle(uv, 0.1, vec2(0., 0.));
      float d2 = sdSquare(uv, 0.1, vec2(0.1, 0));

      float res; // result
      res = d1;
      //res = d2;
      //res = min(d1, d2); // union
      //res = max(d1, d2); // intersection
      //res = max(-d1, d2); // subtraction - subtract d1 from d2
      //res = max(d1, -d2); // subtraction - subtract d2 from d1
      //res = max(min(d1, d2), -max(d1, d2)); // xor
      //res = smin(d1, d2, 0.05); // smooth union
      //res = smax(d1, d2, 0.05); // smooth intersection

      res = step(0., res); // Same as res < 0. ? 0. : 1.;

      col = mix(vec3(1,0,0), col, res);
      return col;
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
      vec2 uv = fragCoord/iResolution.xy; // <0, 1>
      uv -= 0.5; // <-0.5,0.5>
      uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

      vec3 col = drawScene(uv);

      fragColor = vec4(col,1.0); // Output to screen
    }

Positional 2D SDF Operations

Inigo Quilez’s 3D SDFs page describes a set of positional 3D SDF operations, but we can use these operations in 2D as well. I discuss 3D SDF operations later in Part 14. In this tutorial, I’ll go over positional 2D SDF operations that can help save us time and increase performance when drawing 2D shapes.

If you’re drawing a symmetrical scene, then it may be useful to use the opSymX operation. This operation will create a duplicate 2D shape along the x-axis using the SDF you provide. If we draw a circle at an offset of vec2(0.2, 0), then an equivalent circle will be drawn at vec2(-0.2, 0).

    float opSymX(vec2 p, float r)
    {
      p.x = abs(p.x);
      return sdCircle(p, r, vec2(0.2, 0));
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);

      float res; // result
      res = opSymX(uv, 0.1);

      res = step(0., res);
      col = mix(vec3(1,0,0), col, res);
      return col;
    }

We can also perform a similar operation along the y-axis. Using the opSymY operation, if we draw a circle at an offset of vec2(0, 0.2), then an equivalent circle will be drawn at vec2(0, -0.2).

    float opSymY(vec2 p, float r)
    {
      p.y = abs(p.y);
      return sdCircle(p, r, vec2(0, 0.2));
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);

      float res; // result
      res = opSymY(uv, 0.1);

      res = step(0., res);
      col = mix(vec3(1,0,0), col, res);
      return col;
    }

If you want to draw circles along two axes instead of just one, then you can use the opSymXY operation. This will create a duplicate along both the x-axis and y-axis, resulting in four circles. If we draw a circle with an offset of vec2(0.2, 0.2), then circles will be drawn at vec2(0.2, 0.2), vec2(0.2, -0.2), vec2(-0.2, -0.2), and vec2(-0.2, 0.2).

    float opSymXY(vec2 p, float r)
    {
      p = abs(p);
      return sdCircle(p, r, vec2(0.2));
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);

      float res; // result
      res = opSymXY(uv, 0.1);

      res = step(0., res);
      col = mix(vec3(1,0,0), col, res);
      return col;
    }

Sometimes, you may want to create an infinite number of 2D objects across one or more axes. You can use the opRep operation to repeat circles along the axes of your choice. The parameter, c, is a vector used to control the spacing between the 2D objects along each axis.

    float opRep(vec2 p, float r, vec2 c)
    {
      vec2 q = mod(p+0.5*c,c)-0.5*c;
      return sdCircle(q, r, vec2(0));
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);

      float res; // result
      res = opRep(uv, 0.05, vec2(0.2, 0.2));

      res = step(0., res);
      col = mix(vec3(1,0,0), col, res);
      return col;
    }

If you want to repeat the 2D objects only a certain number of times instead of an infinite amount, you can use the opRepLim operation. The parameter, c, is now a float value and still controls the spacing between each repeated 2D object. The parameter, l, is a vector that lets you control how many times the shape should be repeated along a given axis. For example, a value of vec2(2, 2) would draw two extra circles in each direction along the x-axis and y-axis, which gives a 5 x 5 grid of circles in total.

    float opRepLim(vec2 p, float r, float c, vec2 l)
    {
      vec2 q = p-c*clamp(round(p/c),-l,l);
      return sdCircle(q, r, vec2(0));
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);

      float res; // result
      res = opRepLim(uv, 0.05, 0.15, vec2(2, 2));

      res = step(0., res);
      col = mix(vec3(1,0,0), col, res);
      return col;
    }

You can also deform or distort an SDF by computing a displacement from the value of p, the uv coordinate, and adding it to the value returned from the SDF. Inside the opDisplace operation, you can use any mathematical operation you want to compute a displacement from p and then add that result to the original value you get back from an SDF.

    float opDisplace(vec2 p, float r)
    {
      float d1 = sdCircle(p, r, vec2(0));
      float s = 0.5; // scaling factor

      float d2 = sin(s * p.x * 1.8); // Some arbitrary values I played around with

      return d1 + d2;
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);

      float res; // result
      res = opDisplace(uv, 0.1); // Kinda looks like an egg

      res = step(0., res);
      col = mix(vec3(1,0,0), col, res);
      return col;
    }

You can find the finished code below. Uncomment the lines for any of the positional 2D SDF operations you want to see.

    vec3 getBackgroundColor(vec2 uv) {
      uv = uv * 0.5 + 0.5; // remap uv from <-0.5,0.5> to <0.25,0.75>
      vec3 gradientStartColor = vec3(1., 0., 1.);
      vec3 gradientEndColor = vec3(0., 1., 1.);
      return mix(gradientStartColor, gradientEndColor, uv.y); // gradient goes from bottom to top
    }

    float sdCircle(vec2 uv, float r, vec2 offset) {
      float x = uv.x - offset.x;
      float y = uv.y - offset.y;

      return length(vec2(x, y)) - r;
    }

    float opSymX(vec2 p, float r)
    {
      p.x = abs(p.x);
      return sdCircle(p, r, vec2(0.2, 0));
    }

    float opSymY(vec2 p, float r)
    {
      p.y = abs(p.y);
      return sdCircle(p, r, vec2(0, 0.2));
    }

    float opSymXY(vec2 p, float r)
    {
      p = abs(p);
      return sdCircle(p, r, vec2(0.2));
    }

    float opRep(vec2 p, float r, vec2 c)
    {
      vec2 q = mod(p+0.5*c,c)-0.5*c;
      return sdCircle(q, r, vec2(0));
    }

    float opRepLim(vec2 p, float r, float c, vec2 l)
    {
      vec2 q = p-c*clamp(round(p/c),-l,l);
      return sdCircle(q, r, vec2(0));
    }

    float opDisplace(vec2 p, float r)
    {
      float d1 = sdCircle(p, r, vec2(0));
      float s = 0.5; // scaling factor

      float d2 = sin(s * p.x * 1.8); // Some arbitrary values I played around with

      return d1 + d2;
    }

    vec3 drawScene(vec2 uv) {
      vec3 col = getBackgroundColor(uv);

      float res; // result
      res = opSymX(uv, 0.1);
      //res = opSymY(uv, 0.1);
      //res = opSymXY(uv, 0.1);
      //res = opRep(uv, 0.05, vec2(0.2, 0.2));
      //res = opRepLim(uv, 0.05, 0.15, vec2(2, 2));
      //res = opDisplace(uv, 0.1);

      res = step(0., res);
      col = mix(vec3(1,0,0), col, res);
      return col;
    }

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
      vec2 uv = fragCoord/iResolution.xy; // <0, 1>
      uv -= 0.5; // <-0.5,0.5>
      uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

      vec3 col = drawScene(uv);

      fragColor = vec4(col,1.0); // Output to screen
    }

Anti-aliasing

If you want to add any anti-aliasing, then you can use the smoothstep function to smooth out the edges of your shapes. The smoothstep(edge0, edge1, x) function accepts three parameters and performs a Hermite interpolation between zero and one when edge0 < x < edge1.

edge0: Specifies the value of the lower edge of the Hermite function.

edge1: Specifies the value of the upper edge of the Hermite function.

x: Specifies the source value for interpolation.

    t = clamp((x - edge0) / (edge1 - edge0), 0.0, 1.0);
    return t * t * (3.0 - 2.0 * t);

TIP

The docs say that if edge0 is greater than or equal to edge1, then the result of the smoothstep function is undefined. In practice, when edge0 is greater than edge1, the result is typically still determined by the Hermite interpolation shown above, which simply flips the curve. Since the specification leaves this case undefined, though, the behavior isn’t guaranteed on every device.

If you’re still confused, this page from The Book of Shaders may help you visualize the smoothstep function. Essentially, it behaves like the step function with a few extra steps (no pun intended) 😂.
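If it helps, here is a small sketch that implements the two-line formula by hand and shows it next to the built-in function. The name mySmoothstep is made up for illustration, and the edge values 0.25 and 0.75 are arbitrary.

```glsl
// Hand-written version of the Hermite formula (mySmoothstep is a hypothetical name).
float mySmoothstep(float edge0, float edge1, float x) {
  float t = clamp((x - edge0) / (edge1 - edge0), 0.0, 1.0);
  return t * t * (3.0 - 2.0 * t);
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>

  float builtIn = smoothstep(0.25, 0.75, uv.x);
  float manual = mySmoothstep(0.25, 0.75, uv.x);

  // Top half of the canvas: built-in smoothstep. Bottom half: manual formula.
  float value = uv.y > 0.5 ? builtIn : manual;

  // Output to screen
  fragColor = vec4(vec3(value), 1.0);
}
```

You should see black on the left quarter, white on the right quarter, and a smooth ramp in between, with no visible seam between the two halves.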

Let’s replace the step function with the smoothstep function to see how the result between a union of a circle and square behaves.

1vec3 getBackgroundColor(vec2 uv) {

2 uv = uv * 0.5 + 0.5; // remap uv from <-0.5,0.5> to <0.25,0.75>

3 vec3 gradientStartColor = vec3(1., 0., 1.);

4 vec3 gradientEndColor = vec3(0., 1., 1.);

5 return mix(gradientStartColor, gradientEndColor, uv.y); // gradient goes from bottom to top

6}

7

8float sdCircle(vec2 uv, float r, vec2 offset) {

9 float x = uv.x - offset.x;

10 float y = uv.y - offset.y;

11

12 return length(vec2(x, y)) - r;

13}

14

15float sdSquare(vec2 uv, float size, vec2 offset) {

16 float x = uv.x - offset.x;

17 float y = uv.y - offset.y;

18

19 return max(abs(x), abs(y)) - size;

20}

21

22vec3 drawScene(vec2 uv) {

23 vec3 col = getBackgroundColor(uv);

24 float d1 = sdCircle(uv, 0.1, vec2(0., 0.));

25 float d2 = sdSquare(uv, 0.1, vec2(0.1, 0));

26

27 float res; // result

28 res = min(d1, d2); // union

29

30 res = smoothstep(0., 0.02, res); // antialias entire result

31

32 col = mix(vec3(1,0,0), col, res);

33 return col;

34}

35

36void mainImage( out vec4 fragColor, in vec2 fragCoord )

37{

38 vec2 uv = fragCoord/iResolution.xy; // <0, 1>

39 uv -= 0.5; // <-0.5,0.5>

40 uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

41

42 vec3 col = drawScene(uv);

43

44 fragColor = vec4(col,1.0); // Output to screen

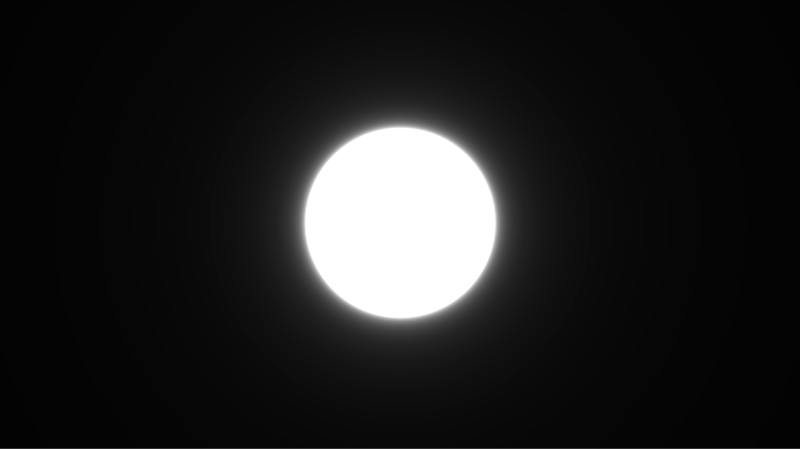

45}We end up with a shape that is slightly blurred around the edges.

The smoothstep function helps us create smooth transitions between colors, useful for implementing anti-aliasing. You may also see people use smoothstep to create emissive objects or neon glow effects. It is used very often in shaders.
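A fixed transition width such as 0.02 gives a visible blur. A common variation, sketched here as an assumption and not taken from the steps above, ties the width to the size of one pixel so the edge stays crisp at any resolution. The variable name px is made up for this example.

```glsl
float sdCircle(vec2 uv, float r, vec2 offset) {
  return length(uv - offset) - r;
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>
  uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

  float d = sdCircle(uv, 0.2, vec2(0.));

  // One pixel measured in uv units: uv.y covers a range of 1.0 across iResolution.y pixels.
  float px = 1.0 / iResolution.y;

  // Blend across roughly one pixel on either side of the edge.
  float res = smoothstep(-px, px, d);

  vec3 col = mix(vec3(1, 0, 0), vec3(1), res); // red circle on a white background
  fragColor = vec4(col, 1.0);
}
```

Because px shrinks as the canvas grows, the soft band around the circle stays about two pixels wide whether the shader runs in the small preview or in fullscreen.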

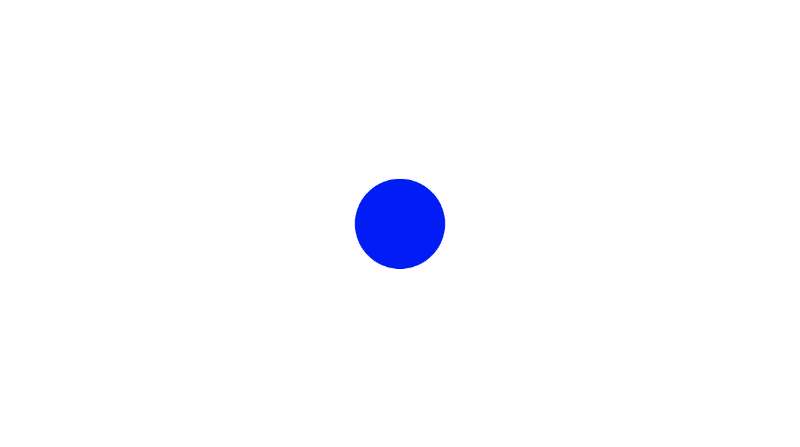

Drawing a Heart ❤️

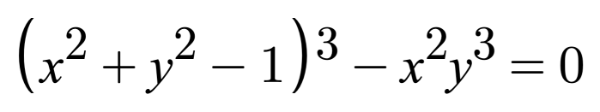

In this section, I’ll teach you how to draw a heart using Shadertoy. Keep in mind that there are multiple styles of hearts. I’ll show you how to create just one particular style of heart using an equation from Wolfram MathWorld.

If we want to apply an offset to this heart curve, then we need to subtract it from the x-component and y-component before applying any sort of operation (such as exponentiation) on them.

s = x - offsetX
t = y - offsetY

(s^2 + t^2 - 1)^3 - s^2 * t^3 = 0

x = x-coordinate on graph
y = y-coordinate on graph

You can play around with offsets on a heart curve using the graph I created on Desmos.

Now, how do we create an SDF for a heart in Shadertoy? We simply set the left-hand side (LHS) of the equation equal to the distance, d. Then, it’s the same process as we learned in Part 4.

float sdHeart(vec2 uv, float size, vec2 offset) {
  float x = uv.x - offset.x;
  float y = uv.y - offset.y;
  float xx = x * x;
  float yy = y * y;
  float yyy = yy * y;
  float group = xx + yy - size;
  float d = group * group * group - xx * yyy;

  return d;
}

vec3 drawScene(vec2 uv) {
  vec3 col = vec3(1);
  float heart = sdHeart(uv, 0.04, vec2(0));

  col = mix(vec3(1, 0, 0), col, step(0., heart));

  return col;
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>
  uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

  vec3 col = drawScene(uv);

  // Output to screen
  fragColor = vec4(col,1.0);
}

Understanding the pow Function

You may be wondering why I created the sdHeart function in such a weird manner. Why not use the pow function that is available to us? The pow(x,y) function takes in a value, x, and raises it to the power of y.

If you try using the pow function, you’ll see right away how oddly the heart behaves.

float sdHeart(vec2 uv, float size, vec2 offset) {
  float x = uv.x - offset.x;
  float y = uv.y - offset.y;
  float group = pow(x,2.) + pow(y,2.) - size;
  float d = pow(group,3.) - pow(x,2.) * pow(y,3.);

  return d;
}

Well, that doesn’t look right 🤔. If you sent that to someone on Valentine’s Day, they might think it’s an inkblot test.

So why does the pow(x,y) function behave so strangely? If you look closer at the documentation for this function, you’ll see that the result is undefined if x is less than zero, or if x equals zero and y is less than or equal to zero.

Keep in mind that the implementation of the pow function varies by compiler and hardware, so you may not encounter this issue when developing shaders for other platforms outside Shadertoy, or you may experience different issues.

Because our coordinate system is set up to have negative values for x and y, the result of the pow function is sometimes undefined. In Shadertoy, whatever value comes back in that case still flows through the rest of our math, which leads to confusing results.

We can experiment with how undefined behaves with different arithmetic operations by debugging the canvas using color. Let’s try adding a number to undefined:

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>

  vec3 col = vec3(pow(-0.5, 1.));
  col += 0.5;

  fragColor = vec4(col,1.0);
  // Screen is gray which means undefined is treated as zero
}

Let’s try subtracting a number from undefined:

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>

  vec3 col = vec3(pow(-0.5, 1.));
  col -= -0.5;

  fragColor = vec4(col,1.0);
  // Screen is gray which means undefined is treated as zero
}

Let’s try multiplying a number by undefined:

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>

  vec3 col = vec3(pow(-0.5, 1.));
  col *= 1.;

  fragColor = vec4(col,1.0);
  // Screen is black which means undefined is treated as zero
}

Let’s try dividing undefined by a number:

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>

  vec3 col = vec3(pow(-0.5, 1.));
  col /= 1.;

  fragColor = vec4(col,1.0);
  // Screen is black which means undefined is treated as zero
}

From the observations we’ve gathered, we can conclude that undefined is treated as a value of zero when used in arithmetic operations. However, this could still vary by compiler and graphics hardware. Therefore, you need to be careful how you use the pow function in your shader code.
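One way to keep using pow while staying inside its defined domain is to only ever pass it non-negative bases. The sketch below works under that assumption: even powers take abs(x) first, and odd powers restore the sign afterwards. The helper names powEven and powOdd are hypothetical and exist only for this example.

```glsl
// Hypothetical helpers: only non-negative bases ever reach pow.
float powEven(float x, float n) { return pow(abs(x), n); }           // for even n, x^n == |x|^n
float powOdd(float x, float n)  { return sign(x) * pow(abs(x), n); } // for odd n, x^n == sign(x) * |x|^n

float sdHeart(vec2 uv, float size, vec2 offset) {
  float x = uv.x - offset.x;
  float y = uv.y - offset.y;
  float group = powEven(x, 2.) + powEven(y, 2.) - size;
  float d = powOdd(group, 3.) - powEven(x, 2.) * powOdd(y, 3.);

  return d;
}

vec3 drawScene(vec2 uv) {
  vec3 col = vec3(1);
  float heart = sdHeart(uv, 0.04, vec2(0));

  col = mix(vec3(1, 0, 0), col, step(0., heart));

  return col;
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>
  uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

  fragColor = vec4(drawScene(uv), 1.0);
}
```

For small integer exponents like these, plain multiplication is simpler and avoids the question entirely, so treat this as an option for cases where the exponent is not a fixed small number.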

If you want to square a value, a common trick is to use the dot function to compute the dot product between a vector and itself. This lets us rewrite the sdHeart function to be a bit cleaner:

float sdHeart(vec2 uv, float size, vec2 offset) {
  float x = uv.x - offset.x;
  float y = uv.y - offset.y;
  float group = dot(x,x) + dot(y,y) - size;
  float d = group * dot(group, group) - dot(x,x) * dot(y,y) * y;

  return d;
}

Calling dot(x,x) is the same as squaring the value of x, but you don’t have to deal with the hassles of the pow function.
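The same trick applies to whole vectors: dot(p, p) returns the squared length of p, so x² + y² collapses into a single call. Here is one possible variation of the function, with p as an illustrative variable name.

```glsl
float sdHeart(vec2 uv, float size, vec2 offset) {
  vec2 p = uv - offset;           // shift both components at once
  float group = dot(p, p) - size; // dot(p, p) == p.x*p.x + p.y*p.y

  return group * group * group - p.x * p.x * p.y * p.y * p.y;
}

vec3 drawScene(vec2 uv) {
  vec3 col = vec3(1);
  float heart = sdHeart(uv, 0.04, vec2(0));

  col = mix(vec3(1, 0, 0), col, step(0., heart));

  return col;
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>
  uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

  fragColor = vec4(drawScene(uv), 1.0);
}
```

This should draw the same red heart as before, since only the way the squares are computed has changed.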

Using the sdStar5 SDF

Inigo Quilez has created many 2D SDFs and 3D SDFs that developers across Shadertoy utilize. In this section, I’ll discuss how we can use his 2D SDF list together with techniques we learned in Part 4 of my Shadertoy series to draw 2D shapes.

Shapes created with SDFs are commonly referred to as “primitives” because they form the building blocks for creating more complex shapes. For 2D, it’s pretty simple to draw shapes on the canvas, but it’ll become more complex when we discuss 3D shapes.

Let’s practice with a star SDF because drawing stars is always fun. Navigate to Inigo Quilez’s website and scroll down to the SDF called “Star 5 - exact”. It should have the following definition:

float sdStar5(in vec2 p, in float r, in float rf)
{
  const vec2 k1 = vec2(0.809016994375, -0.587785252292);
  const vec2 k2 = vec2(-k1.x,k1.y);
  p.x = abs(p.x);
  p -= 2.0*max(dot(k1,p),0.0)*k1;
  p -= 2.0*max(dot(k2,p),0.0)*k2;
  p.x = abs(p.x);
  p.y -= r;
  vec2 ba = rf*vec2(-k1.y,k1.x) - vec2(0,1);
  float h = clamp( dot(p,ba)/dot(ba,ba), 0.0, r );
  return length(p-ba*h) * sign(p.y*ba.x-p.x*ba.y);
}

Don’t worry about the in qualifiers in the function. You can remove them if you want, since in is the default qualifier if none is specified.
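If the qualifiers are unfamiliar, this small sketch contrasts in with inout; the out qualifier is the one already used for fragColor in mainImage. The function names shifted and shiftInPlace are made up for this example.

```glsl
// in (the default): the function works on a copy, so the caller's value is untouched.
vec2 shifted(in vec2 p, vec2 offset) {
  p -= offset;
  return p;
}

// inout: changes made inside the function are written back to the caller's variable.
void shiftInPlace(inout vec2 p, vec2 offset) {
  p -= offset;
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>
  uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

  vec2 a = shifted(uv, vec2(0.2, 0.)); // uv itself is unchanged
  vec2 b = uv;
  shiftInPlace(b, vec2(0.2, 0.));      // b is modified in place

  // a and b hold the same value, so the two circles coincide
  float d = min(length(a), length(b)) - 0.1;
  vec3 col = mix(vec3(1, 1, 0), vec3(0), step(0., d));

  fragColor = vec4(col, 1.0);
}
```

This is why sdStar5 can freely reassign p inside its body: with in, it only ever changes its own copy of the UV coordinates.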

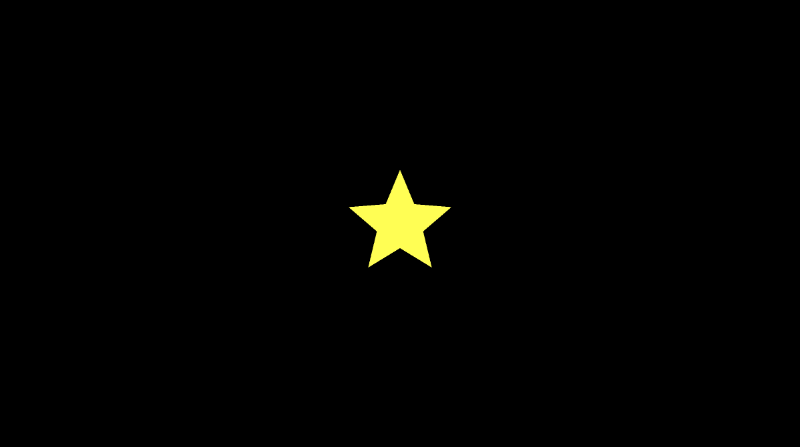

Let’s create a new Shadertoy shader with the following code:

1float sdStar5(in vec2 p, in float r, in float rf)

2{

3 const vec2 k1 = vec2(0.809016994375, -0.587785252292);

4 const vec2 k2 = vec2(-k1.x,k1.y);

5 p.x = abs(p.x);

6 p -= 2.0*max(dot(k1,p),0.0)*k1;

7 p -= 2.0*max(dot(k2,p),0.0)*k2;

8 p.x = abs(p.x);

9 p.y -= r;

10 vec2 ba = rf*vec2(-k1.y,k1.x) - vec2(0,1);

11 float h = clamp( dot(p,ba)/dot(ba,ba), 0.0, r );

12 return length(p-ba*h) * sign(p.y*ba.x-p.x*ba.y);

13}

14

15vec3 drawScene(vec2 uv) {

16 vec3 col = vec3(0);

17 float star = sdStar5(uv, 0.12, 0.45);

18

19 col = mix(vec3(1, 1, 0), col, step(0., star));

20

21 return col;

22}

23

24void mainImage( out vec4 fragColor, in vec2 fragCoord )

25{

26 vec2 uv = fragCoord/iResolution.xy; // <0, 1>

27 uv -= 0.5; // <-0.5,0.5>

28 uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

29

30 vec3 col = drawScene(uv);

31

32 // Output to screen

33 fragColor = vec4(col,1.0);

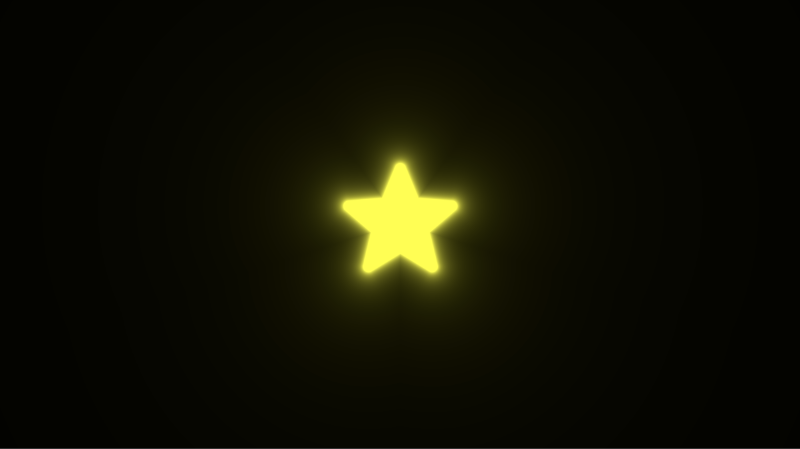

34}When we run this code, you should be able to see a bright yellow star! ⭐

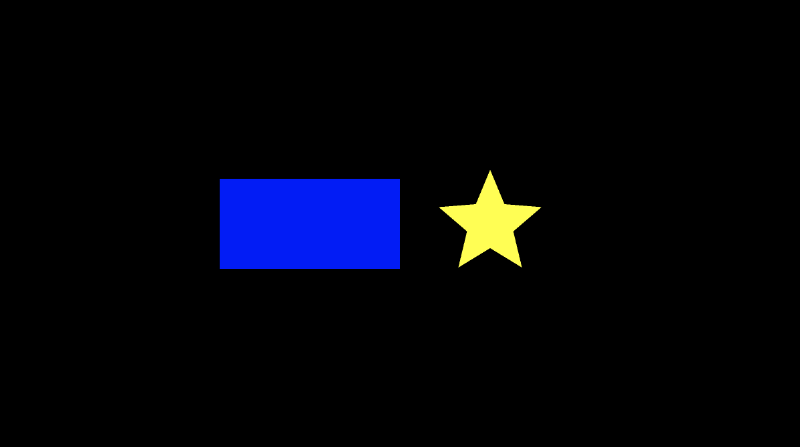

One thing is missing though. We need to add an offset at the beginning of the sdStar5 function by shifting the UV coordinates a bit. We can add a new parameter called offset, and we can subtract this offset from the vector, p, which represents the UV coordinates we passed into this function.

Our finished code should look like this:

float sdStar5(in vec2 p, in float r, in float rf, vec2 offset)
{
  p -= offset; // This will subtract offset.x from p.x and subtract offset.y from p.y
  const vec2 k1 = vec2(0.809016994375, -0.587785252292);
  const vec2 k2 = vec2(-k1.x,k1.y);
  p.x = abs(p.x);
  p -= 2.0*max(dot(k1,p),0.0)*k1;
  p -= 2.0*max(dot(k2,p),0.0)*k2;
  p.x = abs(p.x);
  p.y -= r;
  vec2 ba = rf*vec2(-k1.y,k1.x) - vec2(0,1);
  float h = clamp( dot(p,ba)/dot(ba,ba), 0.0, r );
  return length(p-ba*h) * sign(p.y*ba.x-p.x*ba.y);
}

vec3 drawScene(vec2 uv) {
  vec3 col = vec3(0);
  float star = sdStar5(uv, 0.12, 0.45, vec2(0.2, 0)); // Add an offset to shift the star's position

  col = mix(vec3(1, 1, 0), col, step(0., star));

  return col;
}

void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
  vec2 uv = fragCoord/iResolution.xy; // <0, 1>
  uv -= 0.5; // <-0.5,0.5>
  uv.x *= iResolution.x/iResolution.y; // fix aspect ratio

  vec3 col = drawScene(uv);

  // Output to screen
  fragColor = vec4(col,1.0);
}

Using the sdBox SDF

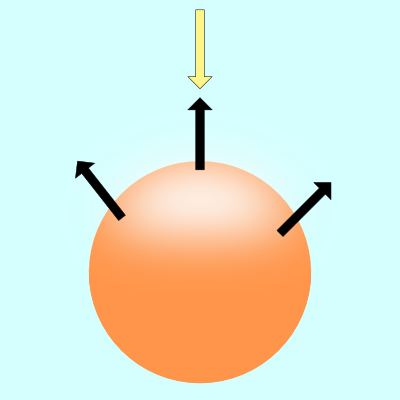

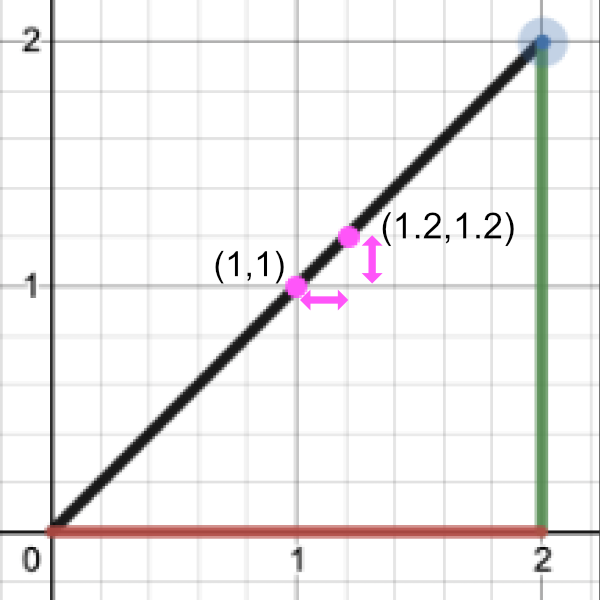

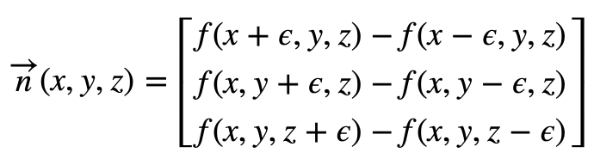

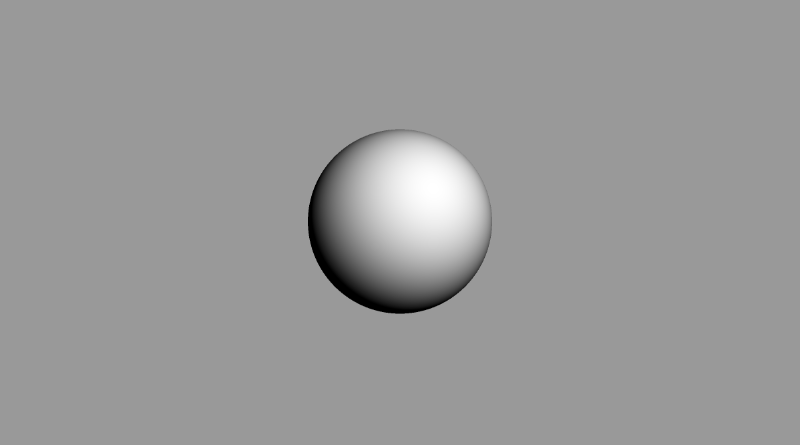

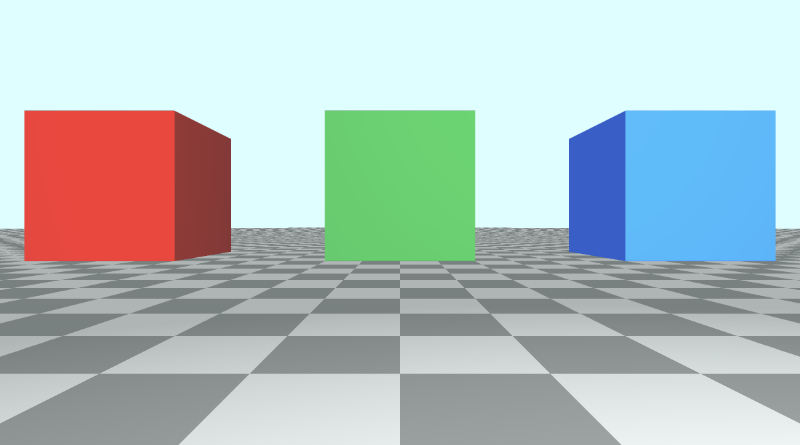

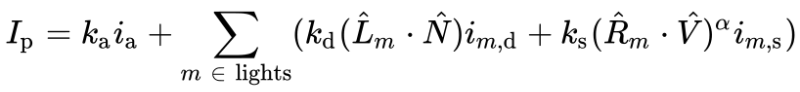

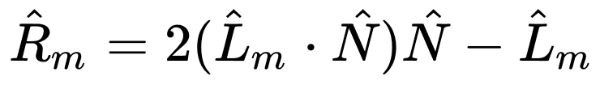

It’s quite common to draw boxes/rectangles, so we’ll select the SDF titled “Box - exact.” It has the following definition: