Rendering an Image of a 3D Scene: an Overview

It All Starts with a Computer and a Computer Screen

Introduction

The lesson Introduction to Raytracing: A Simple Method for Creating 3D Images gave you a quick introduction to some important concepts in rendering and computer graphics in general, as well as the source code of a small ray tracer (with which we rendered a scene containing a few spheres). Ray tracing is a very popular technique for rendering a 3D scene (mostly because it is easy to implement and is a more natural way of thinking about how light propagates in space, as quickly explained in lesson 1); however, other methods exist. In this lesson, we will look at what rendering means and what sort of problems we need to solve to render an image of a 3D scene, and we will quickly review the most important techniques that were developed to solve these problems. Our study will focus on ray tracing and rasterization, two popular algorithms used to solve the visibility problem (finding out which of the objects making up the scene are visible through the camera). We will also look at shading, the step in which the appearance of the objects as well as their brightness is defined.

It All Starts with a Computer (and a Computer Screen)

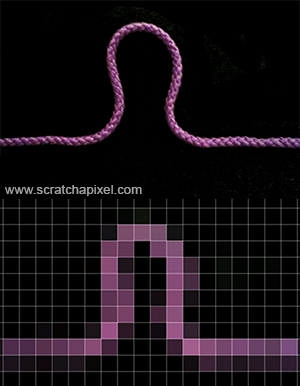

The journey into the world of computer graphics starts… with a computer. It might sound strange to start this lesson by stating what may seem obvious to you, but it is so obvious that we take it for granted and never think about what it means when it comes to making images with a computer. More than the computer itself, what we should be concerned about is how we display images with a computer: the computer screen. The computer and the computer screen have something important in common. They work with discrete structures, unlike the world around us, which is made of continuous structures (at least at the macroscopic level). These discrete structures are the bit for the computer and the pixel for the screen. Let’s take a simple example. Take a thread in the real world. It is one continuous piece. But representing this thread on the surface of a computer screen requires “cutting” or “breaking” it down into small pieces called pixels. This idea is illustrated in figure 1.

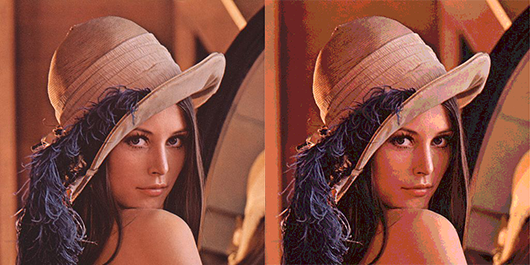

Figure 1: in the real world, everything is “continuous”. But in the world of computers, an image is made of discrete blocks, the pixels.

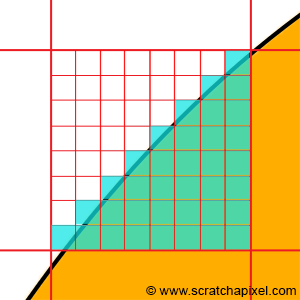

Figure 2: the process of representing an object on the surface of a computer can be seen as if a grid was laid out on the surface of the object. Every pixel of that grid overlapping the object is filled in with the color of the underlying object. But what happens when the object only partially overlaps the surface of a pixel? Which color should we fill the pixel with?

In computing, the process of converting any continuous object (a continuous function in mathematics, the image of a thread) into a discrete representation is called discretization. Obvious? Yes, and yet most if not all problems in computer graphics come from the very nature of the technology a computer is based on: 0, 1, and pixels.

You may still think “who cares?”. For someone watching a video on a computer, it’s probably not very important indeed. But if you have to create this video, this is probably something you should care about. Think about this. Let’s imagine we need to represent a sphere on the surface of a computer screen. Let’s look at a sphere and apply a grid on top of it. The grid represents the pixels your screen is made of (figure 2). The sphere overlaps some of the pixels completely. Some of the pixels are also empty. However, some of the pixels have a problem. The sphere overlaps them only partially. In this particular case, what should we fill the pixel with: the color of the background or the color of the object?

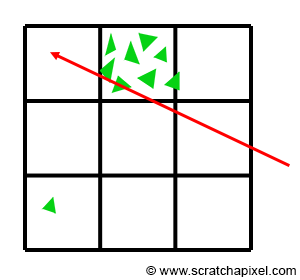

Intuitively you might think “if the background occupies 35% of the pixel area, and the object 65%, let’s assign a color to the pixel which is composed of the background color for 35% and of the object color for 65%”. This is pretty good reasoning, but in fact, you just moved the problem around. How do you compute these areas in the first place anyway? One possible solution to this problem is to subdivide the pixel into sub-pixels, count the number of sub-pixels the background overlaps, and assume all other sub-pixels are overlapped by the object. The area covered by the background can then be computed by dividing the number of sub-pixels overlapped by the background by the total number of sub-pixels.

Figure 4: to approximate the color of a pixel which is both overlapping a shape and the background, the surface can be subdivided into smaller cells. The pixel’s color can be found by computing the number of cells overlapping the shape multiplied by the shape’s color plus the number of cells overlapping the background multiplied by the background color, divided by the entire number of cells. However, no matter how small the cells are, some of them will always overlap both the shape and the background.

However, no matter how small the sub-pixels are, there will always be some of them overlapping both the background and the object. While you might get a pretty good approximation of the object and background coverage that way (the smaller the sub-pixels, the better the approximation), it will always just be an approximation. Computers can only approximate. Different techniques can be used to compute this approximation (subdividing the pixel into sub-pixels is just one of them), but what we need to remember from this example is that a lot of the problems we will have to solve in computer science and computer graphics come from having to “simulate” a world made of continuous structures with discrete structures. And having to go from one to the other raises all sorts of complex problems (or maybe simple to understand, but complex to solve).
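The sub-pixel idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the pixel is taken to be a unit square, each sub-pixel is tested at its center, and the names `pixel_coverage`, `blend`, and `in_disk` are hypothetical helpers introduced for this example.

```python
def pixel_coverage(px, py, inside, n=4):
    """Estimate the fraction of pixel (px, py) covered by a shape.

    The pixel is assumed to be the unit square [px, px+1] x [py, py+1].
    `inside(x, y)` is a hypothetical predicate that returns True when the
    point (x, y) lies within the shape. The pixel is split into n x n
    sub-pixels and each sub-pixel is tested at its center.
    """
    hits = 0
    for j in range(n):
        for i in range(n):
            x = px + (i + 0.5) / n
            y = py + (j + 0.5) / n
            if inside(x, y):
                hits += 1
    return hits / (n * n)


def blend(object_color, background_color, coverage):
    """Mix two RGB colors in proportion to the estimated coverage."""
    return tuple(coverage * o + (1.0 - coverage) * b
                 for o, b in zip(object_color, background_color))


def in_disk(x, y, cx=4.0, cy=4.0, r=3.0):
    """Example shape: a disk of radius r centered at (cx, cy)."""
    return (x - cx) ** 2 + (y - cy) ** 2 <= r ** 2


full = pixel_coverage(4, 4, in_disk)        # pixel well inside the disk
empty = pixel_coverage(0, 0, in_disk)       # pixel well outside the disk
edge = pixel_coverage(6, 4, in_disk, n=16)  # pixel straddling the boundary
```

Increasing `n` refines the estimate for the boundary pixel, but as noted above, the result remains an approximation.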

Another way of solving this problem is also obviously to increase the resolution of the image. In other words, to represent the same shape (the sphere) using more pixels. However, even then, we are limited by the resolution of the screen.

Images and screens using a two-dimensional array of pixels to represent or display images are called raster graphics and raster displays respectively. The term raster more generally refers to a grid of x and y coordinates on a display space. We will learn more about rasterization in the chapter on perspective projection.

As suggested, the main issue with representing images of objects with a computer is that the object shapes need to be “broken” down into discrete surfaces, the pixels. More generally, computers can only deal with discrete data, and more importantly, the precision with which numbers can be represented in the memory of the computer is limited by the number of bits used to encode these numbers. The number of colors that you can display on a screen, for example, is limited by the number of bits used to encode RGB values. In the early days of computers, a single bit was used to encode the “brightness” of pixels on the screen. When the bit had the value 0 the pixel was black, and when it was 1 the pixel was white. The first generation of computers that used color displays encoded color using a single byte, or 8 bits. With 8 bits (3 bits for the red channel, 3 bits for the green channel, and 2 bits for the blue channel) you can only define 256 distinct colors (2^3 * 2^3 * 2^2). What happens then when you want to display a color which is not one of the colors you can use? The solution is to find the color in the palette that most closely matches the color you ideally want to display, and display this matching color instead. This process is called color quantization.
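To make the 3-3-2 bit layout concrete, here is a minimal Python sketch. It is an assumption-laden illustration: it quantizes by simply dropping the low-order bits of each channel (truncation), which is a simpler stand-in for searching the palette for the closest match, and the function names `quantize_332` and `expand_332` are hypothetical.

```python
def quantize_332(r, g, b):
    """Pack an RGB color (each channel in 0..255) into a single byte:
    3 bits for red, 3 bits for green, 2 bits for blue.
    The low-order bits of each channel are simply discarded."""
    return ((r >> 5) << 5) | ((g >> 5) << 2) | (b >> 6)


def expand_332(byte):
    """Recover an approximate 8-bit-per-channel color from the packed byte."""
    r3 = (byte >> 5) & 0b111
    g3 = (byte >> 2) & 0b111
    b2 = byte & 0b11
    # Rescale each channel back to the 0..255 range.
    return (r3 * 255 // 7, g3 * 255 // 7, b2 * 255 // 3)


# Two colors that differ slightly end up sharing the same palette entry.
same_entry = quantize_332(200, 100, 50) == quantize_332(210, 110, 60)
```

Because many input colors collapse onto the same byte, the color that is displayed is generally only close to the color that was requested.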

Figure 5: our eyes can perceive very small color variations. When too few bits are used to encode colors, banding occurs (right).

The problem with color quantization is that when we don’t have enough colors to accurately sample a continuous gradation of color tones, continuous gradients appear as a series of discrete steps or bands of color. This effect is called banding (it’s also known under the term posterization or false contouring).

There’s no need to worry about banding so much these days (the most common image formats use 24 bits to encode colors, or 32 bits when an 8-bit alpha channel is included; with 24 bits you can display about 16.7 million distinct colors). However, keep in mind that fundamentally, colors and pretty much any other continuous function that we may need to represent in the memory of a computer have to be broken down into a series of discrete values, whose precision is limited by the number of bits used to encode them.
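The effect of bit depth on a gradient can be sketched as follows. This is a small illustration rather than a reference implementation; `quantize_gradient` is a hypothetical helper that snaps a smooth ramp of values between 0 and 1 to the nearest level representable with a given number of bits.

```python
def quantize_gradient(width, bits):
    """Quantize a linear ramp from 0 to 1, sampled at `width` positions,
    to the 2**bits levels that `bits` bits can represent."""
    levels = 2 ** bits
    out = []
    for i in range(width):
        t = i / (width - 1)            # continuous value in [0, 1]
        q = round(t * (levels - 1))    # nearest representable level
        out.append(q / (levels - 1))
    return out


coarse = quantize_gradient(256, 2)   # only 4 distinct tones: visible bands
fine = quantize_gradient(256, 8)     # 256 distinct tones across the ramp
colors_24_bits = 2 ** 24             # 16,777,216 distinct colors
```

With 2 bits the 256 samples collapse into 4 flat bands, which is the banding effect described above; with 8 bits every sample keeps its own tone.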

Figure 6: the shape of objects smaller than a pixel can’t be accurately captured by a digital image.

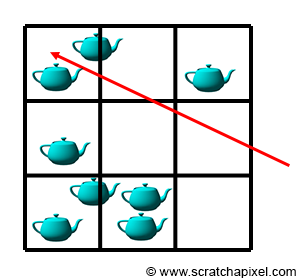

Finally, having to break down a continuous function into discrete values may lead to what’s known in signal processing and computer graphics as aliasing. The main problem with digital images is that the amount of detail you can capture depends on the image resolution. As a result, small details (roughly speaking, details smaller than a pixel) can’t be captured accurately by the image. Imagine for example that you want to take a photograph with a digital camera of a teapot that is so far away that it is smaller than a pixel in the image (figure 6). A pixel is a discrete structure, thus we can only fill it with a constant color. If in this example we fill it with the teapot’s color (assuming the teapot has a constant color, which is probably not the case if it’s shaded), your teapot will only show up as a dot in the image: you failed to capture the teapot’s shape (and shading). In reality, aliasing is far more complex than that, but for now you should know the term and keep in mind that by the very nature of digital images (because pixels are discrete elements), an image of a given resolution can only accurately represent objects of a given size. We will explain the relationship between the objects’ size and the image resolution in the lesson on Aliasing (which you can find in the Mathematics and Physics of Computer Graphics section).

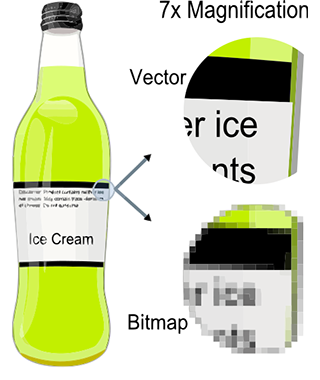

Images are just a collection of pixels. As mentioned before, when an image of the real world is stored in a digital image, shapes are broken down into discrete structures, the pixels. The main drawback of raster images (and raster screens) is that the resolution of the images we can store or display is limited by the image or the screen resolution (its dimension in pixels). Zooming in doesn't reveal more details in the image. Vector graphics were designed to address this issue. With vector graphics, you do not store pixels but represent the shape of objects (and their colors) using mathematical expressions. That way, rather than being limited by the image resolution, the shapes defined in the file can be rendered on the fly at the desired resolution, producing an image of the object's shapes that is always perfectly sharp.

To summarize, computers work with discrete values when in fact the processes from the real world that we want to simulate with computers are generally (if not always) continuous (at least at the macroscopic and even microscopic scale). This is a very fundamental issue that causes all sorts of puzzling problems, to which a very large chunk of computer graphics research and theory is devoted.

Another field of computer graphics in which the discrete representation of the world is a particular issue is fluid simulation. The flow of fluids is by its very nature a continuous process, but to simulate the motion of fluids with a computer, we need to divide space into "discrete" structures, generally small cubes called cells.

And It Follows With a 3D Scene

Before we can speak about rendering, we need to consider what we are going to render, and what we are looking at. If you have nothing to look at, there is nothing to render.

The real world is made of objects having a very wide variety of shapes, appearances, and structures. For example, what’s the difference between smoke, a chair, and water making up the ocean? In computer graphics, we generally like to see objects as either being solid or not. However, in the real world, the only thing that differentiates the two is the density of matter making up these objects. Smoke is made of molecules loosely connected and separated by a large amount of empty space, while wood making up a chair is made of molecules tightly packed into the smallest possible space. In CG though, we generally just need to define the object’s external shape (we will speak about how we render non-solid objects later on in this lesson). How do we do that?

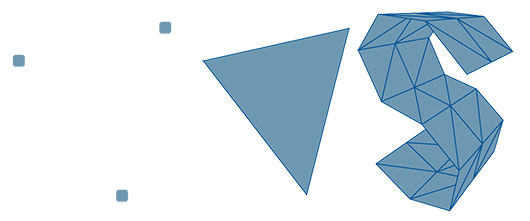

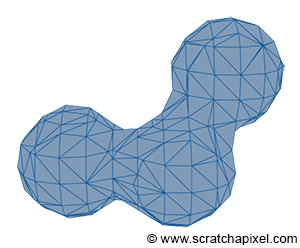

In the previous lesson, Where Do I Start? A Gentle Introduction to Computer Graphics Programming, we already introduced the idea that to define a shape within the memory of a computer, we first need to define the concept of a point in 3D space. Generally, a point is defined as three floats within the memory of a computer, one for each of the three axes of the Cartesian coordinate system: the x-, y- and z-axes. From here, we can simply define several points in space and connect them to define a surface (a polygon). Note that polygons should always be coplanar, which means that all points making up a face or polygon should lie on the same plane. With three points, you can create the simplest possible shape of all: a triangle. You will see triangles used everywhere, especially in ray tracing, because many different techniques have been developed to efficiently compute the intersection of a line with a triangle. When faces or polygons have more than three points (also called vertices), it’s not uncommon to convert these faces into triangles, a process called triangulation.
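As a rough illustration of these ideas, the Python sketch below stores a point as three floats and triangulates a polygon by connecting its first vertex to every other edge (a "fan" triangulation). This is one simple approach among several and it assumes the polygon is convex and coplanar; the names `Point`, `Triangle`, and `triangulate_fan` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]           # x, y, z coordinates
Triangle = Tuple[Point, Point, Point]


def triangulate_fan(polygon: List[Point]) -> List[Triangle]:
    """Split a convex, coplanar polygon into triangles that all share
    the polygon's first vertex. A polygon with n vertices yields n - 2
    triangles."""
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least three vertices")
    first = polygon[0]
    return [(first, polygon[i], polygon[i + 1])
            for i in range(1, len(polygon) - 1)]


# A unit quad lying in the z = 0 plane becomes two triangles.
quad = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
triangles = triangulate_fan(quad)
```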

Figure 1: the basic brick of all 3D objects is a triangle. A triangle can be created by connecting (in 2D or 3D) 3 points or vertices to each other. More complex shapes can be created by assembling triangles.

We will explain later in this lesson why converting geometry to triangles is a good idea. But the point here is that the simplest possible surface or object you can create is a triangle, and while a triangle on its own is not very useful, you can create more complex shapes by assembling triangles. In many ways, this is what modeling is about. The process is very similar to putting bricks together to create more complex shapes and surfaces.

Figure 2: to approximate a curved surface we sample the curve along the path of the curve and connect these samples.

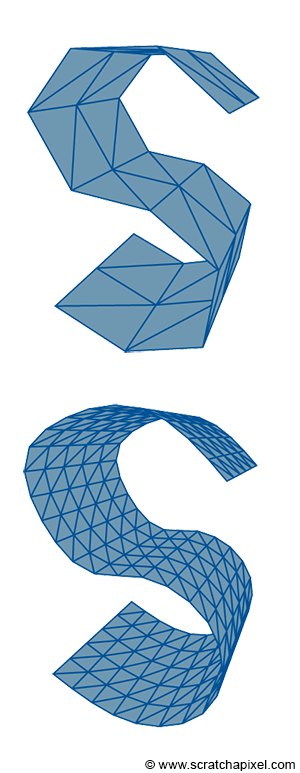

Figure 3: you can use more triangles to improve the curvature of surfaces, but the geometry will become heavier to render.

The world is not polygonal!

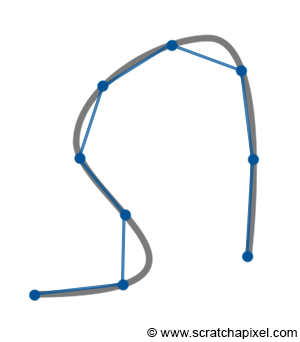

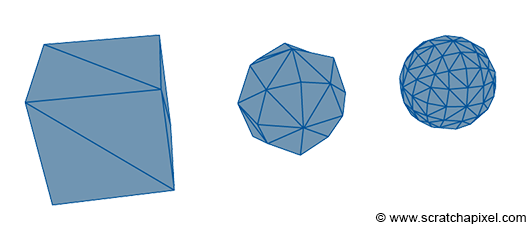

People new to computer graphics often ask how curved surfaces can be created from triangles, when a triangle is a flat and angular surface. First, the way we define the surface of objects in CG (using triangles or polygons) is a very crude representation of reality. What may seem like a flat surface to our eyes (for example the surface of a wall) is generally an incredibly complex landscape at the microscopic level. Interestingly enough, the microscopic structure of objects has a great influence on their appearance, not on their overall shape. Something worth keeping in mind.

But to come back to the main question, using triangles or polygons is indeed not the best way of representing curved surfaces. It gives a faceted look to objects, a little bit like a cut diamond (this faceted look can be slightly improved with a technique called smooth shading, but smooth shading is just a trick we will learn about when we get to the lessons on shading). If you draw a smooth curve, you can approximate this curve by placing a few points along it and connecting these points with straight lines (which we call segments). To improve this approximation you can simply reduce the size of the segments, which is the same as creating more points along the curve. The process of placing points or vertices along a smooth surface is called sampling (the process of converting a smooth surface to a triangle mesh is called tessellation; we will explain further in this chapter how smooth surfaces can be defined). Similarly, with 3D shapes, we can create more and smaller triangles to better approximate curved surfaces. Of course, the more geometry (or triangles) we create, the longer it will take to render the object. This is why the art of rendering is often to find a tradeoff between the amount of geometry you use to approximate the curvature of an object and the time it takes to render this 3D model.

The amount of geometric detail you put in a 3D model also depends on how closely you will see this model in your image. The closer you are to the object, the more details you may want to see. Dealing with model complexity is also a very large field of research in computer graphics (a lot of research has been done to find automatic or adaptive ways of adjusting the number of triangles an object is made of depending on its distance to the camera or the curvature of the object).
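The tradeoff between the number of samples and the quality of the approximation can be illustrated with a circle. The sketch below (the helper names `sample_circle` and `polyline_length` are hypothetical) places n points along a circle, connects them with segments, and compares the total length of the segments with the true circumference.

```python
import math


def sample_circle(radius, n):
    """Place n evenly spaced points along a circle centered at the origin."""
    return [(radius * math.cos(2.0 * math.pi * k / n),
             radius * math.sin(2.0 * math.pi * k / n))
            for k in range(n)]


def polyline_length(points):
    """Total length of the closed chain of segments joining the points."""
    total = 0.0
    for k in range(len(points)):
        x0, y0 = points[k]
        x1, y1 = points[(k + 1) % len(points)]
        total += math.hypot(x1 - x0, y1 - y0)
    return total


true_length = 2.0 * math.pi   # circumference of a circle of radius 1
error_8 = true_length - polyline_length(sample_circle(1.0, 8))
error_64 = true_length - polyline_length(sample_circle(1.0, 64))
```

The error shrinks as the number of samples grows, but it never reaches zero: the segments always cut slightly inside the curve.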

In other words, it is impossible to render a perfect circle or a perfect sphere with polygons or triangles. However, keep in mind that computers work on discrete structures, as do monitors. There is no reason for a renderer to be able to perfectly render shapes like circles if they'll just be displayed using a raster screen in the end. The solution (which has been around for decades now) is simply to use triangles that are smaller than a pixel, at which point no one looking at the monitor can tell that your basic primitive is a triangle. This idea has been used very widely in high-quality rendering software such as Pixar's RenderMan, and in the past decade, it has appeared in real-time applications as well (as part of the tessellation process).

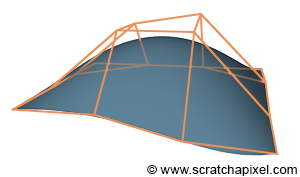

Figure 4: a Bezier patch and its control points, which are represented in this image by the orange net. Note how the resulting surface does not pass through the control points or vertices (except at the edges of the surface, which is a property of Bezier patches).

Figure 5: a cube turned into a sphere (almost a sphere) using the subdivision surface algorithm. The idea behind this algorithm is to create a smoother version of the original mesh by recursively subdividing it.

Polygonal meshes are easy to work with, which is why they are popular (most objects you see in CG feature films or video games are defined that way: as an assembly of polygons or triangles). However, as suggested before, they are not great for modeling curved or organic surfaces. This became a particular issue when computers started to be used to design manufactured objects such as cars (CAD). NURBS and Subdivision surfaces were designed to address this particular shortcoming. They are based on the idea that points only define a control mesh from which a perfectly curved surface can be computed mathematically. The surface itself is purely the result of an equation, thus it cannot be rendered directly (nor can the control mesh, which is only used as an input to the algorithm). It needs to be sampled, similarly to the way we sampled the curve earlier on (the points forming the base or input mesh are usually called control points; one of the characteristics of these techniques is that the resulting surface, in general, does not pass through these control points).

The main advantage of this approach is that you need fewer points (compared to the number of points required to get a smooth surface with polygons) to control the shape of a perfectly smooth surface, which can then be converted to a triangular mesh smoother than the original input control mesh. While it is possible to create curved surfaces with polygons, editing them is far more time-consuming (and still less accurate) than when similar shapes can be defined with just a few points, as with NURBS and Subdivision surfaces. If they are superior, why are they not used everywhere? They almost are. They are (slightly) more expensive to render than polygonal meshes because a polygonal mesh needs to be generated from the control mesh first (it takes an extra step), which is why they are not always used in video games (but many game engines such as the Cry Engine implement them), but they are in films.

NURBS are slightly more difficult to manipulate overall than polygonal meshes. This is why artists generally use subdivision surfaces instead, but NURBS are still used in design and CAD, where a high degree of precision is needed. NURBS and Subdivision surfaces will be studied in the Geometry section; however, in a further lesson in this section, we will learn about Bezier curves and surfaces (to render the Utah teapot), which in a way are quite similar to NURBS.
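To give a feel for how a smooth shape can be computed from a handful of control points, here is a small Python sketch that evaluates a Bezier curve (the 2D, one-parameter cousin of the Bezier patch) by repeated linear interpolation of the control points, a scheme known as de Casteljau's algorithm. It is a simplified stand-in for the surface techniques discussed above, not the method used in later lessons, and the names `lerp` and `bezier_point` are hypothetical.

```python
def lerp(a, b, t):
    """Linear interpolation between two 2D points."""
    return ((1.0 - t) * a[0] + t * b[0], (1.0 - t) * a[1] + t * b[1])


def bezier_point(control_points, t):
    """Evaluate a Bezier curve at parameter t in [0, 1] by repeatedly
    interpolating between neighboring control points until a single
    point remains."""
    points = list(control_points)
    while len(points) > 1:
        points = [lerp(points[i], points[i + 1], t)
                  for i in range(len(points) - 1)]
    return points[0]


# Four control points define a cubic curve.
controls = [(0.0, 0.0), (0.0, 2.0), (2.0, 2.0), (2.0, 0.0)]

# Sampling the curve yields the vertices of a polyline we could render.
samples = [bezier_point(controls, k / 16) for k in range(17)]
middle = bezier_point(controls, 0.5)
```

The curve starts at the first control point and ends at the last one, but it does not pass through the two inner control points, which only pull the curve toward them.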

NURBS and Subdivision surfaces are not similar. NURBS are indeed defined by a mathematical equation. They are part of a family of surfaces called parametric surfaces (see below). Subdivision surfaces are more the result of a 'process' applied to the input mesh, to smooth its surface by recursively subdividing it. Both techniques are detailed in the Geometry section.

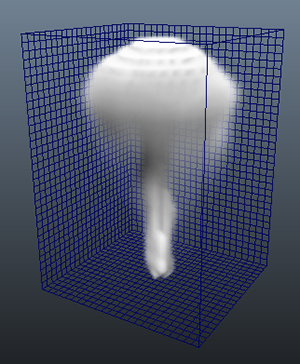

Figure 6: to represent fluids such as smoke or liquids, we need to store information such as the volume density in the cells of a 3D grid.

In most cases, 3D models are generated by hand. By hand, we mean that someone creates vertices in 3D space and connects them to make up the faces of the object. However, it is also possible to use simulation software to generate geometry. This is generally how you create water, smoke, or fire. Special programs simulate the way fluids move and generate a polygon mesh from this simulation. In the case of smoke or fire, the program will not generate a surface but a 3D grid (a rectangle or a box that is divided into equally spaced cells, also called voxels). Each cell of this grid can be seen as a small volume of space that is either empty or occupied by smoke. Smoke is mostly defined by its density, which is the information we will store in the cell. Density is just a float, but since we deal with a 3D grid, a 512x512x512 grid already consumes about 512 MB of memory (and we may need to store more data than just density, such as the smoke or fire temperature, its color, etc.). The size of this grid is 8 times larger each time we double the grid resolution (a 1024x1024x1024 grid requires 4 GB of storage). Fluid simulation is computationally intensive, the simulation generates very large files, and rendering the volume itself generally takes more time than rendering solid objects (we need to use a special algorithm known as ray-marching, which we will briefly introduce in the next chapters). In the image above (figure 6), you can see a screenshot of a 3D grid created in Maya.
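The memory figures quoted above follow from a simple calculation, sketched here in Python. It assumes one 4-byte float per cell and nothing else (no temperature, color, or file overhead); `grid_memory_bytes` is a hypothetical helper.

```python
def grid_memory_bytes(resolution, bytes_per_cell=4):
    """Memory needed by a cubic grid storing one value per cell.
    Assumes a single 4-byte float (the density) per voxel by default."""
    return resolution ** 3 * bytes_per_cell


MIB = 1024 ** 2
GIB = 1024 ** 3

size_512 = grid_memory_bytes(512)     # 512 * MIB bytes
size_1024 = grid_memory_bytes(1024)   # 4 * GIB bytes
growth = size_1024 // size_512        # doubling the resolution: 8x the memory
```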

When ray tracing is used, it is not always necessary to convert an object into a polygonal representation to render it. Ray tracing requires computing the intersection of rays (which are simply lines) with the geometry making up the scene. Finding out whether a line (a ray) intersects a geometrical shape can sometimes be done mathematically. This is possible because either:

- a geometric solution or,
- an algebraic solution exists to the ray-object intersection test.

This is generally possible when the shape of the object can be defined mathematically, with an equation. More generally, you can see this equation as a function representing a surface (such as the surface of a sphere) over all space. These surfaces are called implicit surfaces (or algebraic surfaces) because they are defined implicitly, by a function. The principle is very simple. Imagine you have two equations:

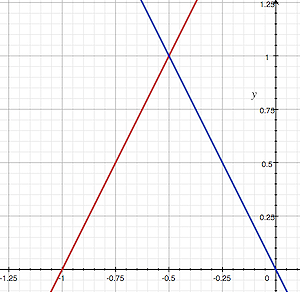

$$ \begin{array}{l} y = 2x + 2\\ y = -2x.\\ \end{array} $$

You can see a plot of these two equations in the adjacent image. This is an example of a system of linear equations. If you want to find out whether the two lines defined by these equations meet at a point (which you can see as an intersection), then there must be one x for which the two equations give the same y, which you can write as:

$$ 2x + 2 = -2x. $$

Solving for x, you get:

$$ \begin{array}{l} 4x + 2 = 0\\ 4x = -2\\ x = -\dfrac{1}{2}\\ \end{array} $$

Because a ray can also be defined with an equation, the two equations (the equation of the ray and the equation defining the shape of the object) can be solved together like any other system of equations, although the system is generally no longer linear (for a sphere, it reduces to a quadratic equation). If a solution to this system exists, then the ray intersects the object.

A very good and simple example of a shape whose intersection with a ray can be found using both the geometric and the algebraic methods is a sphere. You can find both methods explained in the lesson Rendering Simple Shapes.
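As a preview of the algebraic approach, here is a minimal Python sketch for a sphere centered at the origin. It is only an illustration under stated assumptions, not the code from the lesson Rendering Simple Shapes: substituting the ray equation O + tD into x^2 + y^2 + z^2 - R^2 = 0 gives a quadratic equation in t, which is solved with the usual formula. The function name `ray_sphere_intersect` and its return convention are hypothetical.

```python
import math


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def ray_sphere_intersect(origin, direction, radius):
    """Return the distance t to the nearest intersection of the ray
    origin + t * direction (t >= 0) with a sphere of the given radius
    centered at the origin, or None if the ray misses the sphere."""
    # Coefficients of a*t^2 + b*t + c = 0.
    a = dot(direction, direction)
    b = 2.0 * dot(origin, direction)
    c = dot(origin, origin) - radius * radius
    discriminant = b * b - 4.0 * a * c
    if discriminant < 0.0:
        return None                      # no real solution: the ray misses
    root = math.sqrt(discriminant)
    t0 = (-b - root) / (2.0 * a)
    t1 = (-b + root) / (2.0 * a)
    if t1 < 0.0:
        return None                      # the sphere is behind the ray
    return t0 if t0 >= 0.0 else t1


hit = ray_sphere_intersect((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), 1.0)
miss = ray_sphere_intersect((0.0, 2.0, -5.0), (0.0, 0.0, 1.0), 1.0)
```

This straightforward form of the quadratic formula is easy to read but can lose precision in some configurations, so a more careful solver would normally be preferred in production code.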

_What is the difference between parametric and implicit surfaces?_ Earlier on in the lesson, we mentioned that NURBS and Subdivision surfaces were also somehow defined mathematically. While this is true, there is a difference between NURBS and implicit surfaces (Subdivision surfaces can also be considered as a separate case, in which the base mesh is processed to produce a smoother and higher resolution mesh). NURBS are defined by what we call a parametric equation, an equation that is the function of one or several parameters. In 3D, the general form of this equation can be defined as follows:

$$ f(u,v) = (x(u,v), y(u,v), z(u,v)). $$

The parameters u and v are generally in the range of 0 to 1. An implicit surface is defined by a polynomial which is a function of three variables: x, y, and z.

$$ p(x, y, z) = 0. $$

For example, a sphere of radius R centered at the origin is defined parametrically with the following equation:

$$ f(\theta, \phi) = (R\sin(\theta)\cos(\phi), R\sin(\theta)\sin(\phi), R\cos(\theta)). $$

Here the parameters u and v are replaced by $\theta$ and $\phi$ respectively, with $0 \leq \theta \leq \pi$ and $0 \leq \phi \leq 2\pi$. The same sphere defined implicitly has the following form:

$$ x^2 + y^2 + z^2 - R^2 = 0. $$
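The relationship between the two descriptions can be checked numerically. In the Python sketch below (the function names are hypothetical, and the radius R is written explicitly in the parametric form), every point generated by the parametric equation is fed into the implicit equation, which should evaluate to zero up to floating-point rounding.

```python
import math


def sphere_parametric(theta, phi, R):
    """Point on a sphere of radius R for parameters theta in [0, pi]
    and phi in [0, 2*pi]."""
    return (R * math.sin(theta) * math.cos(phi),
            R * math.sin(theta) * math.sin(phi),
            R * math.cos(theta))


def sphere_implicit(x, y, z, R):
    """Implicit function of the same sphere: zero on the surface,
    negative inside, positive outside."""
    return x * x + y * y + z * z - R * R


R = 2.0
residuals = []
for i in range(9):
    for j in range(17):
        theta = math.pi * i / 8
        phi = 2.0 * math.pi * j / 16
        x, y, z = sphere_parametric(theta, phi, R)
        residuals.append(abs(sphere_implicit(x, y, z, R)))

largest_residual = max(residuals)
```

The parametric form is convenient for generating points on the surface (for example to build a mesh), while the implicit form is convenient for testing whether a given point is on, inside, or outside the surface.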

Figure 7: metaballs are useful to model organic shapes.

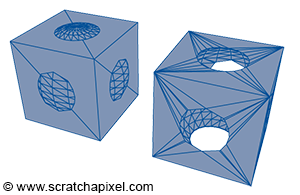

Figure 8: example of constructive geometry. The volume defined by the sphere was removed from the cube. You can see the two original objects on the left, and the resulting shape on the right.

Implicit surfaces are very useful in modeling but are not very common (and certainly less common than they used to be). It is possible to use implicit surfaces to create more complex shapes (implicit primitives such as spheres, cubes, cones, etc. are combined through boolean operations), a technique called constructive solid geometry (or CSG). Metaballs (invented in the early 1980s by Jim Blinn) are another form of implicit geometry used to create organic shapes.
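One common way to express boolean operations on implicit shapes is sketched below in Python. It relies on an assumed convention: each shape is a function of (x, y, z) that is negative inside, zero on the surface, and positive outside, so that union, intersection, and difference reduce to taking minimums and maximums. The cube function used here is not a polynomial, and all the names are hypothetical; this is an illustration of the principle rather than how any particular package implements CSG.

```python
def sphere(radius):
    """Implicit sphere centered at the origin."""
    return lambda x, y, z: x * x + y * y + z * z - radius * radius


def cube(half_size):
    """Implicit axis-aligned cube centered at the origin."""
    return lambda x, y, z: max(abs(x), abs(y), abs(z)) - half_size


def union(f, g):
    return lambda x, y, z: min(f(x, y, z), g(x, y, z))


def intersection(f, g):
    return lambda x, y, z: max(f(x, y, z), g(x, y, z))


def difference(f, g):
    """Points inside f that are not inside g."""
    return lambda x, y, z: max(f(x, y, z), -g(x, y, z))


def is_inside(shape, x, y, z):
    return shape(x, y, z) < 0.0


# Remove a sphere from a cube: only the corners of the cube remain.
carved = difference(cube(1.0), sphere(1.2))
corner_kept = is_inside(carved, 0.9, 0.9, 0.9)
center_removed = not is_inside(carved, 0.0, 0.0, 0.0)
```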

The problem with implicit surfaces, though, is that they are not easy to render. While it’s often possible to ray trace them directly (we can compute the intersection of a ray with an implicit surface using an algebraic approach, as explained earlier), in other cases they first need to be converted to a mesh. The process of converting an implicit surface to a mesh is not as straightforward as with NURBS or Subdivision surfaces and requires a special algorithm such as the marching cubes algorithm (proposed by Lorensen and Cline in 1987). It can also potentially lead to creating heavy meshes.

Check the section on Geometry, to read about these different topics in detail.

Triangle as the Rendering Primitive

In this series of lessons, we will study an example of an implicit surface with the ray-sphere intersection test. We will also see an example of a parametric surface with the Utah teapot, which uses Bezier surfaces. However, in general, most rendering APIs choose to convert the different geometry types to a triangular mesh and render the triangular mesh instead. This has several advantages. Supporting several geometry types such as polygonal meshes and implicit or parametric surfaces requires writing a ray-object intersection routine for each supported surface type. This is not only more code to write (with the obvious disadvantages it may have), but if you make this choice, it is also difficult to make these routines work in a general framework, which often results in degrading the performance of the render engine.

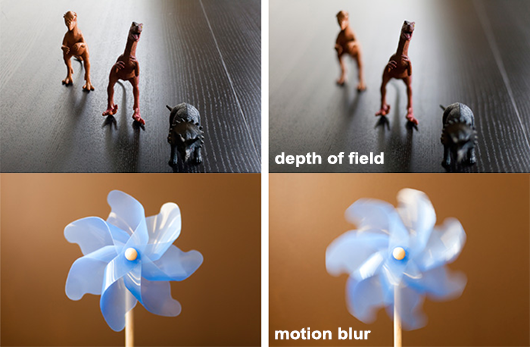

Keep in mind that rendering is more than just rendering 3D objects. It also needs to support many features such as motion blur, displacement, etc. Having to support many different geometry types means that each one of these types needs to work with the entire set of supported features, which is much harder than converting all surfaces to the same rendering primitive and making all the features work for this one single primitive only.

You also generally get better performance if you limit your code to rendering one primitive only, because you can focus all your efforts on rendering this one single primitive very efficiently. Triangles have generally been the preferred choice for ray tracing. A lot of research has been done to find the best possible (fastest/least instructions, least memory usage, and most stable) algorithm to compute the intersection of a ray with a triangle. However, other rendering APIs such as OpenGL also render triangles and triangles only, even though they don’t use the ray tracing algorithm. Modern GPUs in general are designed and optimized to perform a single type of rendering based on triangles. Someone (humorously) wrote on this topic:

Because current GPUs are designed to work with triangles, people use triangles and so GPUs only need to process triangles, and so they’re designed to process only triangles.

Limiting yourself to rendering one primitive only allows you to build common operations directly into the hardware (you can build a component that is extremely good at performing these operations). Generally, triangles are nice to work with for plenty of reasons (including those we already mentioned). They are always coplanar, and they are easy to subdivide into smaller triangles, yet a triangle itself cannot be reduced to a simpler polygon. The maths to interpolate texture coordinates across a triangle are also simple (something we will be using later to apply a texture to the geometry). This doesn’t mean that a GPU could not be designed to render other kinds of primitives efficiently (such as quads).
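To show how simple interpolation across a triangle can be, here is a short Python sketch that uses barycentric coordinates in 2D. This is one standard way of doing it, given here as an illustration under stated assumptions rather than the exact method used later in the lessons; the names `edge`, `barycentric`, and `interpolate_uv` are hypothetical, and the triangle is assumed not to be degenerate (its area is not zero).

```python
def edge(u, v, w):
    """Twice the signed area of the 2D triangle (u, v, w)."""
    return (v[0] - u[0]) * (w[1] - u[1]) - (v[1] - u[1]) * (w[0] - u[0])


def barycentric(p, a, b, c):
    """Weights (w0, w1, w2) such that p = w0*a + w1*b + w2*c.
    The three weights always sum to 1."""
    area = edge(a, b, c)
    w0 = edge(b, c, p) / area
    w1 = edge(c, a, p) / area
    w2 = edge(a, b, p) / area
    return w0, w1, w2


def interpolate_uv(p, triangle, uvs):
    """Interpolate per-vertex texture coordinates at point p."""
    w0, w1, w2 = barycentric(p, *triangle)
    (u0, v0), (u1, v1), (u2, v2) = uvs
    return (w0 * u0 + w1 * u1 + w2 * u2,
            w0 * v0 + w1 * v1 + w2 * v2)


triangle = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
uvs = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
uv_inside = interpolate_uv((1.0, 1.0), triangle, uvs)
```

The same three weights can be reused to interpolate any other quantity stored at the vertices, such as colors or normals.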

_Can I use quads instead of triangles?_ The triangle is not the only possible primitive used for rendering. The quad can also be used. Modeling or surfacing algorithms such as those that generate subdivision surfaces work best with quads. This is why quads are commonly found in 3D models. Why waste time triangulating these models if we could render quads as efficiently as triangles? It happens that even in the context of ray tracing, using quads can sometimes be better than using triangles (in addition to not requiring a triangulation, which is a waste when the model is already made out of quads, as just suggested). Ray tracing quads will be addressed in the advanced section on ray tracing.

A 3D Scene Is More Than Just Geometry

Typically, though, a 3D scene is more than just geometry. While geometry is the most important element of the scene, you also need a camera to look at the scene itself. Thus generally, a scene description also includes a camera. And a scene without any light would be black, thus a scene also needs lights. In rendering, all this information (the description of the geometry, the camera, and the lights) is contained within a file called the scene file. The content of the 3D scene can also be loaded into the memory of a 3D package such as Maya or Blender. In this case, when a user clicks on the render button, a special program or plugin will go through each object and each light contained in the scene, and export the whole lot (including the camera) directly to the renderer. Finally, you will also need to provide the renderer with some extra information such as the resolution of the final image, etc. These are usually called global render settings or options.
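As a rough picture of what such a scene description might hold, here is a minimal Python sketch. Every class and field name below is hypothetical and chosen only for illustration; real scene file formats and renderers organize this information in their own ways.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Mesh:
    vertices: List[Vec3]
    triangles: List[Tuple[int, int, int]]   # indices into `vertices`


@dataclass
class Light:
    position: Vec3
    intensity: float = 1.0


@dataclass
class Camera:
    position: Vec3
    look_at: Vec3
    fov_degrees: float = 60.0


@dataclass
class RenderOptions:
    width: int = 640     # resolution of the final image, in pixels
    height: int = 480


@dataclass
class Scene:
    camera: Camera
    meshes: List[Mesh] = field(default_factory=list)
    lights: List[Light] = field(default_factory=list)
    options: RenderOptions = field(default_factory=RenderOptions)


def missing_elements(scene: Scene) -> List[str]:
    """List what the scene still lacks before it is worth rendering."""
    missing = []
    if not scene.meshes:
        missing.append("geometry")
    if not scene.lights:
        missing.append("lights")
    return missing


scene = Scene(camera=Camera(position=(0.0, 0.0, 5.0), look_at=(0.0, 0.0, 0.0)))
before = missing_elements(scene)
scene.meshes.append(Mesh(vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
                         triangles=[(0, 1, 2)]))
scene.lights.append(Light(position=(0.0, 5.0, 5.0)))
after = missing_elements(scene)
```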

Summary

What you should remember from this chapter is that we first need to consider what a scene is made of before considering the next step, which is to create an image of that 3D scene. A scene needs to contain three things: geometry (one or several 3D objects to look at), lights (without which the scene will be black), and a camera, to define the point of view from which the scene will be rendered. While many different techniques can be used to describe geometry (polygonal meshes, NURBS, subdivision surfaces, implicit surfaces, etc.) and while each one of these types may be rendered directly using the appropriate algorithm, it is easier and more efficient to only support one rendering primitive. In ray tracing and on modern GPUs, the preferred rendering primitive is the triangle. Thus, generally, geometry will be converted to triangular meshes before the scene gets rendered.

An Overview of the Rendering Process: Visibility and Shading

An image of a 3D scene can be generated in multiple ways, but of course, any way you choose should produce the same image for any given scene. In most cases, the goal of rendering is to create a photo-realistic image (non-photorealistic rendering or NPR is also possible). But what does it mean, and how can this be achieved? Photorealistic means essentially that we need to create an image so “real” that it looks like a photograph or (if photography didn’t exist) that it would look like reality to our eyes (like the reflection of the world off the surface of a mirror). How do we do that? By understanding the laws of physics that make objects appear the way they do, and simulating these laws on the computer. In other words, rendering is nothing other than simulating the laws of physics responsible for making up the world we live in, as it appears to us. Many laws contribute to making up this world, but fewer contribute to how it looks. For example, gravity, which plays a role in making objects fall (gravity is used in solid-body simulation), has little to do with the way an orange looks. Thus, in rendering, we will be interested in what makes objects look the way they do, which is essentially the result of the way light propagates through space and interacts with objects (or matter more precisely). This is what we will be simulating.

Perspective Projection and the Visibility Problem

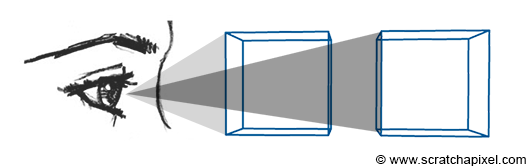

But first, we must understand and reproduce how objects look to our eyes. Not so much in terms of their appearance but more in terms of their shape and their size with respect to their distance to the eye. The human eye is an optical system that converges light rays (light reflected from an object) to a focus point.

Figure 1: the human eye is an optical system that converges light rays (light reflected from an object) to a focus point. As a result, by geometric construction, objects that are further away from our eyes appear smaller than those that are close.

As a result, by geometric construction, objects that are further away from our eyes appear smaller than those that are close (assuming all objects have the same size). Or to say it differently, an object appears smaller as we move away from it. Again, this is purely the result of the way our eyes are designed. But because we are accustomed to seeing the world that way, it makes sense to produce images that have the same effect: something called the foreshortening effect. Cameras and photographic lenses were designed to produce images of that sort. More than simulating the laws of physics, photorealistic rendering is also about simulating the way our visual system works. We need to produce images of the world on a flat surface, similar to the way images are created in our eyes (which is mostly the result of the way our eyes are designed; we are not too sure about how it works in the brain, but this is not important for us).

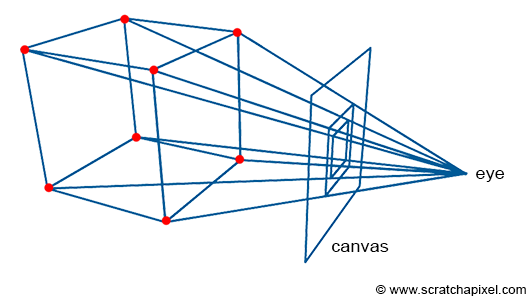

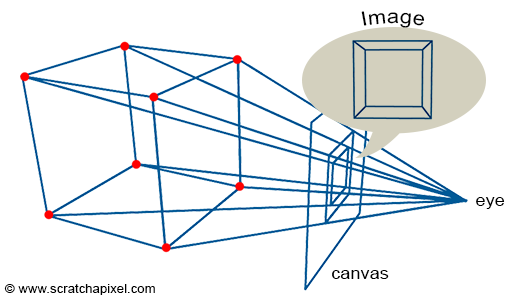

How do we do that? A basic method consists of tracing lines from the corner of objects to the eye and finding the intersection of these lines with the surface of an imaginary canvas (a flat surface on which the image will be drawn, such as a sheet of paper or the surface of the screen) perpendicular to the line of sight (Figure 2).

Figure 2: to create an image of the box, we trace lines from the corners of the object to the eye. We then connect the points where these lines intersect an imaginary plane (the canvas) to recreate the edges of the cube. This is an example of perspective projection.

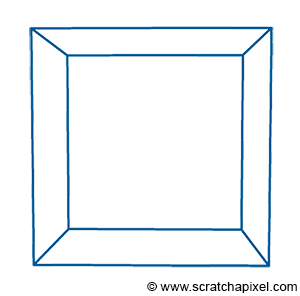

These intersection points can then be connected, to recreate the edges of the objects. The process by which a 3D point is projected onto the surface of the canvas (by the process we just described) is called perspective projection. Figure 3 shows what a box looks like when this technique is used to “trace” an image of that object on a flat surface (the canvas).

Figure 3: image of a cube created using perspective projection.

This sort of rendering in computer graphics is called a wireframe because only the edges of the objects are drawn. This image, though, is not photo-real. If the box were opaque, the front faces of the box (at most three of these faces) should occlude or hide the rear ones, which is not the case in this image (and if more objects were in the scene, they would potentially occlude each other). Thus, one of the problems we need to figure out in rendering is not only how we should be projecting the geometry onto the canvas, but also how we should determine which part of the geometry is visible and which part is hidden, something known as the visibility problem (determining which surfaces and parts of surfaces are not visible from a certain viewpoint). This process in computer graphics is known under many names: hidden surface elimination, hidden surface determination, hidden surface removal, occlusion culling, and visible surface determination. Why so many names? Because this is one of the first major problems in rendering, and for this particular reason, a lot of research was done in this area in the early days of computer graphics (and a lot of different names were given to the different algorithms that resulted from this research). Because it requires finding out whether a given surface is hidden or visible, you can look at the problem in two different ways: do I design an algorithm that looks for hidden surfaces (and removes them), or do I design one in which I focus on finding the visible ones? Of course, both should produce the same image in the end, but they can lead to different algorithms (some of which might be better than others).

The visibility problem can be solved in many different ways, but they generally fall within two main categories. In historical-chronological order:

- Rasterization,

- Ray-tracing.

Rasterization is not a common name, but for those of you who are already familiar with hidden surface elimination algorithms, it includes the z-buffer and painter’s algorithms among others. Almost all graphics cards (GPUs) use an algorithm from this category (likely z-buffering). Both methods will be detailed in the next chapter.

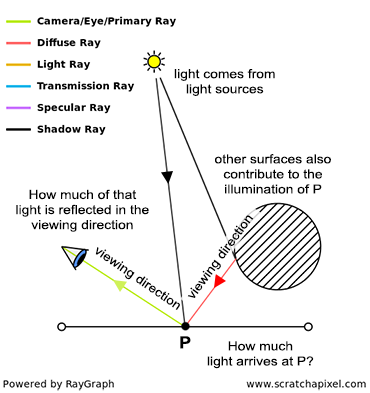

Shading

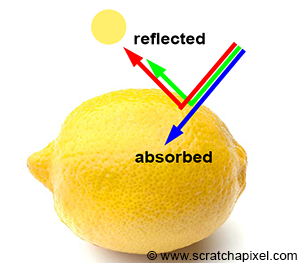

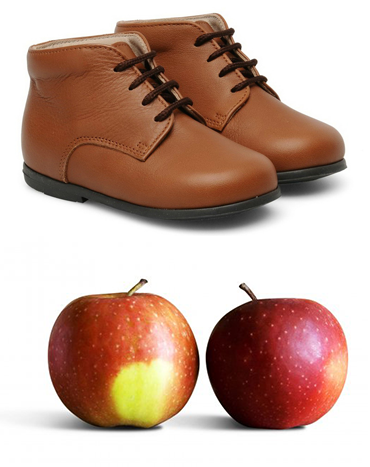

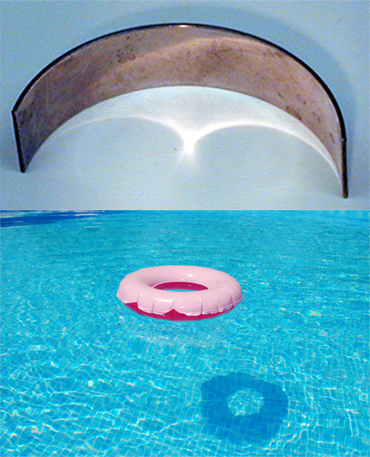

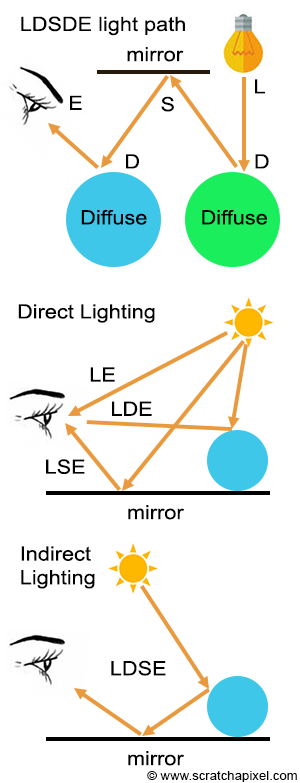

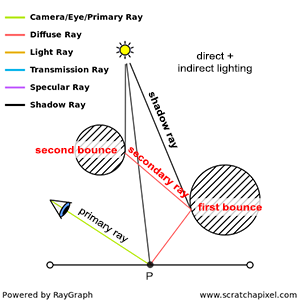

Even though we haven’t explained how the visibility problem can be solved, let’s assume for now that we know how to flatten a 3D scene onto a flat surface (using perspective projection) and determine which part of the geometry is visible from a certain viewpoint. This is a big step towards generating a photorealistic image, but what else do we need? Objects are not only defined by their shape but also by their appearance (this time not in terms of how big they appear in the image, but in terms of their look, color, texture, and how bright they are). Furthermore, objects are only visible to the human eye because light is bouncing off their surface. How can we define the appearance of an object? The appearance of an object can be defined as the way the material this object is made of interacts with light. Light is emitted by light sources (such as the sun, a light bulb, the flame of a candle, etc.) and travels in a straight line. When it comes in contact with an object, two things might happen to it. It can either be absorbed by the object or be reflected back into the environment. When light is reflected off the surface of an object, it keeps traveling (potentially in a different direction than the direction it came from initially) until it either comes in contact with another object (in which case the process repeats: light is either absorbed or reflected) or reaches our eyes (when it reaches our eyes, the photoreceptors in the eye convert light into an electrical signal which is sent to the brain).

Figure 4: an object appears yellow under white light because it absorbs most of the blue light and reflects green and red light, which combine to form a yellow color.

- Absorption gives objects their unique color. White light (check the lesson on color in the section Introduction to Computer Graphics) is composed of all colors making up the visible spectrum. When white light strikes an object, some of these light colors are absorbed while others are reflected. Mixed, these reflected colors define the color of the object. Under sunlight, if an object appears yellow, you can assume that it absorbs blue light and reflects a combination of red and green light, which combined form the yellow color. A black object absorbs all light colors. A white object reflects them all. The color of an object is unique to the way the material this object is made of absorbs light (it is a unique property of that material).

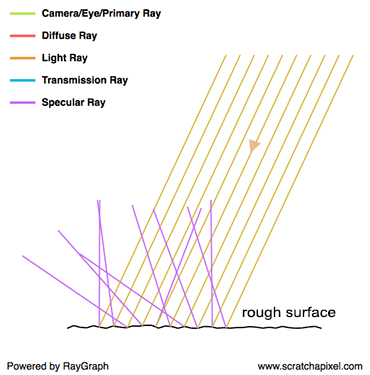

- Reflection. We already know that an object reflects light colors which it doesn’t absorb, but in which direction is this light reflected? It happens that the answer to this question is both simple and very complex. At the object level, light behaves no differently than a tennis ball when it bounces back from the surface of a solid object. It simply travels along a direction similar to the direction it came in but flipped around a vector perpendicular to the orientation of the surface at the impact point. In computer graphics, we call this direction a normal: the outgoing direction is a reflection of the incoming direction with respect to the normal. At the atomic level, when a photon interacts with an atom, the photon can either be absorbed or re-emitted by the atom in any new random direction. The re-emission of a photon by an atom is called scattering. We will speak about this term again in a very short while.

In CG, we generally won’t try to simulate the way light interacts with atoms, but the way it behaves at the object level. However, things are not that simple. While the maths involved in computing the new direction of a tennis ball bouncing off the surface of an object is simple, surfaces at the microscopic level (not the atomic level) are generally not flat at all, which causes light to bounce in all sorts of (almost random in some cases) directions. From the distance at which we generally look at common objects (a car, a pillow, a fruit), we don’t see the microscopic structure of objects, although it has a considerable impact on the way they reflect light and thus the way they look. However, we are not going to represent objects at the microscopic level, for obvious reasons (the amount of geometry needed would simply not fit within the memory of any computer, conventional or not). What do we do then? The solution to this problem is to come up with another mathematical model for simulating the way light interacts with any given material at the microscopic level. This, in short, is the role played by what we call a shader in computer graphics. A shader is an implementation of a mathematical model designed to simulate the way light interacts with matter at the microscopic level.

Light Transport

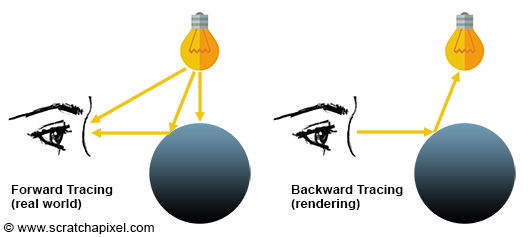

Rendering is mostly about simulating the way light travels in space. Light is emitted from light sources, and is reflected off the surface of objects, and some of that light eventually reaches our eyes. This is how and why we see objects around us. As mentioned in the introduction to ray tracing, it is not very efficient to follow the path of light from a light source to the eye. When a photon hits an object, we do not know the direction this photon will have after it has been reflected off the surface of the object. It might travel towards the eyes, but since the eye is itself very small, it is more likely to miss it. While it’s not impossible to write a program in which we simulate the transport of light as it occurs in nature (this method is called forward tracing), it is, as mentioned before, never done in practice because of its inefficiency.

Figure 5: in the real world, light travels from light sources (the sun, light bulbs, the flame of a candle, etc.) to the eye. This is called forward tracing (left). However, in computer graphics and rendering, it’s more efficient to simulate the path of light the other way around, from the eye to the object, to the light source. This is called backward tracing.

A much more efficient solution is to follow the path of light, the other way around, from the eye to the light source. Because we follow the natural path of light backward, we call this approach backward tracing.

Both terms are sometimes swapped in the CG literature. Almost all renderers follow light from the eye to the emission source. Because this is the ‘default’ implementation in computer graphics, some people prefer to call this method forward tracing. However, on Scratchapixel, we will use forward for when light goes from the source to the eye, and backward when we follow its path the other way around.

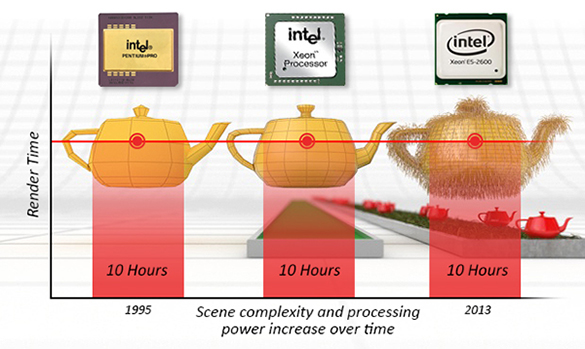

The main point here is that rendering is for the most part about simulating the way light propagates through space. This is not a simple problem, not because we don’t understand it well, but because if we were to simulate what truly happens in nature, there would be so many photons (or light particles) to follow the path of, that it would take a very long time to get an image. Thus in practice, we follow the path of very few photons instead, just to keep the render time down, but the final image is not as accurate as it would be if the paths of all photons were simulated. Finding a good tradeoff between photo-realism and render time is the crux of rendering. In rendering, a light transport algorithm is an algorithm designed to simulate the way light travels in space to produce an image of a 3D scene that matches “reality” as closely as possible.

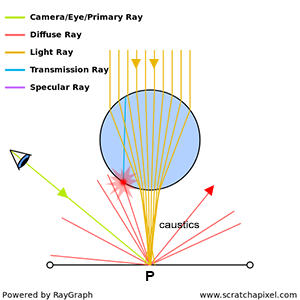

When light bounces off a diffuse surface and illuminates other objects around it, we call this effect indirect diffuse. Light can also be reflected off the surface of shiny objects, creating caustics (the disco ball effect). Unfortunately, it is very hard to come up with an algorithm capable of simulating all these effects at once (using a single light transport algorithm to simulate them all). In practice, it is often necessary to simulate these effects independently.

Light transport is central to rendering and is a very large field of research.

Summary

In this chapter, we learned that rendering can essentially be seen as a two-step process:

- The perspective projection and visibility problem on one hand,

- And the simulation of light (light transport) as well as the simulation of the appearance of objects (shading) on the other.

Have you ever heard the term **graphics or rendering pipeline**? The term is more often used in the context of real-time rendering APIs (such as OpenGL, DirectX, or Metal). The rendering process as explained in this chapter can be decomposed into at least two steps, visibility, and shading. Both steps though can be decomposed into smaller steps or stages (which is the term more commonly used). Steps or stages are generally executed in sequential order (the input of any given stage generally depends on the output of the preceding stage). This sequence of stages forms what we call the rendering pipeline.

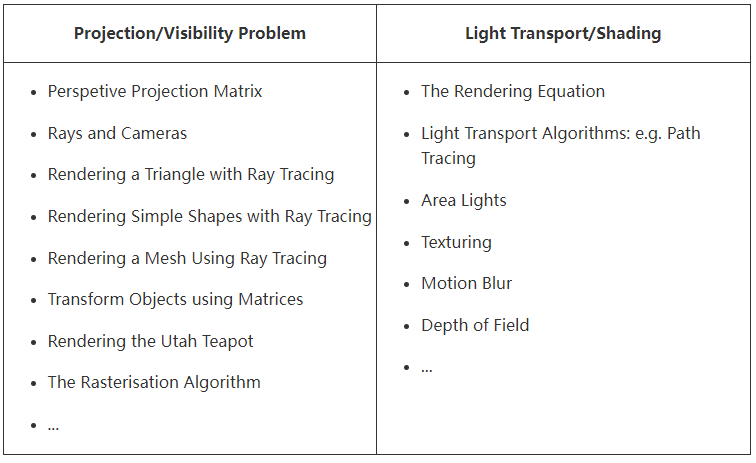

You must always keep this distinction in mind. When you study a particular technique, always try to think about whether it relates to one or the other. Most lessons from this section (and the advanced rendering section) fall within one of these two categories.

We will briefly detail both steps in the next chapters.

Perspective Projection

In the previous chapter, we mentioned that the rendering process could be looked at as a two-step process:

- projecting 3D shapes on the surface of a canvas and determining which part of these surfaces are visible from a given point of view,

- simulating the way light propagates through space, which combined with a description of the way light interacts with the materials objects are made of, will give these objects their final appearance (their color, their brightness, their texture, etc.).

In this chapter, we will only review the first step in more detail, and more precisely explain how each one of these problems (projecting the objects’ shape on the surface of the canvas and the visibility problem) is typically solved. While many solutions may be used, we will only look at the most common ones. This is just an overall presentation. Each method will be studied in a separate lesson and an implementation of these algorithms provided (in a self-contained C++ program).

Going from 3D to 2D: the Projection Matrix

Figure 1: to create an image of a cube, we just need to extend lines from the corners of the object towards the eye and find the intersection of these lines with a flat surface (the canvas) perpendicular to the line of sight.

An image is just a representation of a 3D scene on a flat surface: the surface of a canvas or the screen. As explained in the previous chapter, to create an image that looks like reality to our brain, we need to simulate the way an image of the world is formed in our eyes. The principle is quite simple. We just need to extend lines from the corners of the object towards the eye and find the intersection of these lines with a flat surface perpendicular to the line of sight. By connecting these points to draw the edges of the object, we get a wireframe representation of the scene.

It is important to note that this sort of construction is, in a way, a completely arbitrary way of flattening a three-dimensional world onto a two-dimensional surface. The technique we just described gives us what is called, in drawing, a one-point perspective projection, and this is generally how we do things in CG because this is how the eyes and also cameras work (cameras were designed to produce images similar to the sort of images our eyes create). But in the art world, nothing stops you from coming up with totally different rules. You can, in particular, create images with two-, three-, or four-point perspective.

One of the most important visual properties of this sort of projection is that an object gets smaller as it moves further away from the eye (the rear edges of a box are smaller than the front edges). This effect is called foreshortening.

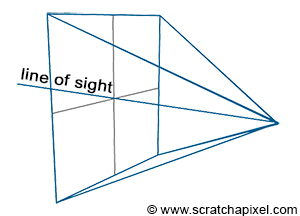

Figure 2: the line of sight passes through the center of the canvas.

Figure 3: the size of the canvas can be changed. Making it smaller reduces the field of view.

There are two important things to note about this type of projection. First, the eye is aligned with the center of the canvas. In other words, the line of sight always passes through the middle of the image (figure 2). Note also that the size of the canvas itself is something we can change. We can more easily understand the impact of changing the size of the canvas if we draw the viewing frustum (figure 3). The frustum is the pyramid defined by tracing lines from each corner of the canvas toward the eye, and extending these lines further down into the scene (as far as the eye can see). It is also referred to as the viewing volume. You can easily see that the only objects visible to the camera are those which are contained within the volume of that pyramid. By changing the size of the canvas we can either extend that volume or make it smaller. The larger the volume, the more of the scene we see. If you are familiar with the concept of focal length in photography, then you will have recognized that this has the same effect as changing the focal length of photographic lenses. Another way of saying this is that by changing the size of the canvas, we change the field of view.
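The link between the size of the canvas and the field of view can be made concrete with a little trigonometry. The sketch below assumes a canvas centered on the line of sight and perpendicular to it; the function name `fieldOfViewDegrees` and its parameters are hypothetical and only serve this illustration. Half of the canvas and the eye-to-canvas distance form a right triangle, so the full angle is twice the arctangent of their ratio.

```cpp
#include <cmath>

// Angle of view, in degrees, subtended at the eye by a canvas of size
// `canvasSize` (its width or its height) placed `eyeToCanvas` units
// away from the eye. Both arguments are assumed to be positive.
inline double fieldOfViewDegrees(double canvasSize, double eyeToCanvas)
{
    const double pi = std::acos(-1.0);
    const double halfAngle = std::atan(0.5 * canvasSize / eyeToCanvas);
    return 2.0 * halfAngle * 180.0 / pi;
}
```

With the canvas 1 unit away from the eye, a canvas 2 units wide gives a 90-degree field of view, and any smaller canvas gives a narrower one, which matches the behavior of the frustum described above.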

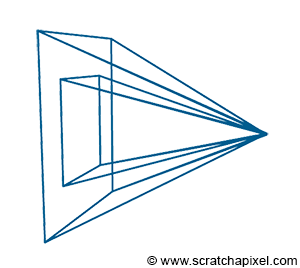

Figure 4: when the canvas becomes infinitesimally small, the lines of the frustum become orthogonal to the canvas. We then get what we call an orthographic projection. The game SimCity uses a form of orthographic view which gives it a unique look.

Something interesting happens when the canvas becomes infinitesimally small: the lines forming the frustum end up parallel to each other (they are orthogonal to the canvas). This is of course impossible in reality, but not impossible in the virtual world of a computer. In this particular case, you get what we call an orthographic projection. It’s important to note that orthographic projection can be seen as a limiting case of perspective projection, one in which the size of the canvas is virtually zero. This cancels out the foreshortening effect: the lengths of the edges of objects are preserved when projected to the screen.

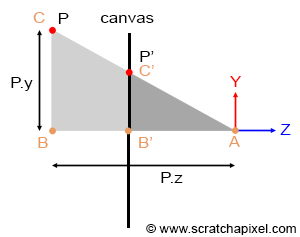

Figure 5: P’ is the projection of P on the canvas. The coordinates of P’ can easily be computed using the property of similar triangles.

Geometrically, computing the intersection point of these lines with the screen is incredibly simple. If you look at the adjacent figure (where P is the point projected onto the canvas, and P’ is this projected point), you can see that the angles \(\angle ABC\) and \(\angle AB'C'\) are the same. A is defined as the eye, AB is the distance of the point P along the z-axis (P’s z-coordinate), and BC is the distance of the point P along the y-axis (P’s y-coordinate). B’C’ is the y-coordinate of P’, and AB’ is the z-coordinate of P’ (and also the distance of the eye to the canvas). The two triangles ABC and AB’C’ also share the angle at A. When two triangles have the same angles, we say that they are similar. Similar triangles have an interesting property: the ratio of the lengths of their corresponding sides is constant. Based on this property, we can write that:

$$ { BC \over AB } = { B'C' \over AB' } $$

If we assume that the canvas is located 1 unit away from the eye (in other words, that AB’ equals 1; this is purely a convention to simplify this demonstration), and if we substitute AB, BC, AB’ and B’C’ with their respective points’ coordinates, we get:

$$ { BC \over AB } = { B'C' \over 1 } \rightarrow P'.y = { P.y \over P.z }. $$

In other words, to find the y-coordinate of the projected point, you simply need to divide the point’s y-coordinate by its z-coordinate. The same principle can be used to compute the x-coordinate of P’:

$$ P'.x = { P.x \over P.z }. $$

This is a very simple yet extremely important relationship in computer graphics, known as the perspective divide or z-divide (if you were on a desert island and needed to remember something about computer graphics, that would probably be this equation).
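The perspective divide translates almost literally into code. The following is a minimal sketch, assuming the eye sits at the origin, the canvas is 1 unit away from it (the convention used in the derivation), and points in front of the eye have a positive z-coordinate; the `Point3` and `Point2` types and the `perspectiveDivide` function are hypothetical names introduced for this example.

```cpp
#include <cassert>

// Hypothetical minimal point types, for illustration only.
struct Point3 { float x, y, z; };
struct Point2 { float x, y; };

// Project P onto a canvas located 1 unit away from the eye.
// Assumes the eye is at the origin and P lies in front of it (P.z > 0).
inline Point2 perspectiveDivide(const Point3& P)
{
    assert(P.z > 0.0f);  // a point at or behind the eye cannot be projected
    return { P.x / P.z, P.y / P.z };
}
```

For example, the point (2, 1, 4) projects to (0.5, 0.25). Moving it twice as far from the eye, to (2, 1, 8), halves its projected coordinates to (0.25, 0.125), which is the foreshortening effect expressed in numbers.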

In computer graphics, we generally perform this operation using what we call a perspective projection matrix. As its name indicates, it’s a matrix that when applied to points, projects them to the screen. In the next lesson, we will explain step by step how and why this matrix works, and learn how to build and use it.

But wait! Whether you need the perspective projection depends on the technique you use to solve the visibility problem. Anticipating what we will learn in the second part of this chapter, algorithms for solving the visibility problem fall into two main categories:

- Rasterization,

- Ray-tracing.

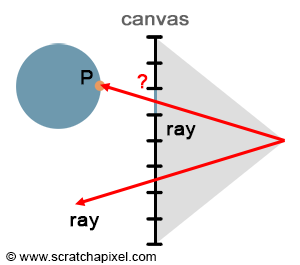

Algorithms of the first category rely on projecting P onto the screen to compute P’. For these algorithms, the perspective projection matrix is therefore needed. In ray tracing, rather than projecting the geometry onto the screen, we trace a ray passing through P’ and look for P. We don’t need to project P anymore with this approach since we already know P’, which means that in ray tracing, the perspective projection is technically not needed (and therefore never used).

We will study the two algorithms in detail in the next chapters and the next lessons. However, it is important to understand the difference between the two and how they work at this point. In rasterization, as explained before, the geometry needs to be projected onto the surface of the canvas. To do so, P is projected along an "implicit" line (implicit because we never really need to build this line, as we do with ray tracing) connecting P to the eye. You can see the process as if you were moving a point along that line from P to the eye until it lies on the canvas. That point would be P'. In this approach, you know P, but you don't know P'. You compute it using the projection approach. But you can also look at the problem the other way around. You can wonder whether, for any point on the canvas (say P', which by default we will assume is in the center of a pixel), there is a point P on the surface of the geometry that projects onto P'. The solution to this problem is to create a ray, explicitly this time, from the eye to P', extend or project this ray down into the scene, and find out if this ray intersects any 3D geometry. If it does, then the intersection point is P. Hopefully, you can now see more distinctly the difference between rasterization (we know P, we compute P') and ray tracing (we know P', we look for P).

The advantage of the rasterization approach over ray tracing is mainly speed. Computing the intersection of rays with geometry is a computationally expensive operation. This intersection time also grows linearly with the amount of geometry contained in the scene, as we will see in one of the next lessons. On the other hand, the projection process is incredibly simple, relies on basic math operations (multiplications, divisions, etc.), and can be aggressively optimized (especially if special hardware is designed for this purpose which is the case with GPUs). Graphics cards are almost all using an algorithm based on the rasterization approach (which is one of the reasons they can render 3D scenes so quickly, at interactive frame rates). When real-time rendering APIs such as OpenGL or DirectX are used, the projection matrix needs to be dealt with. Even if you are only interested in ray tracing, you should know about it for at least a historical reason: it is one of the most important techniques in rendering and the most commonly used technique for producing real-time 3D computer graphics. Plus, it is likely at some point that you will have to deal with the GPU anyway, and real-time rendering APIs do not compute this matrix for you. You will have to do it yourself.

The concept of rasterization is really important in rendering. As we learned in this chapter, the projection of P onto the screen can be computed by dividing the point's x- and y-coordinates by the point's z-coordinate. As you may guess, all initial coordinates are real numbers (floats, for instance), thus the coordinates of P' are also real numbers. However, pixel coordinates need to be integers; thus, to store the color of P' in the image, we need to convert its coordinates to pixel coordinates, in other words from floats to integers. We say that the point's coordinates are converted from screen space to raster space. More information can be found on this process in the lesson on rays and cameras.
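As a rough illustration of this float-to-integer conversion, here is a minimal sketch. It rests on several assumptions that are not spelled out at this point of the lesson: the canvas is centered on the origin of screen space with the y-axis pointing up, and the raster origin is the upper-left corner of the image with the y-axis pointing down. The `Pixel` type and the `screenToRaster` function are hypothetical names.

```cpp
#include <cmath>

// Integer pixel coordinates (hypothetical type, for illustration only).
struct Pixel { int x, y; };

// Convert a point from screen space to raster space.
// Assumed conventions: the canvas is centered on the origin with y up;
// the raster origin is the upper-left corner of the image with y down.
inline Pixel screenToRaster(float sx, float sy,
                            float canvasWidth, float canvasHeight,
                            int imageWidth, int imageHeight)
{
    // Remap from [-size/2, size/2] to the range [0, 1].
    const float nx = (sx + 0.5f * canvasWidth) / canvasWidth;
    const float ny = (sy + 0.5f * canvasHeight) / canvasHeight;
    // Scale to the image dimensions, flip y, and round down to integers.
    const int px = static_cast<int>(std::floor(nx * imageWidth));
    const int py = static_cast<int>(std::floor((1.0f - ny) * imageHeight));
    return { px, py };
}
```

With a 2 x 2 canvas and a 512 x 512 image, the center of the canvas (0, 0) lands on pixel (256, 256) and the upper-left corner (-1, 1) on pixel (0, 0). Points outside the canvas produce out-of-range pixel coordinates, so a caller would need to check the result before writing to the image.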

The next three lessons are devoted to studying the construction of the orthographic and perspective matrices, and how to use them in OpenGL to display images and 3D geometry.

The Visibility Problem

We already explained what the visibility problem is in the previous chapters. To create a photorealistic image, we need to determine which part of an object is visible from a given viewpoint. The problem is that when we project the corners of the box for example and connect the projected points to draw the edges of the box, all faces of the box are visible. However, in reality, only the front faces of the box would be visible, while the rear ones would be hidden.

In computer graphics, you can solve this problem using principally two methods: ray tracing and rasterization. We will quickly explain how they work. While it’s hard to know whether one method is older than the other, rasterization was far more popular in the early days of computer graphics. Ray tracing is notoriously more computationally expensive (and uses more memory) than rasterization, and thus is far slower in comparison. Computers back then were so slow (and had so little memory) that rendering images using ray tracing was not considered a viable option, at least in a production environment (to produce films, for example). For this reason, almost every renderer used rasterization (ray tracing was generally limited to research projects). However, for reasons we will explain in the next chapter, ray tracing is far better than rasterization when it comes to simulating effects such as reflections, soft shadows, etc. In summary, it’s easier to create photo-realistic images with ray tracing, but it takes longer than rendering geometry using rasterization, which in turn is less suited than ray tracing to simulating realistic shading and light effects. We will explain why in the next chapter. Real-time rendering APIs and GPUs generally use rasterization because, in real time, speed is what determines the choice of the algorithm. What was true for ray tracing in the 80s and 90s is, however, not true today. Computers are now so powerful that ray tracing is used by probably every offline renderer today (at the very least, they offer a hybrid approach in which both algorithms are implemented). Why? Because, again, it’s the easiest way of simulating important effects such as sharp and glossy reflections, soft shadows, etc. As long as speed is not an issue, it is superior in many ways to rasterization (making ray tracing work efficiently, though, still requires a lot of work).
Pixar’s PhotoRealistic RenderMan, the renderer Pixar developed to produce many of its first feature films (Toy Story, Finding Nemo, A Bug’s Life), was based on a rasterization algorithm called REYES (it stands for Renders Everything You Ever Saw). It is widely considered one of the best visible surface determination algorithms ever conceived, and the GPU rendering pipeline has many similarities with REYES. But their current renderer, called RIS, is now a pure ray tracer. Introducing ray tracing allowed the studio to greatly push the realism and complexity of the images it produced over the years.

Rasterization to Solve the Visibility Problem: How Does it Work?

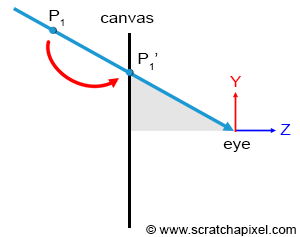

We have hopefully already made the difference between rasterization and ray tracing clear (see the previous chapter). However, let’s repeat that we can look at the rasterization approach as if we were moving a point along a line connecting P, a point on the surface of the geometry, to the eye until it “lies” on the surface of the canvas. Of course, this line is only implicit; we never really need to construct it, but this is how we can intuitively interpret the projection process.

Figure 1: the projection process can be seen as if the point we want to project was moved down along a line connecting the point or the vertex itself to the eye. We can stop moving the point along that line when it lies on the plane of the canvas. Obviously, we don’t “slide” the point along this line explicitly, but this is how the projection process can be interpreted.

Figure 2: several points in the scene may project to the same point on the canvas. The point visible to the camera is the one closest to the eye along the ray on which all these points are aligned.

Remember that what we need to solve here is the visibility problem. In other words, there may be situations in which several points in the scene, P, P1, P2, etc., project onto the same point P’ on the canvas (remember that the canvas is also the surface of the screen). However, the only point visible through the camera is the one closest to the eye along the line connecting the eye to all these points, as shown in Figure 2.

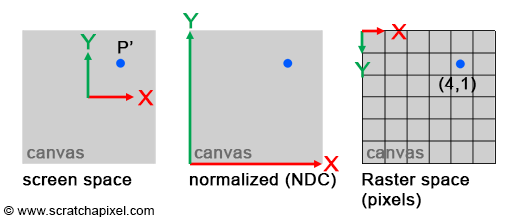

To solve the visibility problem, we first need to express P’ in terms of its position in the image: what are the coordinates of the pixel that P’ falls onto? Remember that projecting a point onto the surface of the canvas gives another point P’ whose coordinates are real numbers. However, P’ also necessarily falls within a given pixel of our final image. So how do we go from expressing P’ in terms of its position on the surface of the canvas to expressing it in terms of its position in the final image (the coordinates of the pixel that P’ falls onto)? This involves a simple change of coordinate systems.

- The coordinate system in which the point is originally defined is called screen space (or image space). Its origin is located in the center of the canvas. All axes of this two-dimensional coordinate system have unit length (their length is 1). Note that the x- or y-coordinate of any point defined in this coordinate system can be negative: x is negative if the point lies to the left of the y-axis, and y is negative if the point lies below the x-axis.

- The coordinate system in which points are defined with respect to the grid formed by the pixels of the image, is called raster space. Its origin is generally located in the upper-left corner of the image. Its axes also have unit length and a pixel is considered to be one unit length in this coordinate system. Thus, the actual size of the canvas in this coordinate system is given by the image’s vertical (height) and horizontal (width) dimensions (which are expressed in terms of pixels).

Figure 3: computing the coordinates of a point on the canvas in terms of pixel values requires transforming the point’s coordinates from screen space to NDC space, and from NDC space to raster space.

Converting points from screen space to raster space is simple. Because the coordinates of P’ expressed in raster space can only be positive, we first need to normalize the original coordinates of P’. In other words, we convert them from whatever range they are originally in to the range [0, 1] (when points are defined that way, we say they are defined in NDC space; NDC stands for Normalized Device Coordinates). Once converted to NDC space, converting the point’s coordinates to raster space is trivial: multiply the normalized coordinates by the image dimensions and round the result down to an integer (pixel coordinates are always whole numbers, or integers if you prefer). The range the coordinates of P’ are originally in depends on the size of the canvas in screen space. For the sake of simplicity, we will assume that the canvas is two units long in each of the two dimensions (width and height), which means that the coordinates of P’ in screen space are in the range [-1, 1]. Here is some code that converts the coordinates of P’ from screen space to raster space:

```cpp
#include <cstdio>

struct Vec3f { float x, y, z; };
struct Vec2f { float x, y; };
struct Vec2i { int x, y; };

int main()
{
    int width = 64, height = 64;  // dimensions of the image in pixels
    Vec3f P = {-1, 2, 10};
    // project P onto the canvas (perspective divide)
    Vec2f P_proj;
    P_proj.x = P.x / P.z;  // -0.1
    P_proj.y = P.y / P.z;  // 0.2
    // convert from screen space coordinates to normalized coordinates
    Vec2f P_proj_nor;
    P_proj_nor.x = (P_proj.x + 1) / 2;  // (-0.1 + 1) / 2 = 0.45
    P_proj_nor.y = (1 - P_proj.y) / 2;  // (1 - 0.2) / 2 = 0.4
    // finally, convert to raster space
    Vec2i P_proj_raster;
    P_proj_raster.x = (int)(P_proj_nor.x * width);   // (int)28.8 = 28
    P_proj_raster.y = (int)(P_proj_nor.y * height);  // (int)25.6 = 25
    // clamp coordinates that land exactly on the right or bottom edge
    if (P_proj_raster.x == width) P_proj_raster.x = width - 1;
    if (P_proj_raster.y == height) P_proj_raster.y = height - 1;
    std::printf("%d %d\n", P_proj_raster.x, P_proj_raster.y);
    return 0;
}
```

This conversion process is explained in detail in the lesson 3D Viewing: the Pinhole Camera Model.

There are a few things to notice in this code. First, the original point P, the projected point in screen space, and the point in NDC space all use the Vec3f or Vec2f types, in which coordinates are defined as real numbers (floats). However, the final point in raster space uses the Vec2i type, in which coordinates are defined as integers (the coordinates of a pixel in the image). Arrays are 0-indexed in C++ (as in most programming languages), so the coordinates of a point in raster space should never be greater than the image width minus one or the image height minus one. However, this can happen when a normalized coordinate is exactly 1 (for x, when the screen space coordinate of P’ is exactly 1; for y, when it is exactly -1, because of the inversion described next). The two `if` statements at the end of the code check for this case and clamp the coordinates to the valid range if it happens. Also, the origin of the NDC coordinate system is located in the lower-left corner of the image, whereas the origin of the raster space system is located in the upper-left corner (see figure 3). The y-coordinate therefore needs to be inverted somewhere along the way. This code folds the inversion into the normalization step, which is why `P_proj_nor.y` is computed as `(1 - P_proj.y) / 2` while `P_proj_nor.x` is computed as `(P_proj.x + 1) / 2`.

But why do we need this conversion? To solve the visibility problem we will use the following method:

- Project all points onto the screen.

- For each projected point, convert its coordinates from screen space to raster space.

- Find the pixel the point maps to (using the projected point’s raster coordinates), and store the distance from that point to the eye in a special list of points (called the depth list) maintained by that pixel.

- At the end of the process, sort the points in each pixel’s list by order of increasing distance. As a result, the point visible for any given pixel in the image is the first point in that pixel’s list.

_“You say, project all points onto the screen. How do we find these points in the first place?”_ Very good question. Technically, we would break down the triangles or polygons that objects are made of into smaller geometry elements no bigger than a pixel when projected onto the screen. In real-time APIs (OpenGL, DirectX, Vulkan, Metal, etc.) this is what we generally refer to as fragments. Check the lesson on the REYES algorithm in this section to learn how this works in more detail.

_Why do points need to be sorted according to their depth?_ The list needs to be sorted because points are not necessarily projected onto the screen in order of depth. Assuming you insert points at the top of the list, you may project a point B that is farther from the eye than a point A after you have projected A. In that case, B will be the first point in the list even though its distance to the eye is greater than A’s. Thus sorting is required.
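The depth-list method described above can be sketched as follows. This is only a minimal illustration under a few assumptions: points are assumed to be already projected, with screen space coordinates in [-1, 1] and a known distance to the eye, and all the names (`ProjectedPoint`, `DepthEntry`, `DepthLists`, `insertPoint`, `sortDepthLists`, `visiblePointId`) are hypothetical and not taken from any existing renderer.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical types, for illustration only.
struct ProjectedPoint {
    float x, y;      // position on the canvas in screen space, assumed in [-1, 1]
    float distance;  // distance from the point to the eye
    int id;          // identifies which scene point this is
};

struct DepthEntry { float distance; int id; };

// One depth list per pixel, stored row by row.
struct DepthLists {
    int width, height;
    std::vector<std::vector<DepthEntry>> lists;
    DepthLists(int w, int h)
        : width(w), height(h), lists(static_cast<std::size_t>(w) * h) {}
};

// Convert the point to raster space and append it to the list of the pixel it maps to.
void insertPoint(DepthLists& dl, const ProjectedPoint& p)
{
    if (p.x < -1 || p.x > 1 || p.y < -1 || p.y > 1) return;  // outside the canvas
    int px = std::min(static_cast<int>((p.x + 1) / 2 * dl.width), dl.width - 1);
    int py = std::min(static_cast<int>((1 - p.y) / 2 * dl.height), dl.height - 1);
    dl.lists[py * dl.width + px].push_back({p.distance, p.id});
}

// Sort every pixel's list by order of increasing distance to the eye.
void sortDepthLists(DepthLists& dl)
{
    for (auto& list : dl.lists)
        std::sort(list.begin(), list.end(),
                  [](const DepthEntry& a, const DepthEntry& b) { return a.distance < b.distance; });
}

// After sorting, the visible point is the first one in the pixel's list (-1 if the list is empty).
int visiblePointId(const DepthLists& dl, int px, int py)
{
    assert(px >= 0 && px < dl.width && py >= 0 && py < dl.height);
    const auto& list = dl.lists[py * dl.width + px];
    return list.empty() ? -1 : list.front().id;
}
```

Inserting a far point B and then a nearer point A at the same screen position leaves B first in the list until `sortDepthLists` is called, which is exactly the situation described above.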

An algorithm based on this approach is called a depth sorting algorithm (a self-explanatory name). The concept of depth ordering is the basis of all rasterization algorithms. Quite a few exist, the most famous of which are:

- the z-buffering algorithm. This is probably the most commonly used one from this category. The REYES algorithm which we present in this section implements the z-buffer algorithm. It is very similar to the technique we described in which points on the surfaces of objects (objects are subdivided into very small surfaces or fragments which are then projected onto the screen), are projected onto the screen and stored into depth lists.

- the painter’s algorithm

- Newell’s algorithm

- … (list to be extended)

Keep in mind that while this may sound old-fashioned to you, all graphics cards use an implementation of the z-buffer algorithm to produce images. These algorithms (at least z-buffering) are still commonly used today.

Why do we need to keep a list of points? Storing the point with the shortest distance to the eye shouldn't require storing all the points in a list. Indeed, you could very well do the following thing:

- For each pixel in the image, set the variable z to infinity.

- For each point in the scene:

  - Project the point and compute its raster coordinates.

  - If the distance z’ from the current point to the eye is smaller than the distance z stored in the pixel the point projects to, update z with z’. Otherwise, the point is farther away than the point currently stored for that pixel and can be ignored.
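The steps above can be sketched in a few lines of code. This is a minimal illustration, not the implementation used by any particular renderer or GPU: the `DepthBuffer` type and the `depthTest` function are hypothetical names, and the raster coordinates of the point are assumed to have been computed already.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical depth buffer: one distance (and one point id) per pixel.
struct DepthBuffer {
    int width, height;
    std::vector<float> z;  // nearest distance found so far, initialized to infinity
    std::vector<int> id;   // id of the nearest point found so far, -1 if none
    DepthBuffer(int w, int h)
        : width(w), height(h),
          z(static_cast<std::size_t>(w) * h, std::numeric_limits<float>::infinity()),
          id(static_cast<std::size_t>(w) * h, -1) {}
};

// Compare the distance zPrime of a new point with the distance stored in pixel (px, py).
// Returns true if the new point is closer and has replaced the stored one.
bool depthTest(DepthBuffer& buf, int px, int py, float zPrime, int pointId)
{
    assert(px >= 0 && px < buf.width && py >= 0 && py < buf.height);
    std::size_t i = static_cast<std::size_t>(py) * buf.width + px;
    if (zPrime < buf.z[i]) {
        buf.z[i] = zPrime;
        buf.id[i] = pointId;
        return true;
    }
    return false;  // the point is farther away than the one already stored
}
```

Each pixel only ever stores a single distance, so no list and no final sort are needed; the order in which the points are processed does not change the result.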

As you can see, you can get the same result without having to store a list of visible points and sort it at the end. So why did we use one? Because in the example above, we assumed that all points in the scene were opaque. But what happens if they are not fully opaque? If several semi-transparent points project to the same pixel, they may be visible through each other. In this particular case, it is necessary to keep track of all the points visible through that pixel, sort them by distance, and use a special compositing technique (we will learn about this in the lesson on the REYES algorithm) to blend them correctly.
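To give an idea of what blending a sorted list might look like, here is a small sketch. Note that it is an assumption on our part: it uses the common front-to-back "over" compositing scheme, which is not necessarily the technique presented in the REYES lesson. The `Sample` type and the `compositePixel` function are hypothetical names, and colors are reduced to a single gray value to keep the example short.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical sample type: a semi-transparent point seen through one pixel.
struct Sample {
    float distance;  // distance from the point to the eye
    float color;     // a single gray value, to keep the sketch short
    float opacity;   // 0 = fully transparent, 1 = fully opaque
};

// Sort the samples by increasing distance, then blend them front to back.
float compositePixel(std::vector<Sample> samples, float background)
{
    std::sort(samples.begin(), samples.end(),
              [](const Sample& a, const Sample& b) { return a.distance < b.distance; });
    float color = 0;
    float transmittance = 1;  // fraction of light not yet blocked by nearer samples
    for (const Sample& s : samples) {
        color += transmittance * s.opacity * s.color;
        transmittance *= 1 - s.opacity;
    }
    return color + transmittance * background;
}
```

With a half-transparent white sample in front of an opaque black one, the pixel ends up mid-gray, whichever order the two samples were inserted in. This is why the list has to be sorted before blending.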

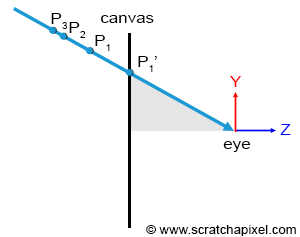

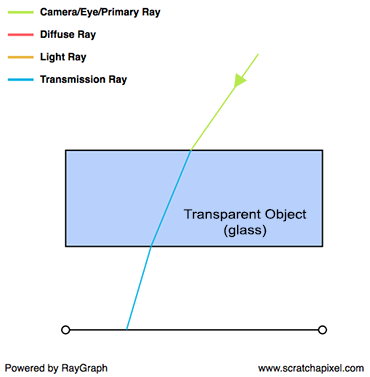

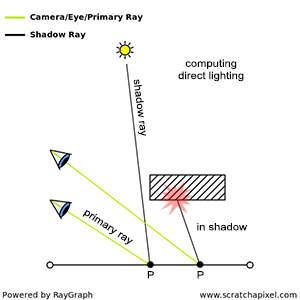

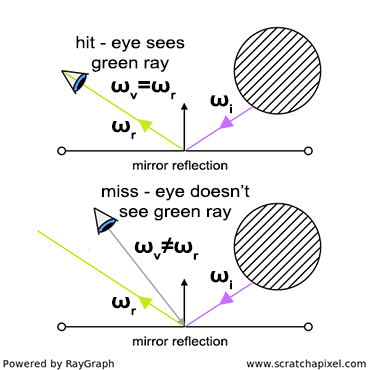

Ray Tracing to Solve the Visibility Problem: How Does It Work?

Figure 4: in raytracing, we explicitly trace rays from the eye down into the scene. If the ray intersects some geometry, the pixel the ray passes through takes the color of the intersected object.

With rasterization, points are projected onto the screen to find their respective positions on the image plane. But we can look at the problem the other way around. Rather than going from the point to the pixel, we can start from the pixel and convert it into a point on the image plane (we take the center of the pixel and convert its coordinates from raster space to screen space). This gives us P’. We can then trace a ray starting from the eye, passing through P’, and extend it down into the scene. If the ray intersects an object, then we know that the point of intersection P (the nearest one to the eye, if there are several) is the point visible through that pixel. In short, ray tracing solves the visibility problem by means of explicitly tracing rays from the eye down into the scene.
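The pixel-to-ray step can be sketched as the inverse of the screen-to-raster conversion shown earlier. This is a minimal illustration under the same simplifying assumptions as that code: the eye is at the origin, the canvas spans [-1, 1] in both dimensions, and it lies one unit in front of the eye along the positive z-axis. The `Ray` type and the `primaryRay` function are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct Vec3f { float x, y, z; };
struct Ray { Vec3f origin, direction; };

// Build the ray that starts at the eye and passes through the center of pixel (px, py).
Ray primaryRay(int px, int py, int width, int height)
{
    assert(px >= 0 && px < width && py >= 0 && py < height);
    // raster space to NDC space: the pixel center is at +0.5 in both dimensions
    float ndcX = (px + 0.5f) / width;
    float ndcY = (py + 0.5f) / height;
    // NDC space to screen space; y is inverted because raster y points down
    float screenX = 2 * ndcX - 1;
    float screenY = 1 - 2 * ndcY;
    // P' lies on the canvas, assumed to be one unit away from the eye along +z
    Vec3f d = {screenX, screenY, 1.0f};
    float len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    return {{0.0f, 0.0f, 0.0f}, {d.x / len, d.y / len, d.z / len}};
}
```

Projecting any point along the ray returned for a given pixel (dividing its x- and y-coordinates by its z-coordinate and converting to raster space) gives back that same pixel, which is one way of checking that the two conversions are consistent.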

Note that in a way, ray tracing and rasterization are a reflection of each other. They are based on the same principle, but ray tracing is going from the eye to the object, while rasterization goes from the object to the eye. While they make it possible to find which point is visible for any given pixel in the image (they give the same result in that respect), implementing them requires solving very different problems. Ray tracing is more complicated in a way because it requires solving the ray-geometry intersection problem. Do we even have a way of finding the intersection of a ray with geometry? While it might be possible to find a way of computing whether or not a ray intersects a sphere, can we find a similar method to compute the intersection of a ray with a cone for instance? And what about another shape, and what about NURBS, subdivision surfaces, and implicit surfaces? As you can see, ray tracing can be used as long as a technique exists to compute the intersection of a ray with any type of geometry a scene might contain (or your renderer might support).
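For the sphere at least, the answer is yes. Here is a minimal sketch of one common way of doing it, which substitutes the ray equation into the sphere equation and solves the resulting quadratic. The function name `intersectSphere` and its parameters are hypothetical, and this is an illustration of the idea rather than a robust production routine (it ignores floating-point precision issues, for instance).

```cpp
#include <cmath>

struct Vec3f { float x, y, z; };

static float dot(const Vec3f& a, const Vec3f& b)
{
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Points on the ray are orig + t * dir. Returns true if the ray hits the sphere in front
// of its origin, and writes the distance to the nearest such hit into t.
bool intersectSphere(const Vec3f& orig, const Vec3f& dir,
                     const Vec3f& center, float radius, float& t)
{
    Vec3f L = {orig.x - center.x, orig.y - center.y, orig.z - center.z};
    // coefficients of the quadratic a*t^2 + b*t + c = 0
    float a = dot(dir, dir);
    float b = 2 * dot(dir, L);
    float c = dot(L, L) - radius * radius;
    float discriminant = b * b - 4 * a * c;
    if (discriminant < 0) return false;  // the ray misses the sphere
    float sqrtDisc = std::sqrt(discriminant);
    float t0 = (-b - sqrtDisc) / (2 * a);  // nearer root
    float t1 = (-b + sqrtDisc) / (2 * a);  // farther root
    if (t0 > 0) { t = t0; return true; }
    if (t1 > 0) { t = t1; return true; }  // the ray starts inside the sphere
    return false;                         // the sphere is behind the ray
}
```

Every other type of geometry needs its own routine of this kind, which is precisely what makes supporting many geometry types in a ray tracer laborious.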

Over the years, a lot of research was put into efficient ways of computing the intersection of rays with the simplest of all possible shapes, the triangle, but also into directly ray tracing other types of geometry: NURBS, implicit surfaces, etc. However, one alternative to supporting all geometry types is to convert all geometry to a single representation before the rendering process starts, and have the renderer only test the intersection of rays with that one representation. Because triangles are an ideal rendering primitive, most of the time all geometry is converted to triangle meshes, which means that rather than implementing a ray-object intersection test per geometry type, you only need to test for the intersection of rays with triangles. This has many advantages:

- First, as suggested before, the triangle has many properties that make it very attractive as a geometry primitive. Its vertices are always coplanar. It is indivisible (you cannot create more faces by connecting its existing vertices, as you can with faces having four or more vertices), yet it can easily be subdivided into more triangles. Finally, the math for computing the barycentric coordinates of a point on a triangle (which are used in texturing) is simple and robust.

- Because triangles are a good geometry primitive, a lot of research was done to find the best possible ray-triangle intersection test. What is a good ray-triangle intersection algorithm? It needs to be fast (get to the result using as few operations as possible). It needs to use as little memory as possible (some algorithms are more memory-hungry than others because they require storing precomputed variables on the triangle geometry). And it also needs to be robust (floating-point arithmetic issues are hard to avoid).

- From a coding point of view, supporting one single routine is far more advantageous than having to code many routines to handle all geometry types. Supporting only triangles simplifies the code in many places, and also makes it possible to design code that works best with triangles in general. This is particularly true when it comes to acceleration structures. Computing the intersection of rays with geometry is by far the most expensive operation in a ray tracer. The time it takes to test a ray against all geometry in the scene grows linearly with the amount of geometry the scene contains. As soon as the scene contains even just hundreds of primitives, it becomes necessary to implement strategies that quickly discard sections of the scene which we know have no chance of being intersected by the ray, and to test only the subsections of the scene that the ray will potentially intersect. These strategies save a considerable amount of time and are generally based on acceleration structures. We will study acceleration structures in the section devoted to ray tracing techniques. Also, it is worth noting that specially designed hardware has already been built in the past to handle the ray-triangle intersection test specifically, allowing complex scenes to be ray traced in near real-time. It seems quite obvious that in the future, graphics cards will natively support the ray-triangle intersection test and that video games will evolve towards ray tracing.
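To make the last point more concrete, here is a minimal sketch of a ray-triangle intersection test together with the brute-force loop whose cost grows linearly with the number of triangles. The test follows the well-known Möller-Trumbore method, which is one possible choice among several and is not necessarily the one used later in these lessons. The names `intersectTriangle` and `nearestTriangle` are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Vec3f { float x, y, z; };
struct Triangle { Vec3f v0, v1, v2; };

static Vec3f sub(const Vec3f& a, const Vec3f& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(const Vec3f& a, const Vec3f& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3f cross(const Vec3f& a, const Vec3f& b)
{
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Moller-Trumbore test: returns true if the ray orig + t * dir hits the triangle
// in front of the ray origin, and writes the hit distance into t.
bool intersectTriangle(const Vec3f& orig, const Vec3f& dir, const Triangle& tri, float& t)
{
    const float epsilon = 1e-8f;
    Vec3f e1 = sub(tri.v1, tri.v0);
    Vec3f e2 = sub(tri.v2, tri.v0);
    Vec3f pvec = cross(dir, e2);
    float det = dot(e1, pvec);
    if (std::fabs(det) < epsilon) return false;  // ray parallel to the triangle
    float invDet = 1 / det;
    Vec3f tvec = sub(orig, tri.v0);
    float u = dot(tvec, pvec) * invDet;  // first barycentric coordinate
    if (u < 0 || u > 1) return false;
    Vec3f qvec = cross(tvec, e1);
    float v = dot(dir, qvec) * invDet;   // second barycentric coordinate
    if (v < 0 || u + v > 1) return false;
    t = dot(e2, qvec) * invDet;
    return t > 0;  // hits behind the ray origin do not count
}

// Brute force: test the ray against every triangle and keep the nearest hit.
// Returns the index of the nearest triangle, or -1 if the ray hits nothing.
int nearestTriangle(const Vec3f& orig, const Vec3f& dir,
                    const std::vector<Triangle>& triangles, float& tNearest)
{
    int hit = -1;
    tNearest = std::numeric_limits<float>::infinity();
    for (std::size_t i = 0; i < triangles.size(); ++i) {
        float t;
        if (intersectTriangle(orig, dir, triangles[i], t) && t < tNearest) {
            tNearest = t;
            hit = static_cast<int>(i);
        }
    }
    return hit;
}
```

The loop in `nearestTriangle` visits every triangle for every ray. An acceleration structure aims to replace that loop with something that only visits the triangles the ray has a chance of hitting.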

Comparing rasterization and ray-tracing